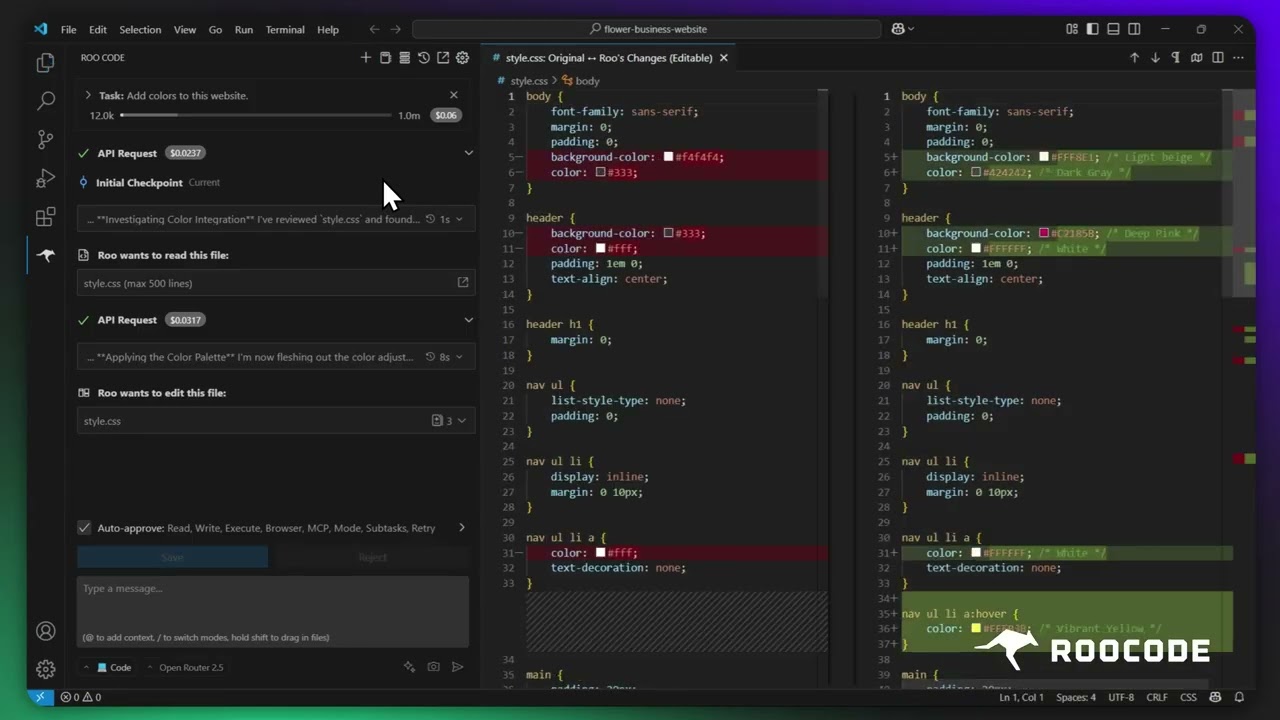

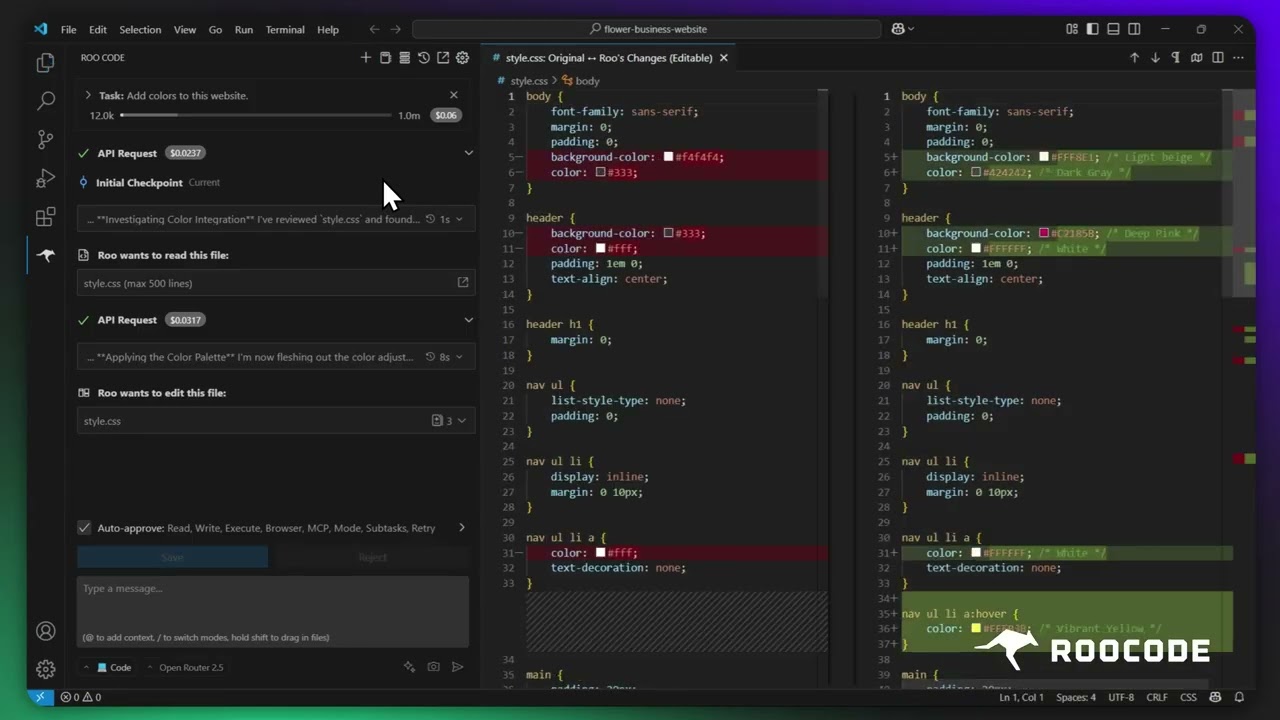

Installing Roo Code

Configuring Profiles

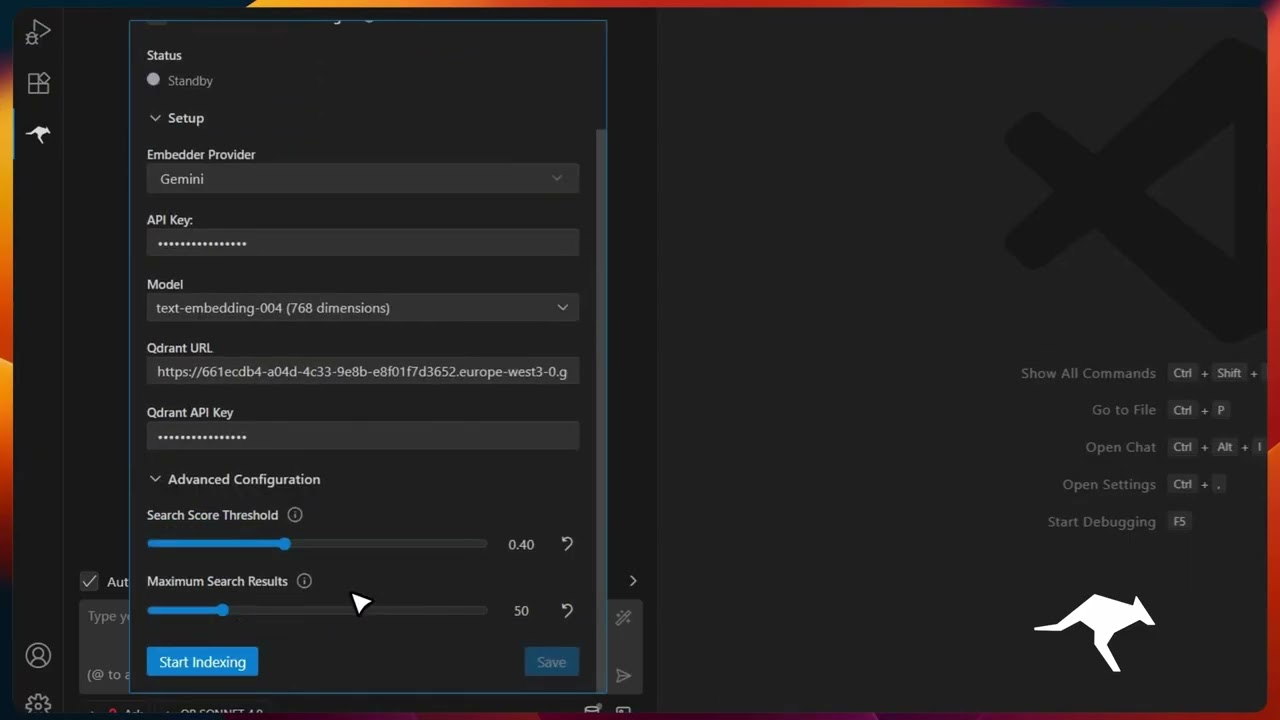

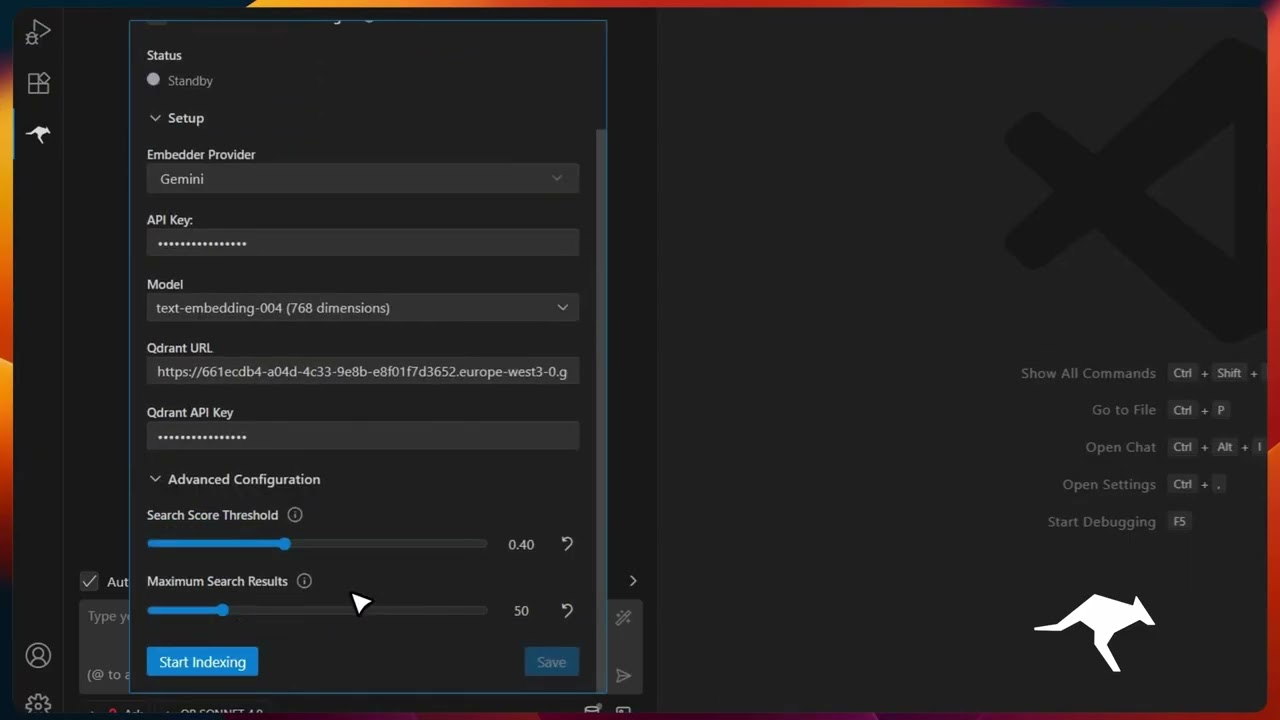

Codebase Indexing

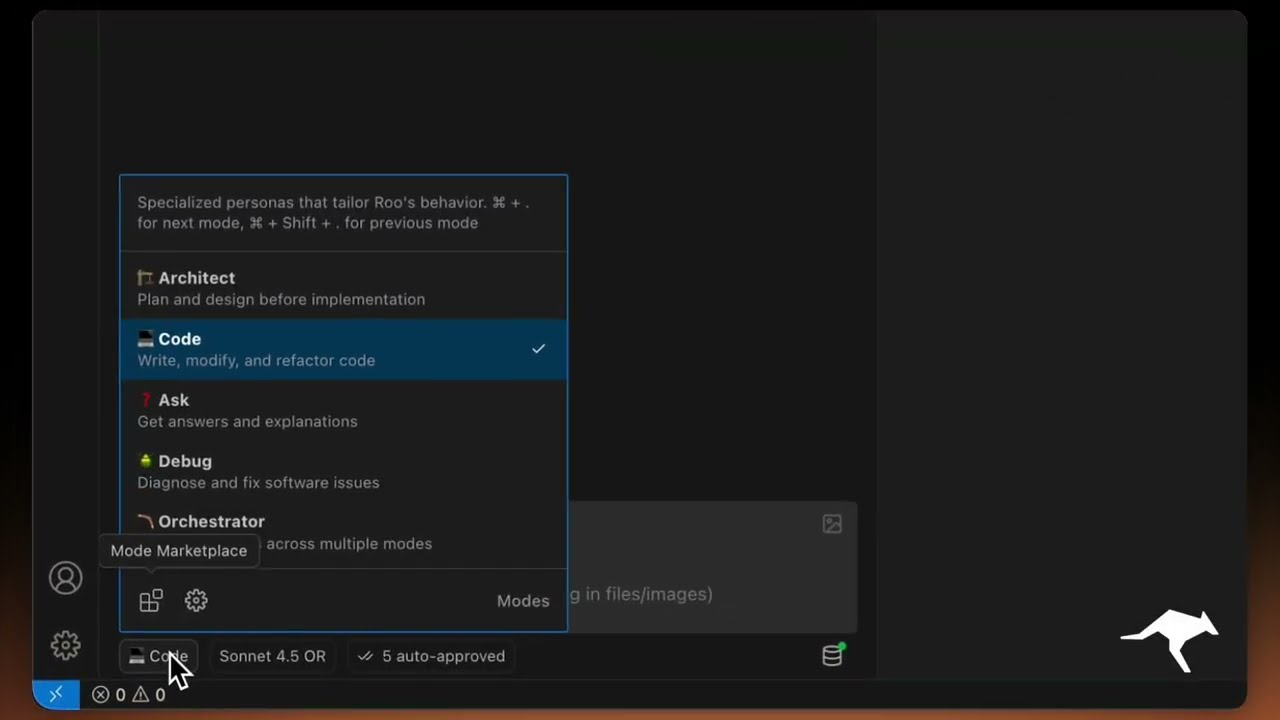

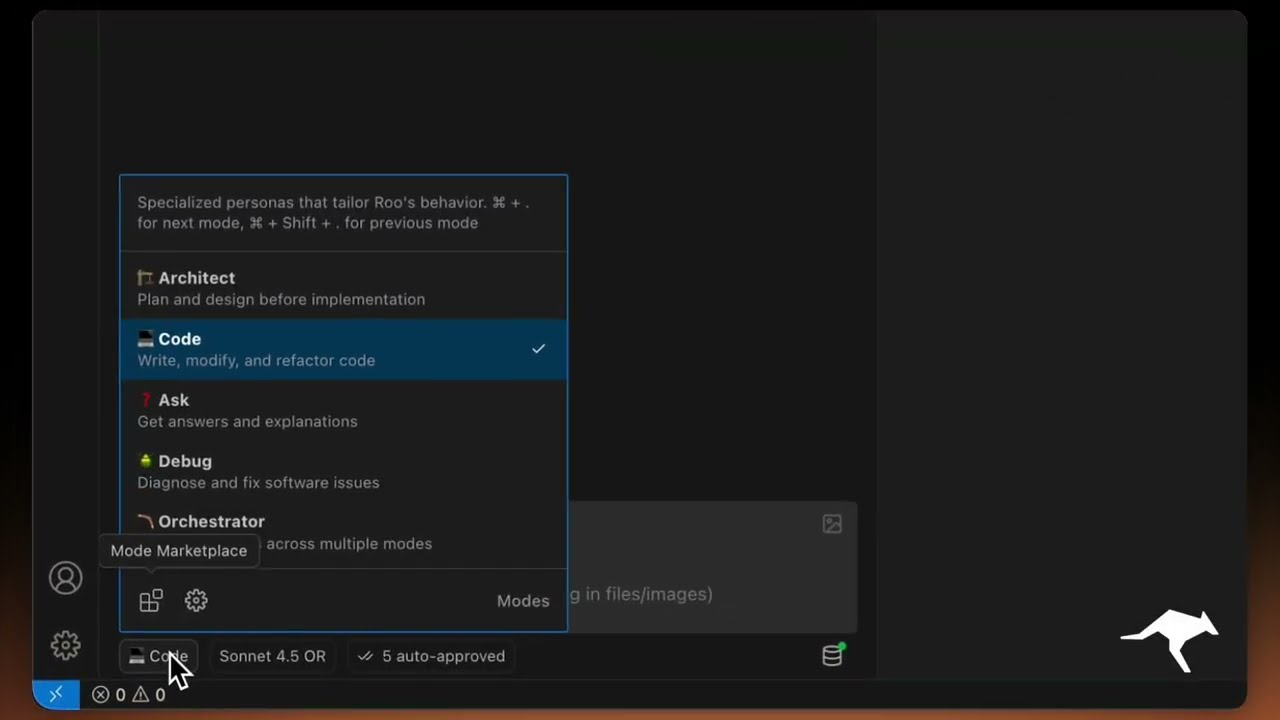

Custom Modes

Checkpoints

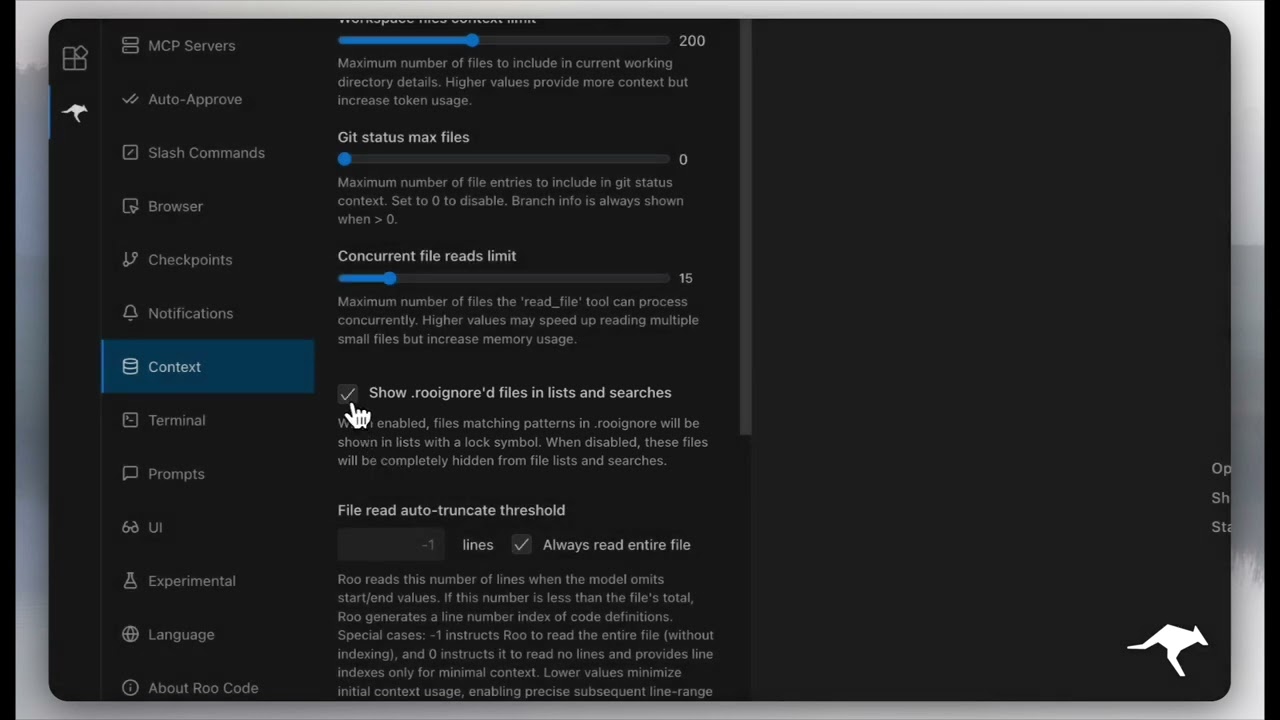

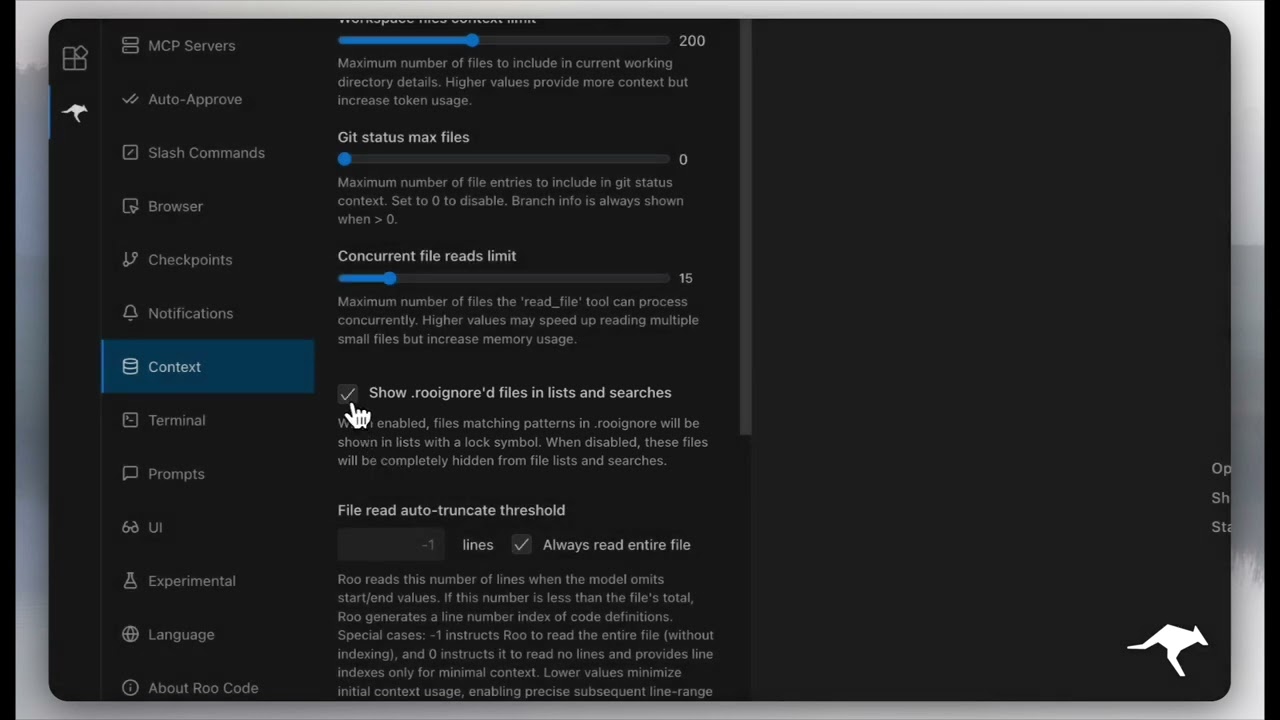

Context Management