Integrate quickly with the existing OpenAI-compatible chat completions API by changing only the base URL:

    import openai

    api_key = "YOUR_OPENAI_KEY"

    # Point the standard OpenAI client at the proxy instead of the default endpoint.
    client = openai.OpenAI(api_key=api_key, base_url="https://dev.defendai.tech")

    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Is this valid SSN 123-45-6789 ?"},
        ],
        stream=False,
    )

    # With openai>=1.0 the message is an object, so read it by attribute.
    print(response.choices[0].message.content)

Easily add policies to block, alert on, or anonymize prompts or responses by app and LLM model:

    from defendai_wozway import Wozway

    api_key = "YOUR_OPENAI_KEY"

    client = Wozway.Client(api_key=api_key, base_url="https://dev.defendai.tech")

    policies = [
        # Alert when prompts sent to gpt-4o from CopilotApp contain PII or GDPR data.
        {
            "policy_type": "Compliance",
            "direction": "PROMPT",
            "attributes": ["PII", "GDPR"],
            "action": "ALERT",
            "model": "gpt-4o",
            "app": "CopilotApp",
        },
        # Block malware or phishing content in prompts to deepseek-r1 from any app.
        {
            "policy_type": "Security",
            "direction": "PROMPT",
            "attributes": ["Malware", "Phishing"],
            "action": "BLOCK",
            "model": "deepseek-r1",
            "app": "Global",
        },
        # Anonymize SSNs in responses returned to StockAgent.
        {
            "policy_type": "Privacy",
            "direction": "RESPONSE",
            "attributes": ["SSN"],
            "action": "ANONYMIZE",
            "model": "claude-sonet-3.7",
            "app": "StockAgent",
        },
    ]

    client.apply(policies)

This service allows developers to easily secure requests and responses between their chat apps and LLM cloud services such as OpenAI, Groq, Gemini, Anthropic, Perplexity, and more, using policies managed through a cloud-driven UI or through APIs available via the Wozway SDK.
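As a rough illustration of the policy shape used in the example above, the sketch below checks a policy dictionary locally before it is sent anywhere. The allowed values are taken only from the examples in this README, and `validate_policy` is a hypothetical helper, not part of the Wozway SDK; the service may accept other keys or values.

```python
# Hypothetical client-side sanity check; not part of the Wozway SDK.
# Allowed values are inferred from the README examples only.
ALLOWED_ACTIONS = {"BLOCK", "ALERT", "ANONYMIZE"}
ALLOWED_DIRECTIONS = {"PROMPT", "RESPONSE"}
REQUIRED_KEYS = {"policy_type", "direction", "attributes", "action", "model", "app"}


def validate_policy(policy):
    """Return a list of problems; an empty list means the policy looks well formed."""
    problems = []
    missing = REQUIRED_KEYS - set(policy)
    if missing:
        problems.append("missing keys: " + ", ".join(sorted(missing)))
    if policy.get("action") not in ALLOWED_ACTIONS:
        problems.append(f"unknown action: {policy.get('action')!r}")
    if policy.get("direction") not in ALLOWED_DIRECTIONS:
        problems.append(f"unknown direction: {policy.get('direction')!r}")
    attributes = policy.get("attributes")
    if not isinstance(attributes, list) or not attributes:
        problems.append("attributes must be a non-empty list")
    return problems
```

Running a check like this over each entry before applying the list can surface typos such as a misspelled action early, without a round trip to the service.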

- Introduction

- Features

- Architecture

- Prerequisites

- Installation

- Usage

- Developer SDK

- Provision Tenant Video

- UI Walkthrough Video

- Support

- License

Wozway by DefendAI provides a secure and efficient way to manage communication between your chat client applications and various LLM cloud services. By running a simple script, you can start a tenant that proxies your LLM requests and responses through a policy-driven LLM proxy, adding an extra layer of security and abstraction. Each tenant gets a cloud domain with the ability to control traffic through the service.

- Secure proxying of requests to multiple LLM providers (OpenAI, Groq, etc.)

- Policy-based enforcement using the API or UI

- Support for regex, anonymization, code, phishing URL, prompt, and response detection

- Similarity search against a database of millions of malicious prompts to block requests or alert an admin

- Incidents, activities, and a dashboard for monitoring usage across all Gen AI apps
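To make the regex and anonymization features more concrete, here is a minimal sketch of what anonymizing a US Social Security number in a prompt or response could look like. The pattern, the `[SSN]` placeholder, and the `anonymize_ssn` name are illustrative assumptions; Wozway's actual detection logic runs in the cloud service and is not shown in this README.

```python
import re

# Illustrative pattern for the common dashed SSN form, e.g. 123-45-6789.
# This is an assumption for the sketch, not Wozway's real detector.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def anonymize_ssn(text, placeholder="[SSN]"):
    """Replace every dashed SSN-like token in text with a placeholder."""
    return SSN_PATTERN.sub(placeholder, text)


print(anonymize_ssn("Is this valid SSN 123-45-6789 ?"))
```

A real detector would likely also handle undashed forms and validate number ranges; the sketch only shows the general replace-on-match idea.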

Wozway runs alongside client apps and intelligently re-routes only LLM-bound traffic to DefendAI Cloud for policy enforcement and subsequent forwarding to LLM providers such as OpenAI, Anthropic, and Groq. All other traffic is routed to its intended destination without interference. Wozway can intercept both prompts and responses to run a full security check.
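The routing decision described above can be sketched as a small function that rewrites only requests aimed at known LLM hosts and leaves everything else untouched. How Wozway actually identifies LLM-bound traffic is not documented here, so the host list and the `route` function are assumptions for illustration; the proxy base URL is the one used in the examples in this README.

```python
from urllib.parse import urlsplit, urlunsplit

# Base URL taken from the examples in this README.
PROXY_BASE = "https://dev.defendai.tech"

# Assumed set of LLM provider hosts; the real list is not documented here.
LLM_HOSTS = {"api.openai.com", "api.anthropic.com", "api.groq.com"}


def route(url):
    """Send LLM-bound URLs to the proxy; return any other URL unchanged."""
    parts = urlsplit(url)
    if parts.hostname in LLM_HOSTS:
        proxy = urlsplit(PROXY_BASE)
        # Keep the original path and query, swap in the proxy scheme and host.
        return urlunsplit(
            (proxy.scheme, proxy.netloc, parts.path, parts.query, parts.fragment)
        )
    return url
```

For example, `route("https://api.openai.com/v1/chat/completions")` yields a URL on the proxy host with the same path, while a URL on any other host passes through as-is.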

- Python 3.7+

- pip package manager

- API keys for the LLM services you intend to use (e.g., an OpenAI or Groq API key)

- Docker
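A quick preflight check for the prerequisites listed above might look like the following sketch. The environment variable names `OPENAI_API_KEY` and `GROQ_API_KEY` are the conventional ones for those providers and are an assumption here, as is the `missing_prerequisites` helper itself; it is not part of this repository.

```python
import os
import shutil
import sys


def missing_prerequisites(env=None, which=shutil.which, version=None):
    """Return a list of prerequisites that appear to be missing."""
    env = os.environ if env is None else env
    version = sys.version_info if version is None else version
    missing = []
    if tuple(version[:2]) < (3, 7):
        missing.append("Python 3.7+")
    # Look for the pip and docker executables on PATH.
    for tool in ("pip", "docker"):
        if which(tool) is None:
            missing.append(tool)
    # Assumed variable names; use whichever your LLM provider expects.
    if not any(env.get(name) for name in ("OPENAI_API_KEY", "GROQ_API_KEY")):
        missing.append("LLM API key (OPENAI_API_KEY or GROQ_API_KEY)")
    return missing


if __name__ == "__main__":
    for item in missing_prerequisites():
        print("missing:", item)
```

The `env`, `which`, and `version` parameters exist only so the check can be exercised without touching the real machine state.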

- Clone the repository

      git clone https://github.com/Defend-AI-Tech-Inc/wozway.git
      cd wozway

- Install dependencies

      pip install -r requirements.txt

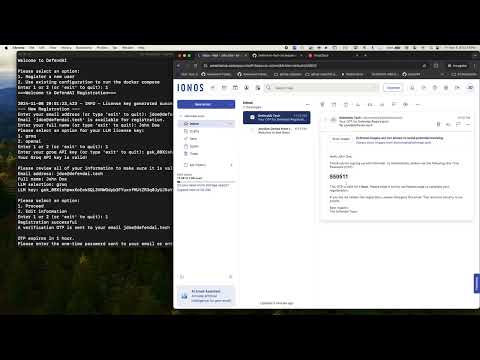

Run the following script to start your tenant:

    python start_tenant.py

This script asks a few questions to set up the cloud account and domain. It starts a local version of Open WebUI integrated with Wozway to proxy all requests through the cloud proxy to the LLM provider. Prompts and responses are intercepted based on the policies configured in the cloud console.

The SDK can be installed with the pip package manager.

    pip install defendai-wozway

Example usage:

    from defendai_wozway import Wozway
    import os

    # Read the bearer token from the environment rather than hard-coding it.
    with Wozway(
        bearer_auth=os.getenv("WOZWAY_BEARER_AUTH", ""),
    ) as s:
        res = s.activities.get_activities()

        if res is not None:
            # Print the prompt recorded for each activity.
            for activity in res:
                print(activity["prompt"])

Detailed SDK documentation can be found here: SDK Documentation.
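Once activities have been fetched, they can be filtered locally. The sketch below assumes, as in the SDK example in this README, that each activity can be indexed with a `"prompt"` key; the `prompts_matching` helper and the sample data are hypothetical and only illustrate the idea.

```python
import re


def prompts_matching(activities, pattern):
    """Return the prompts whose text matches the given regular expression."""
    regex = re.compile(pattern)
    return [a["prompt"] for a in activities if regex.search(a["prompt"])]


# Made-up sample data standing in for the result of get_activities().
sample = [
    {"prompt": "Is this valid SSN 123-45-6789 ?"},
    {"prompt": "Summarize this article"},
]

# Find prompts that contain a dashed SSN-like number.
print(prompts_matching(sample, r"\b\d{3}-\d{2}-\d{4}\b"))
```

With real data, `sample` would be replaced by the iterable returned from the SDK call.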

defendai_overview.mp4

If you encounter any issues or have questions, please open an issue on the GitHub repository or contact our support team at support@defendai.tech.

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.