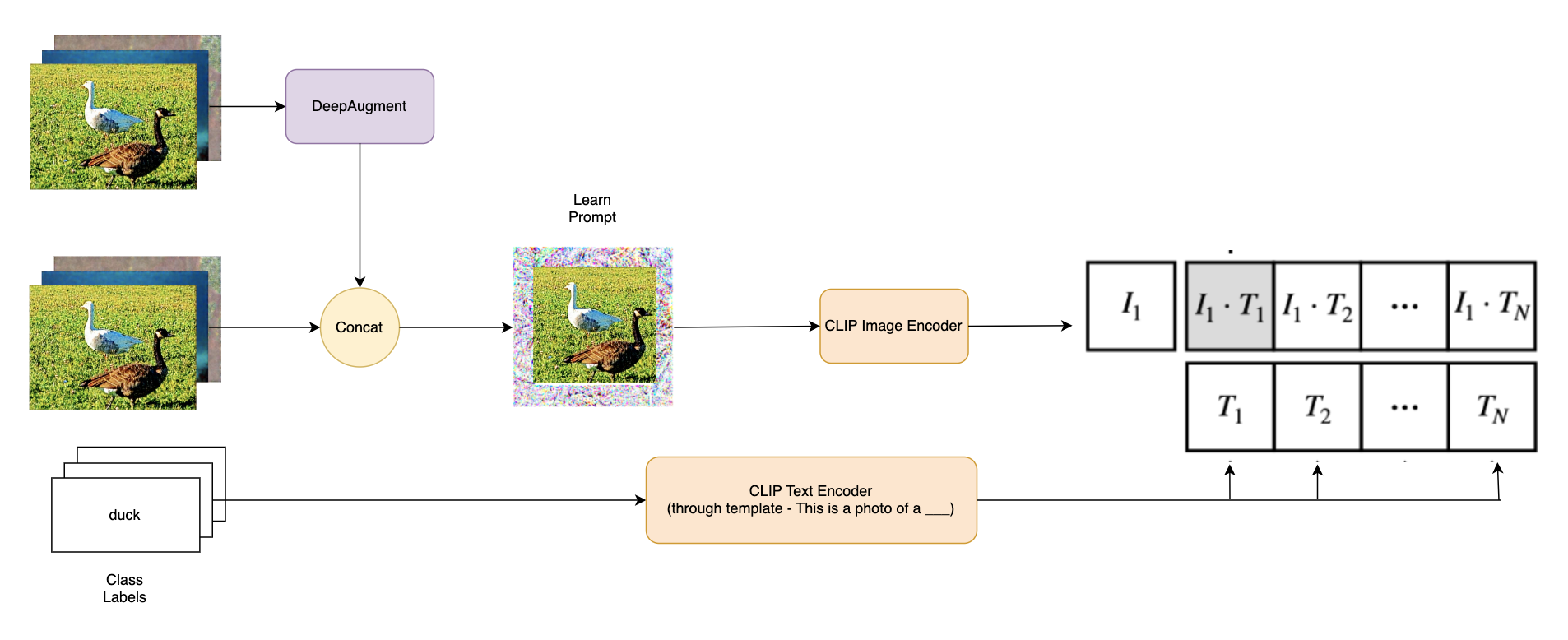

Visual prompting has recently gained traction for its ability to transfer what a model has learned on one distribution to a different, out-of-distribution dataset. Moreover, one of the best-performing models on ImageNet-C, a benchmark for the robustness of image classifiers, uses augmentation techniques such as DeepAugment, which performs data augmentation with an image-to-image neural network. In this work, we present a technique that is, to the best of our knowledge, novel: it combines visual prompting with DeepAugment to improve the robustness of large vision-language models, specifically CLIP. Apart from DeepAugment, we also compose visual prompting with techniques such as CutOut and CutMix, and find that CutMix performs especially well on CIFAR-C, which measures model robustness to common corruptions and perturbations.
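Visual prompting in the style of Bahng et al. (listed in the acknowledgements) keeps CLIP frozen and learns only a set of pixels that is added to every input image. A minimal sketch of a padding-style prompt follows, assuming plain nested lists in [channel][row][col] layout instead of tensors; the function names and the 30-pixel pad width are illustrative assumptions, not necessarily what the VisualPrompting scripts use.

```python
def padding_mask(size, pad):
    """Return a size x size mask: 1 on the border band of width `pad`, 0 inside."""
    return [
        [1 if (r < pad or r >= size - pad or c < pad or c >= size - pad) else 0
         for c in range(size)]
        for r in range(size)
    ]


def apply_prompt(image, prompt, mask):
    """Add the (learnable) prompt to the image only where mask == 1.

    `image` and `prompt` are [channel][row][col] nested lists of floats.
    """
    size = len(mask)
    return [
        [
            [image[ch][r][c] + mask[r][c] * prompt[ch][r][c] for c in range(size)]
            for r in range(size)
        ]
        for ch in range(len(image))
    ]


def num_prompt_params(size, pad, channels=3):
    """Number of learnable values in a padding prompt (border pixels x channels)."""
    return channels * (size * size - (size - 2 * pad) ** 2)


if __name__ == "__main__":
    print(num_prompt_params(224, 30))  # 69840
```

With a 224×224 RGB input and a 30-pixel border, the prompt has 69,840 learnable values, a small fraction of CLIP's parameters. In actual training the prompt would be a tensor updated by gradient descent rather than a nested list.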

To run the code, clone the repository and install the requirements:

pip install -r requirements.txt

To download the models, run the download_models.sh script.

- /DeepAugment contains scripts for evaluating deep-augmented samples for ImageNet and CIFAR-100.
- /Evaluation contains scripts for evaluating models on the CIFAR-C dataset.
- /ImageNetLoader contains scripts for loading and manipulating ImageNet data.
- /model_checkpoints_version_1 contains checkpoints for our saved models.
- /VisualPrompting contains scripts for training visual prompts.
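CIFAR-C results are commonly summarized by averaging accuracy over the five severity levels of each corruption and then over corruptions. The sketch below assumes the per-severity accuracies have already been computed (for example by the Evaluation scripts) and are passed in as a dictionary; the function name and input layout are hypothetical.

```python
from statistics import mean


def summarize_corruption_results(acc_by_corruption):
    """Summarize CIFAR-C style results.

    `acc_by_corruption` maps a corruption name (e.g. "gaussian_noise") to a list
    of accuracies in [0, 1], one per severity level.
    Returns (per-corruption mean accuracy, overall mean accuracy, overall mean error).
    """
    # Average over severities first, so every corruption type gets equal weight.
    per_corruption = {name: mean(accs) for name, accs in acc_by_corruption.items()}
    overall_acc = mean(list(per_corruption.values()))
    return per_corruption, overall_acc, 1.0 - overall_acc
```

For ImageNet-C, Hendrycks and Dietterich additionally normalize each corruption's error by AlexNet's error to obtain the mean corruption error (mCE); the sketch above reports the unnormalized average.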

To create DeepAugment-distorted CIFAR images with the CAE (convolutional autoencoder) model, run:

CUDA_VISIBLE_DEVICES=0 python3 DeepAugment/CAE_distort_cifar.py --total-workers=5 --worker-number=0
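DeepAugment (introduced alongside ImageNet-R, whose repository is acknowledged below) creates new training images by passing them through an image-to-image network whose weights are randomly perturbed. As a toy stand-in for the convolutional autoencoder that the script name suggests, the sketch below perturbs a single 3×3 identity convolution applied to a grayscale image with values in [0, 1]. All names and the noise scale are illustrative assumptions; the real pipeline applies richer perturbations to a pretrained network.

```python
import random

# An unperturbed identity kernel leaves the image unchanged.
IDENTITY_KERNEL = [[0.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.0, 0.0, 0.0]]


def perturb_kernel(kernel, std, rng):
    """Return a copy of `kernel` with Gaussian noise added to every weight."""
    return [[w + rng.gauss(0.0, std) for w in row] for row in kernel]


def conv3x3(image, kernel):
    """Zero-padded 3x3 cross-correlation on a 2-D image, clamped to [0, 1]."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:  # zero padding outside
                        acc += kernel[dr + 1][dc + 1] * image[rr][cc]
            out[r][c] = min(1.0, max(0.0, acc))
    return out


def deep_augment_toy(image, std=0.1, seed=None):
    """Distort `image` by running it through a randomly perturbed 'network'."""
    rng = random.Random(seed)
    return conv3x3(image, perturb_kernel(IDENTITY_KERNEL, std, rng))
```

Each call with a different seed yields a differently perturbed network, and hence a different distortion of the same image.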

To train visual prompts for CLIP with CutMix augmentation, run:

python VisualPrompting/main_clip_cutmix.py
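CutMix (Yun et al.; the ildoonet/cutmix implementation is acknowledged below) replaces a random rectangle of one training image with the same region from another image and mixes their labels in proportion to the pasted area. A minimal single-pair sketch follows, assuming grayscale images as nested lists, labels as class indices, and alpha = 1.0. The function names are hypothetical, and main_clip_cutmix.py may instead operate on batched tensors.

```python
import math
import random


def rand_bbox(width, height, lam, rng):
    """Sample a box covering roughly (1 - lam) of the image, clipped to its borders."""
    cut_ratio = math.sqrt(1.0 - lam)
    cut_w, cut_h = int(width * cut_ratio), int(height * cut_ratio)
    # Box centre is uniform over the image; the box may be clipped at the edges.
    cx, cy = rng.randrange(width), rng.randrange(height)
    x1 = min(max(cx - cut_w // 2, 0), width)
    y1 = min(max(cy - cut_h // 2, 0), height)
    x2 = min(max(cx + cut_w // 2, 0), width)
    y2 = min(max(cy + cut_h // 2, 0), height)
    return x1, y1, x2, y2


def cutmix(img_a, label_a, img_b, label_b, num_classes, alpha=1.0, seed=None):
    """Paste a random box from img_b into a copy of img_a and mix the labels.

    Images are 2-D (grayscale) nested lists of the same size.
    Returns (mixed image, soft target of length num_classes, final lambda).
    """
    rng = random.Random(seed)
    lam = rng.betavariate(alpha, alpha)  # mixing ratio ~ Beta(alpha, alpha)
    height, width = len(img_a), len(img_a[0])
    x1, y1, x2, y2 = rand_bbox(width, height, lam, rng)

    mixed = [row[:] for row in img_a]  # copy so the input is not modified
    for r in range(y1, y2):
        for c in range(x1, x2):
            mixed[r][c] = img_b[r][c]

    # Recompute lambda from the actual (possibly clipped) box area.
    lam = 1.0 - (x2 - x1) * (y2 - y1) / (width * height)
    target = [0.0] * num_classes
    target[label_a] += lam
    target[label_b] += 1.0 - lam
    return mixed, target, lam
```

Recomputing lambda after clipping keeps the label weights equal to the fraction of pixels actually taken from each image.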

To create augmented images using CutOut, run the following command:

python cutout/create_and_save_data.py --dataset cifar100 --data_augmentation --cutout --length 16 --batch_size 1
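CutOut masks a randomly positioned square patch of the input. The sketch below mirrors the --length 16 flag from the command above on a grayscale image stored as nested lists. It assumes a single hole whose center may fall anywhere in the image, so the square is clipped at the borders; the function name is hypothetical.

```python
import random


def cutout(image, length=16, seed=None):
    """Return a copy of `image` with one length x length square set to zero."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    # Centre is uniform over the whole image, so the square may be clipped.
    cy, cx = rng.randrange(h), rng.randrange(w)
    y1, y2 = max(cy - length // 2, 0), min(cy + length // 2, h)
    x1, x2 = max(cx - length // 2, 0), min(cx + length // 2, w)
    out = [row[:] for row in image]  # copy so the input is not modified
    for r in range(y1, y2):
        for c in range(x1, x2):
            out[r][c] = 0.0
    return out
```

On a 32×32 CIFAR image with length 16, the masked area ranges from 8×8 pixels (center in a corner) to the full 16×16.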

Once the augmented dataset has been created and concatenated with the original CIFAR-100 data, we run the prompt-tuning stage, which trains the network to learn the prompt parameters. To tune the prompt for the CLIP model, run the following command:

python3 VisualPrompting/main_clip.py --dataset cifar100 --root ./data --train_folder [TRAIN_FOLDER_PATH_WITH_AUGMENTATIONS] --val_folder [VAL_FOLDER_PATHS_WITH_AUGMENTATIONS] --num_workers 1 --batch_size 64
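During prompt tuning the CLIP encoders stay frozen: the embedding of the prompted image is compared with a text embedding for each class, and the prompt is updated to minimize the cross-entropy of those similarity scores. The sketch below computes that objective for a single example on plain-Python vectors. The function names and the fixed logit scale of 100 are assumptions; in practice the embeddings come from CLIP's encoders and the gradient with respect to the prompt is obtained via autograd.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def clip_prompt_loss(image_emb, class_text_embs, label, scale=100.0):
    """Cross-entropy over scaled image-text cosine similarities.

    Returns (loss, logits); logits[i] scores the image against class i.
    """
    logits = [scale * cosine(image_emb, t) for t in class_text_embs]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[label], logits
```

The predicted class is the index of the largest logit; only the prompt, not CLIP, would receive gradient updates from this loss.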

We would like to acknowledge the following works and resources:

- Hyojin Bahng, Ali Jahanian, Swami Sankaranarayanan, and Phillip Isola. Exploring visual prompts for adapting large-scale models, 2022. URL https://arxiv.org/abs/2203.17274.

- Dan Hendrycks and Thomas Dietterich. Benchmarking neural network robustness to common corruptions and perturbations, 2019. URL https://arxiv.org/abs/1903.12261.

- https://github.com/hjbahng/visual_prompting

- https://github.com/hendrycks/imagenet-r

- https://github.com/ildoonet/cutmix

- https://towardsdatascience.com/downloading-and-using-the-imagenet-dataset-with-pytorch-f0908437c4be

- https://towardsdatascience.com/pytorch-ignite-classifying-tiny-imagenet-with-efficientnet-e5b1768e5e8f#:~:text=Tiny%20ImageNet%20is%20a%20subset,images%2C%20and%2050%20test%20images