-

-

Notifications

You must be signed in to change notification settings - Fork 782

Experiment with running tests in parallel on GHA #5211

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

5e32c22 to

12b2047

Compare

12b2047 to

d20ef53

Compare

|

I was hoping for a bigger performance improvements, but there doesn't appear to be all that much. |

d20ef53 to

8730a84

Compare

test. This way test method can run in a standalone fashion.

|

@amanda11 Just a heads up - 78baa3b. Trying to parallelize test run uncovered a small issue with that unit test which was added recently. The test case / method relies on a state (pack being registered) from a previous test method which is usually bad practice which results in tests which can't run in standalone fashion, etc. Similar "cross test pollution" has bitten us many times in the past already (and I'm sure will bite us many more times in the future). |

|

Don't think that was my test! But agree with point. |

|

OK so after some more runs, there are some speed gains. Around 8-9 minutes for parallelized runs vs ~13-15 minutes for non-parallelized. I was hoping for a bigger gain, but that's probably still better than nothing. If we do decide to go with this approach, I just need to confirm code coverage works correctly - codecov.io supports multiple submissions and incremental updates (aka each job submits partial coverage which it generated) so I believe it should work out of the box if the current setup worked before already. For OSS projects, Github claims they offer 20 concurrent jobs at once so until we migrate more projects to GHA I think we should try to utilize as much concurrency for this main workflow as possible to make tests faster. Granted end to end tests will still be slow, but we need to start somewhere and can't fix everything at once. |

evenly across different jobs and speed up the build.

|

I made a couple of more optimizations and improvements, including caching apt packages (since that step takes up to 50 seconds). On that note, I also noticed some weird issue going on with cache not being saved (" Unable to reserve cache with key , another job may be creating this cache.") - actions/toolkit#658 even when only a single job runs at once 🤷 |

549f2f9 to

d755ba1

Compare

9e04340 to

581a61e

Compare

|

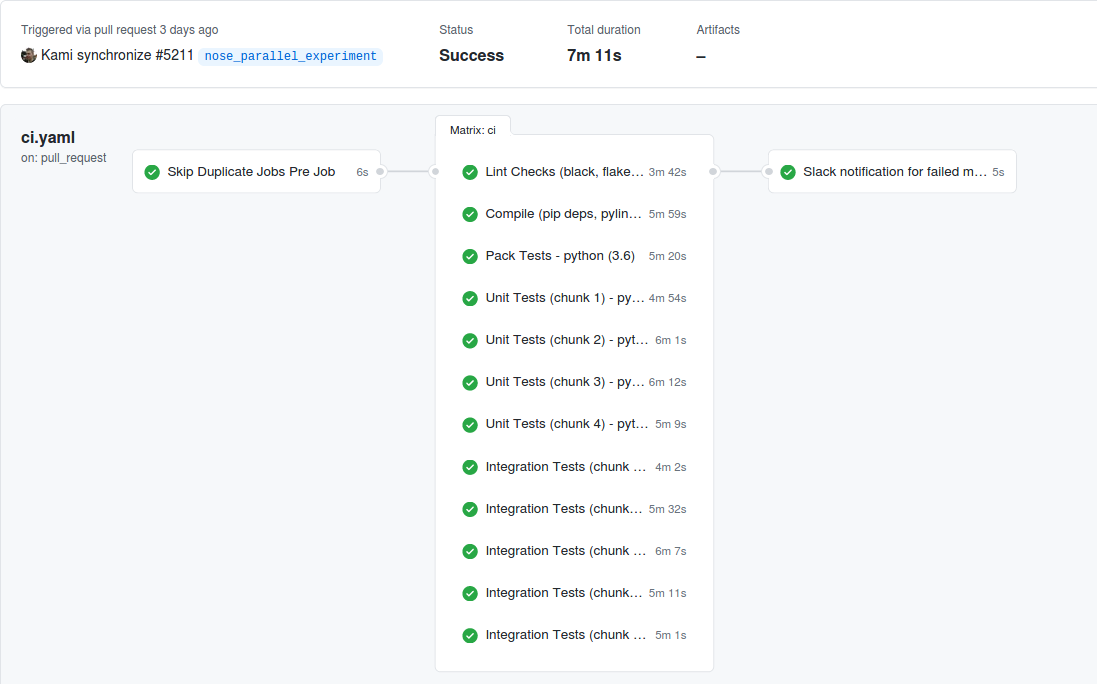

This is RFR now. Run time is now just under 8 minutes with primed cache (vs around 12-15 minutes before - e.g. https://github.com/StackStorm/st2/actions?query=event%3Apull_request+branch%3A5060_pip_to_latest, https://github.com/StackStorm/st2/actions?query=event%3Apull_request+branch%3Adependabot%2Fpip%2Fpyyaml-5.4). I'll update github checks after it's reviewed. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

With those changes, the whole workflow run now takes ~8-9 minutes instead of ~13-15 minutes.

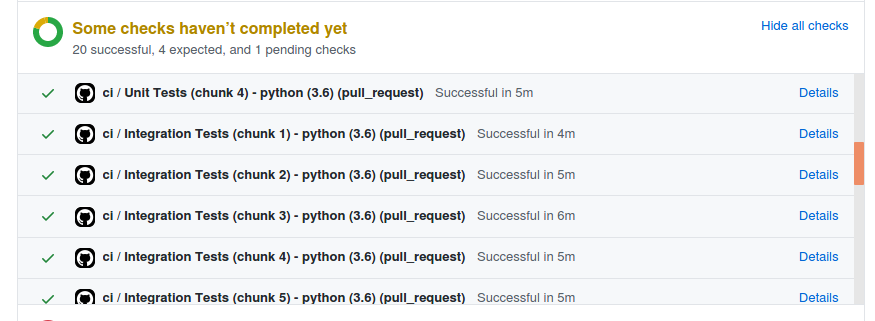

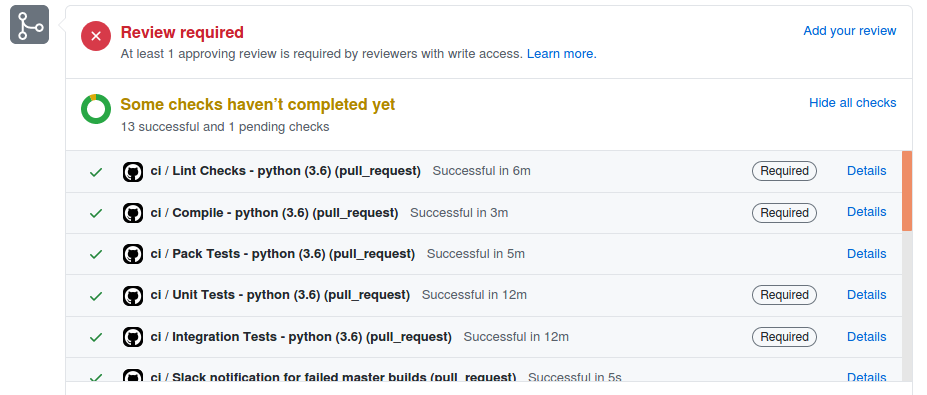

That's indeed an improvement in timing, but I'm wondering that multiplying the CI checks by such a significant number (x4-5) pollutes the UI here making scrolling excessive and indicators harder to observe:

.

.

Is that level of complexity and UX a good price to pay for a couple of minutes of improvement?

Another point, having the unit + integration tests optimized to sub 10mins, the bottleneck is still the CircleCI packaging which takes ~17 mins and e2e tests taking ~30mins. So it feels to me while the GH CI checks optimizations are nice, in reality, users still have to wait for other jobs to finish. Our CI is slow as the longest CI job on the checklist.

WDYT? Any ideas on how to find the balance here?

|

Yes, it does make UX a bit more complex, but I think it's a small price to pay (and well worth it) - scrolling couple of pages is still much faster than waiting multiple minutes on the test results :) And yes, sadly end to end tests will still be the bottleneck, but this doesn't mean we should not try to improve and speed up other checks. As far as packages go - IIRC, I already mentioned in the past that we should cache more things there (aka Python packages we download to speed up the build - |

|

I think splitting both Unit and Integration to |

|

@armab That wouldn't get much us much of a performance improvement (I started with 2). And again, I really don't think "CI checks spam" is a big issue - aka it's a compromise and it's still much better than the alternative (slower build). And yes, from a hypothetical "person who maybe contributes a couple of times per year" perspective, slightly longer build is not a big deal (and in that case people may be willing to trade off less checks for it), but for people who contribute on a regular basis, even a couple of minute speed up is well worth the compromise. Long term, if we want to reduce number of parallel jobs we can probably do that if we switch to self-hosted runners and use some faster CPU optimized VM / instance. |

|

I did (more than) my part - StackStorm/st2-packages#697 (comment). Now someone just needs to fix / improve end to end tests (as mentioned many times already, first step should be upgrading cicd server to 3.5dev packages which include my performance improvements - hoping that will speed things up a bit) :P |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

With the CircleCI optimizations you did (StackStorm/st2-packages#697) and now reducing the entire deb/rpm build time to ~ 10mins, let's please balance the build timing by lowering the Github Actions parallel number so the total wait time for all independent tasks is around 10mins and so it's practical.

With that, I think it's also a good time to remove the e2e checks for every PR and so it's not a blocker and we can request it on-demand via Github message/request in PR.

|

@armab Yeah, will remove 1 or 2 parallel jobs and see how it goes. As far as end to end tests go - I will still leave those enabled for the time being, until on demand workflow trigger via PR comment is implemented. |

|

@armab OK, so I removed two parallel jobs for each set of tests (integration, unit) and the overall workflow time was still around 8 minutes (with primed cache) which is still quite decent. I still hope that at some point in the future we will be able to utilize on demand runner on EC2 / Azure with a larger VM and faster CPU to speed things up even more. To put things into context - locally unit tests take around 5-6 minutes without any parallelization (granted, I do have a very fast CPU). |

|

I'll update github checks for master branch once this PR is merged. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM and thanks a lot for the optimization! 👍

36a4709 to

76d0422

Compare

…into nose_parallel_experiment

|

Merged into master, updated github checks. |

This pull request is an attempt to speed up unit and integration test runs on Github Actions CI by parallelizing the runs and utilizing multiple jobs.

Background, Context

Github actions offers 20 concurrent job runs (https://docs.github.com/en/actions/reference/usage-limits-billing-and-administration#usage-limits) for free plans / open source projects.

Since right now we only have a couple of jobs in this workflow, it makes sense to try to parallelize the tests to speed things. Slow tests are something which affects everyone, slow development cycle and in aggregate over time results in tons of wasted time waiting for CI.

This pull request tries to do that by utilizing nose parallel plugin and splitting tests across multiple jobs (we split both unit tests and integration tests runs into 4 chunks and jobs).

Another benefit with splitting tests into multiple chunks is identifying cross test pollution and dependencies which are not desired - we had a lot of issues in the past related to cross test pollution and state sharing (e.g. test X (usually not intentionally) relies on some state from test Y).

Having cross test dependencies means we can't run tests in isolation by itself which is not desired - each test should be able to run by itself and all the state should be created by the test itself and setUp / setUpClass methods.

This change already identified a couple of issues like that which I have fixed (this is also nothing new, we already fixed tons of issues like that in the past when we identified them - usually when manually trying to run some test in isolation).

Results

With those changes, the whole workflow run now takes ~8-9 minutes instead of ~13-15 minutes.

I was actually hoping for even a bigger speed up, but this is still better than nothing.

I'm not exactly sure why the speed up is not bigger and I need to dig in some more (there of course is some overhead involved with spinning up each job, running common steps, etc., but still), but even that is better than nothing and every minute counts.

It's worth noting that even if and when we start utilizing Github Actions for more projects, I don't think we should decrease number of concurrent job runs in this workflow - this is the primary and main workflow which runs the most and it needs to be fast. It doesn't matter that much if st2-rbac or some other repo job which runs maybe couple of times per month needs to wait a bit on the jobs from this workflow to finish.

Gotchas, etc.

I don't think there should be any gotchas and things should just work out of the box.

Only potential issue could be code coverage, but we utilize codecov.io which supports incremental result uploading and combining the results on the server side so as long as every test job uploads results to codecov.io, it should work fine without any issues.

TODO