We were among the 50 students from across the nation who participated in the AI @ Meta Hackathon at Meta's global HQ in Menlo Park, CA, where we explored how VR, AI, and mixed reality can make life easier and more meaningful.

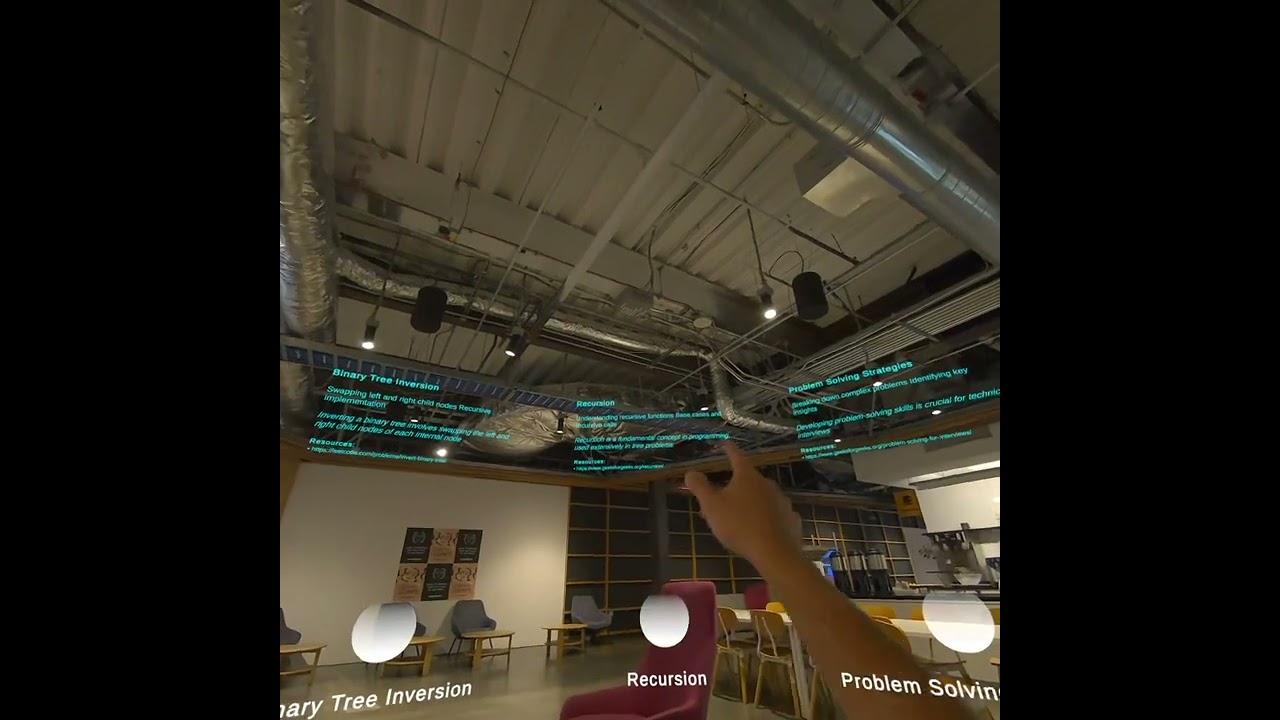

Spatial Scholar is a mixed reality learning companion built for the Meta Quest 3. It turns a spoken learning request into an interactive 3D concept map anchored in your real space, with an AI-guided explanation.

- Voice → lesson: you ask a question out loud (e.g., “Teach me BFS vs DFS”)

- Concept breakdown: the system generates a short explanation + a structured concept graph

- 3D visualization: nodes/edges render as a spatial graph in passthrough

- Speech + text: the explanation is spoken and saved for reuse

Learning content still lives on flat screens (docs, videos, chat windows). We wanted a way to:

- visualize abstract topics in 3D

- show relationships/prereqs spatially

- make explanations feel more “hands-on” and memorable

- Speech input is captured in Unity and transcribed to text

- A structured prompt is sent to Llama 4

- The model returns:

  - a short explanation

  - a JSON concept graph (nodes + relationships)

- Unity parses the JSON and renders a dynamic 3D graph in passthrough

- TTS reads the explanation out loud
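The pipeline above depends on the model replying in a shape the app can parse. Here is a minimal sketch of that contract, written in Python for brevity rather than the project's Unity C#. The prompt wording and the schema (`explanation`, `nodes` with `id`/`label`, `edges` with `source`/`target`/`relation`) are assumptions for illustration, not the project's actual format, and `build_prompt` and `parse_lesson` are hypothetical helper names.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

# Hypothetical prompt wording; the real prompt is not shown in this write-up.
PROMPT_TEMPLATE = (
    "Explain the topic below in under 120 words, then describe it as a concept graph.\n"
    "Reply with JSON only, using the keys explanation, nodes (id, label) "
    "and edges (source, target, relation).\n"
    "Topic: {topic}"
)


@dataclass(frozen=True)
class Node:
    id: str
    label: str


@dataclass(frozen=True)
class Edge:
    source: str
    target: str
    relation: str


def build_prompt(topic: str) -> str:
    """Wrap the transcribed request in the structured prompt."""
    return PROMPT_TEMPLATE.format(topic=topic.strip())


def parse_lesson(raw: str) -> tuple[str, list[Node], list[Edge]]:
    """Parse a model reply into (explanation, nodes, edges).

    Raises ValueError if the reply is not a JSON object or if an edge
    points at a node that was never declared. A node or edge missing a
    required key raises KeyError.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    explanation = str(data.get("explanation", "")).strip()
    nodes = [Node(n["id"], n["label"]) for n in data.get("nodes", [])]
    known = {n.id for n in nodes}

    edges = []
    for e in data.get("edges", []):
        if e["source"] not in known or e["target"] not in known:
            raise ValueError(f"edge references unknown node: {e}")
        edges.append(Edge(e["source"], e["target"], e.get("relation", "related")))
    return explanation, nodes, edges


# Made-up reply in the assumed schema, used only to exercise the parser.
SAMPLE_REPLY = """
{
  "explanation": "BFS explores level by level; DFS goes deep first.",
  "nodes": [
    {"id": "traversal", "label": "Graph traversal"},
    {"id": "bfs", "label": "BFS"},
    {"id": "dfs", "label": "DFS"}
  ],
  "edges": [
    {"source": "traversal", "target": "bfs", "relation": "includes"},
    {"source": "traversal", "target": "dfs", "relation": "includes"}
  ]
}
"""

if __name__ == "__main__":
    print(build_prompt("Teach me BFS vs DFS"))
    text, nodes, edges = parse_lesson(SAMPLE_REPLY)
    print(text)
    print([n.label for n in nodes], len(edges))
```

Rejecting edges that reference undeclared nodes before rendering keeps a malformed reply from producing dangling lines in the 3D graph; the explanation string can go to TTS independently of whether the graph parses.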

- Meta Quest 3

- Unity (C#)

- Meta XR SDK (passthrough / spatial visualization)

- Llama 4 (reasoning + JSON graph generation)

- Hugging Face + ElevenLabs (model + speech integration)

- Custom agents: LlmAgent, SpeechToTextAgent, TextToSpeechAgent

- JSON graph parser + runtime graph layout/renderer
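To give a feel for what a runtime layout step has to produce, here is a deliberately simple sketch that spaces nodes evenly on a ring around the viewer. This is a stand-in technique chosen for clarity, not the layout the project uses; `ring_layout` and its `radius` and `height` defaults are hypothetical, and it is written in Python rather than Unity C#.

```python
import math


def ring_layout(node_ids, radius=1.2, height=1.4):
    """Place nodes evenly on a horizontal circle around the origin.

    Returns {node_id: (x, y, z)} in metres with y pointing up, matching
    Unity's convention. The defaults are illustrative values only.
    """
    count = len(node_ids)
    positions = {}
    for index, node_id in enumerate(node_ids):
        # Angle 0 puts the first node straight ahead on the +z axis.
        angle = 2 * math.pi * index / count
        positions[node_id] = (
            radius * math.sin(angle),
            height,
            radius * math.cos(angle),
        )
    return positions


if __name__ == "__main__":
    for node_id, position in ring_layout(["traversal", "bfs", "dfs"]).items():
        print(node_id, position)
```

A ring keeps every node at the same distance from the viewer, which is easy to reason about in passthrough. A real concept graph would likely want something that respects edges, such as a force-directed or hierarchical layout.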

- Voice-based requests

- LLM explanation output (speech + text)

- JSON concept graph generation

- 3D nodes + edges in passthrough

- Expandable/interactable graph layout

- Hand-based node expansion and richer visual subtopics

- Persistent “knowledge rooms”

- Multi-user sessions

- Light assessments (quick checks / recall prompts)

- Optional integrations (Canvas / Google Classroom)

Built by Ansh, Riten, Vinay, Ashlie, and Nathan