-

Notifications

You must be signed in to change notification settings - Fork 16.4k

Amazon Provider Package user agent #27823

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Two failing tests are also failing in main. |

|

This is my personal thoughts

|

Moved to a code comment so it can be a threaded conversation; see below |

9ff7e6b to

a5fc3bc

Compare

|

Any other thoughts on this? |

uranusjr

left a comment

uranusjr

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Pending the discussion on telemetry

potiuk

left a comment

potiuk

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Some static checks need fixing, but otherwise it looks good.

a5fc3bc to

a5f4de0

Compare

|

CI build timed out. Going to bump it. |

|

Two tests are failing in |

They do not seem to fail on "canary builds" in CI: for main, so there must be something in your changes that cause it https://github.com/apache/airflow/actions/runs/3607606417 |

I don't see how that's possible. If I check out main, git pull --rebase, and run the tests, they fail. My code is all in a different branch. |

|

I am afraid you are one of the few unlucky ones who will have to deal with side-effects. It is very likely that those tests that fail, rely on side effects from other tests. It's also possible that your tests are clearing/removing those side effects - that's why those tests start to fail in your PR. Did you run all tessts from providers?

Those should run full "amazon providers" suite in the same sequence they are run in CI - run them on If you run those tests individually and they fail - it means they are relying on side effects from other tests. This is because most of our unit tests rely on dags/dagruns/connections etc. created in the unit test DB and the DB is re-used across all the tests. The only way to solve it for now is to investigate and fix those tests that indeed rely on side effects. That might involve writing a fixture or setUp method that restores the expected state of the DB records that the test expects - many tests have those, but some of them not and rely on the DB being populated from previous tests - and this is the problem. Yes. It's not your fault. But also yes - it, unfortunately, falls on your shoulders essentially if your newly added tests or the modified ones interfere with it. Ideally by finding and resolving the side-effects in the other tests so that the situation gets improved for the future. It happens rarely enough that it is not a "common" problem, but unfortunately, you fell a victim of it likely. And yes it is NOT how it should be. But - unfortunately, we are not living in perfect world and it is, what it is. And hopefully one day we will be able to solve it better and get rid even of the possibility of the side effects to happen, but that would likely require a lot of investment into complete refactoring on how 100s if not 1000s of tests are implemented, so it's totally not feasible to solve it now once and for all, I am afraid. We can complain about it (I do) but this is - unfortuntely - quite an effort to fix. And if anyone has an idea how to solve it quickly - that would be fantastic We have tried in the past to just clean the db for every unit tests but with many thousands of them this slows down the whole suite to a crawl. Hopefully some day we will figure how to fix it better. But I am afraid quoting BTW. This is actually one af a good ideas on how to make an overall improvement in our tests suite to fix this problem permanently. |

|

@ferruzzi

It might happen by two things:

|

I think in this case the side-effect is different than DB: Looks like the tests you added are creating (and not deleting) ~/.aws/credentials - and the failing test expected to raise "No Credentials" error. If my hypothesis is right, the fix is two fold:

On one hand the test did not have the proper "Setup" clearing the state before the test to one that was expected from the test, but on the other hand - it would be difficult to foresee when the test was being written that someone else will create and leave such credential file. Hard to blame anyone in particular, other than it's totally not feasible to run thousands of tests each in completely isolated and pristine environment without side-effects like that. This would be another side-effect example that you might stumble upon and it is something that will happen occasionally unfortunately. Can't imagine how to prevent those kind of problems. |

But they do, that's what I was saying. There are two tests in hooks/s3.py that fail when I run them locally even when I run them using breeze from main without my code. I can work on figuring out why and fixing them, but if they fail in breeze in main then I don't see how the code in this PR is the issue. That should be a separate PR to fix them. FAILED tests/providers/amazon/aws/hooks/test_s3.py::TestAwsS3HookNoMock::test_check_for_bucket_raises_error_with_invalid_conn_id (Note, this one is no longer failing in the CI and I have not caught up on my messages from yesterday or this morning, this may already be fixed)

Yup, that looks right. I propose that if that test expects to not find one, then it should mock the check to see if there is one and return false. Does that sound like the fight fix to that one to you two? If we like that solution I can make the fix but I think it belongs in a different PR. FAILED tests/providers/amazon/aws/hooks/test_s3.py::TestAwsS3Hook::test_create_bucket_no_region_regional_endpoint When run directly (not in breeze) locally it returns an exception that the bucvket already exists, when run in breeze it fails witht he message |

|

Hey @ferruzzi - please rebase now. #28129 that @Taragolis implemented added extra protection for your local env files and moved the test elsewhere. the problem was real, but you likely have not seen it locally, becuse you have AWS environment variables in your breeze configuration |

Pushing now. |

a5f4de0 to

98ceb88

Compare

|

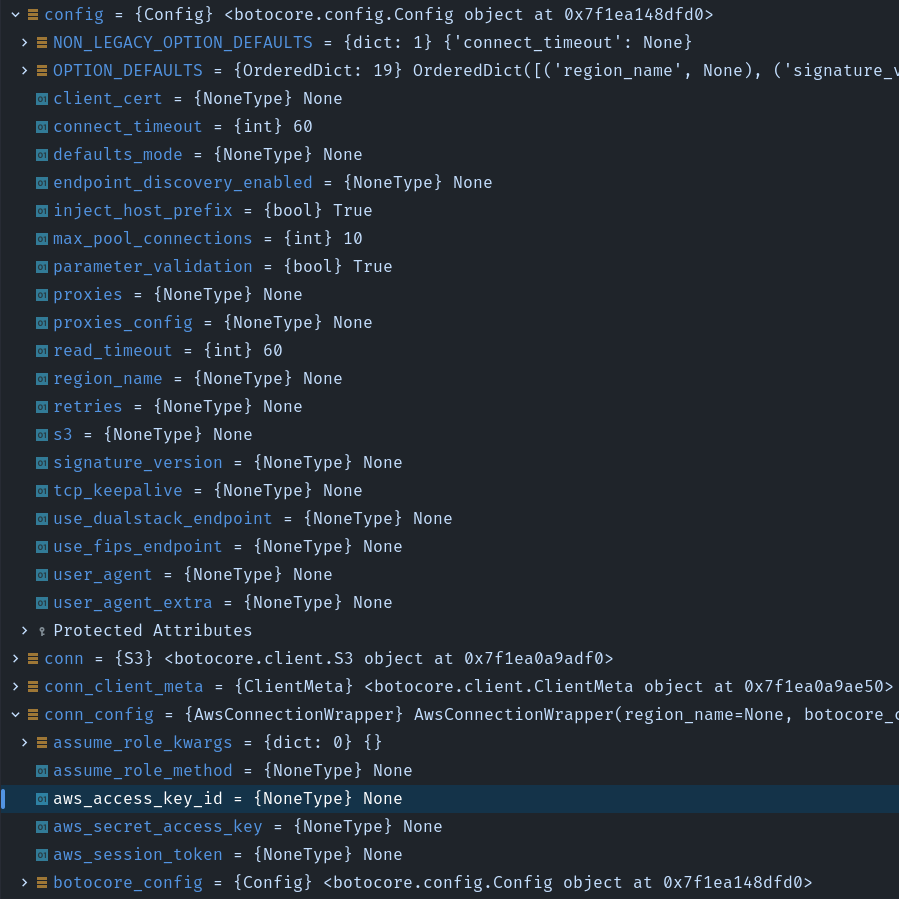

Looks like that commit from @Taragolis fixed one of them. Looking at the other one, it's throwing When the test calls State of the config object at the API call: With all those "None" values, I rather think it looks like a mocking issue to me, and we need to set some return value that's been missed, but maybe I'm wrong. [EDIT] I dropped some debugger traces in there and the config merge looks like it is working as expected; pretty sure it isn't that. I'll have to look more tomorrow. [EDIT 2] Figured out the difference, hope to have a fix up shortly. When run in |

|

Sorry for the holdup, this should pass now. All tests are passing on my side now in Breeze. Here's what I came up with: on L414 here I was defaulting to an empty Config() thinking that would be 'falsy' here in the Connection wrapper, but it isn't 'falsy' so it overrides the user-provided values if the user creates a Connection. So I moved the default to the BaseAws config property and removed the option for that to be None. |

| except Exception: | ||

| # Under no condition should an error here ever cause an issue for the user. | ||

| return "00000000-0000-5000-0000-000000000000" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Small nitpick

- Seems like there is only KeyError might happen here

- Some fixes in nil-uuid

| except Exception: | |

| # Under no condition should an error here ever cause an issue for the user. | |

| return "00000000-0000-5000-0000-000000000000" | |

| except KeyError: | |

| # Under no condition should an error here ever cause an issue for the user. | |

| return "00000000-0000-0000-0000-000000000000" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The 5 is a fixed bit in UUID to show the version and the unit tests check for it to confirm the format so that either has to stay or the unit tests need to be changed. I'd like to keep it, but if you have a strong opinion here or a reason it should be changed I'll update the unit tests too.

For the exception, yeah you are right but I did it for consistency since the others all go by the policy that "nothing should possibly bubble up". I can change it to IndexError if you still want, I just figured I would explain my reason.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nil UUID is special form of UUID, it is not include any specific data, see: https://datatracker.ietf.org/doc/html/rfc4122.html#section-4-1-7

So it is good point to check if any exception happen then all bits are 0

from uuid import UUID

nil = "00000000-0000-0000-0000-000000000000"

assert UUID(int=0) == UUID(nil)There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

And actually I thought we do not need any regex. We could just pass value to UUID object and compare with version

from uuid import UUID

random = "80eb85a0-5aef-45b6-abbb-f16d62d3db42"

uuid_v5 = "bf428e1d-f221-55de-a77f-a61755a4d727"

nil = "00000000-0000-0000-0000-000000000000"

assert UUID(random).version == 4

assert UUID(uuid_v5).version == 5

assert UUID(nil).version is NoneAnd nil uuid not possible to get by use uuid5 however in theory (and infinity time) it is possible to get 00000000-0000-5000-0000-000000000000

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm just running the static checks locally and I'll push the no-regex version. I actually didn't know about the UUID().version check, that's very handy. 👍

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I didn't know that one of the bit is actually a version until you told that ¯\_(ツ)_/¯ 👍

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Alright, I made a small tweak and rerunning the tests locally, but what I ended up with is assert UUID(dag_run_key).version in {5, None}. UUID().version also verifies that it is a valid format so that will catch a poorly formed UUID or anything thast is a valid UUID but not v5 or nil

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

and then realized that without mocking the environment variable that generated the UUID, it's always returning the exception case so I've parameterized the test so it's testing both cases. Tests should be done in a sec.

|

@Taragolis and @potiuk Thank you both for your help on this one. 👍 |

Adding a couple of fields to the boto3 user agent will allow the AWS team to better understand which services and operators to focus improvements on in the future. This is similar to the user agent fields added by Databricks, Google, Yandex, and others.