-

Notifications

You must be signed in to change notification settings - Fork 4k

ARROW-15061: [C++] Add logging for kernel functions and exec plan nodes #12100

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Cool! The macro definitions look fairly useful. |

|

If you have a screenshot or any quick example of the output to share here it would also be useful, I think. |

lidavidm

left a comment

lidavidm

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks, this looks good overall. I left some small comments.

lidavidm

left a comment

lidavidm

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for doing this. Once we get this in, I'm pretty excited to see what sorts of analysis/visualization we can do.

| MARK_SPAN(target_span, st); \ | ||

| END_SPAN(target_span); \ | ||

| return st; \ | ||

| }) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This macro really makes me think we should consider just adding Span as a part of Future (and see how it impacts performance). That can be done later, though, I think we can get this in first and continue refining how we use OpenTelemetry.

There are still some comments from @lidavidm that I need to address, but I can do that tomorrow. |

|

No further comments from me - let's merge once OrderBySinkNode is fully instrumented. |

|

I want to base #11964 on top of this since the helpers here will simplify it. |

|

@westonpace @lidavidm this is now ready for a final review. |

lidavidm

left a comment

lidavidm

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for working through this!

|

(For the record, edited description to remove username pings; those get put into the commit message and then generate a lot of notification spam.) |

|

Benchmark runs are scheduled for baseline = 74deb45 and contender = 9b53235. 9b53235 is a master commit associated with this PR. Results will be available as each benchmark for each run completes. |

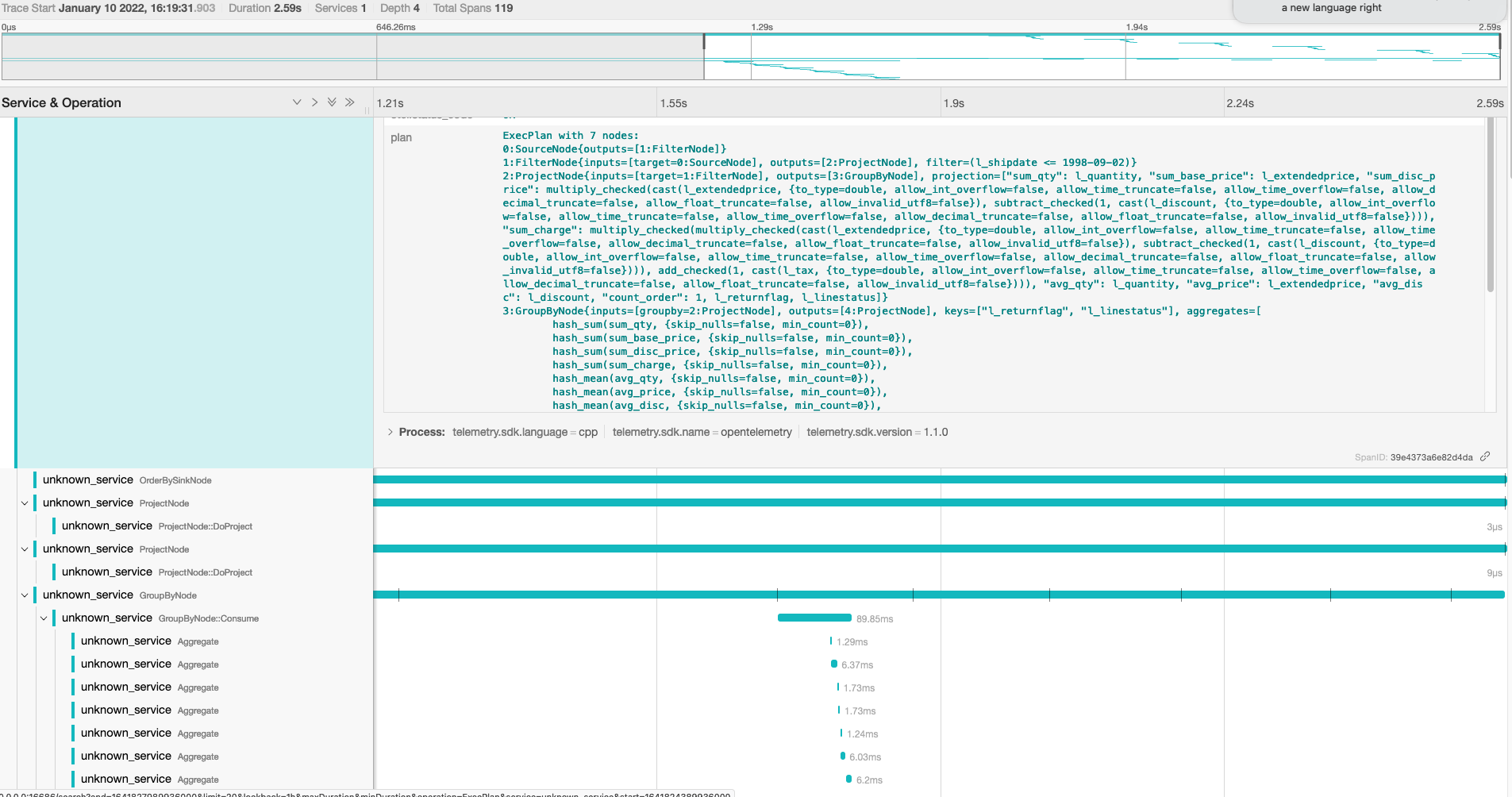

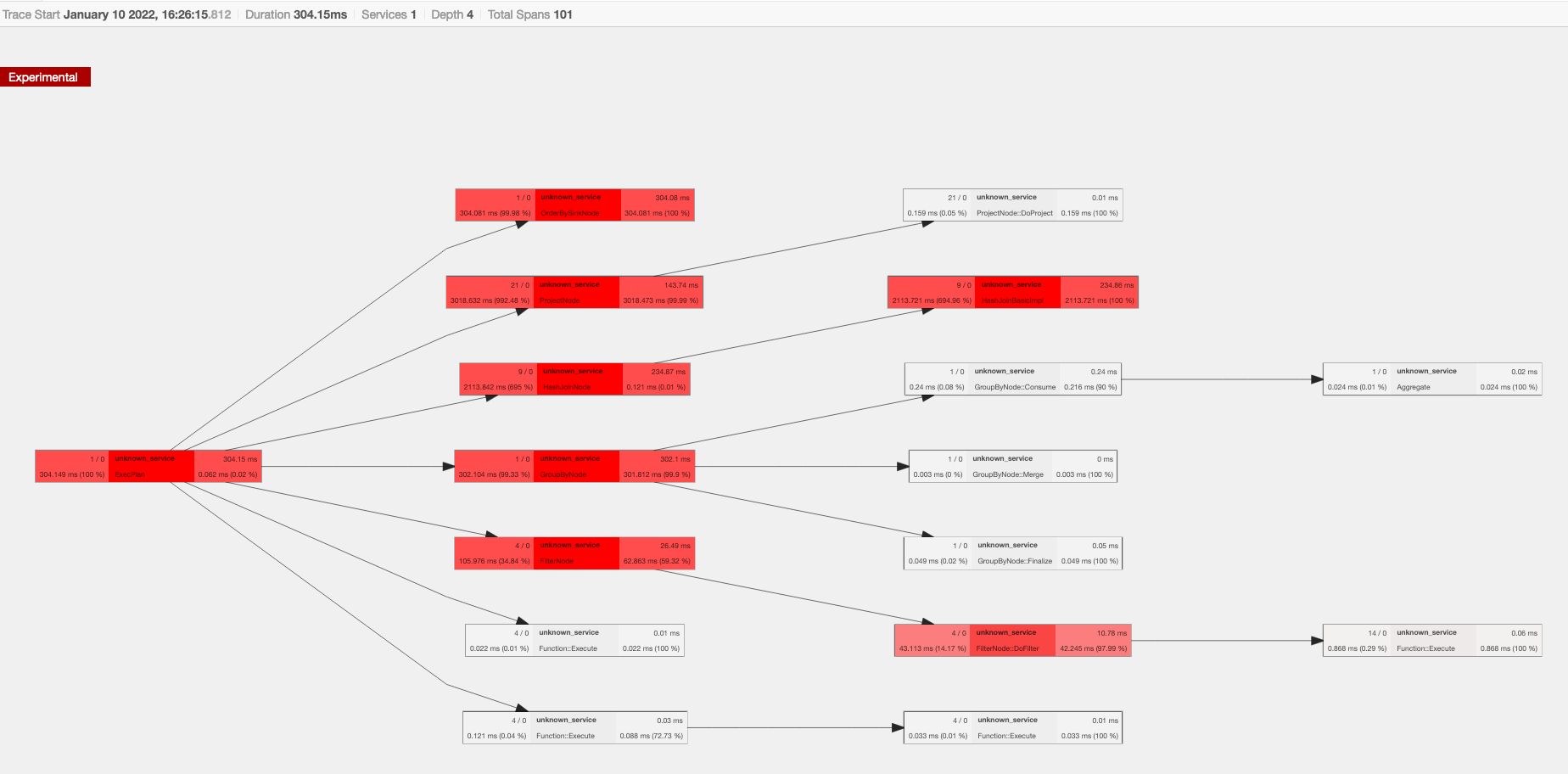

Adds spans and events for exec plan and exec nodes.

I use the following setup to debug traces:

docker-compose.yml:otel-collector-config.yaml:prometheus.yaml:Start the services and then use the instructions from #11906.