

METRON-2053: Refactor metron-enrichment to decouple Storm dependencies #1368


Testing Plan

Setup Test Environment

Verify Basics

Verify data is flowing through the system, from parsing to indexing.

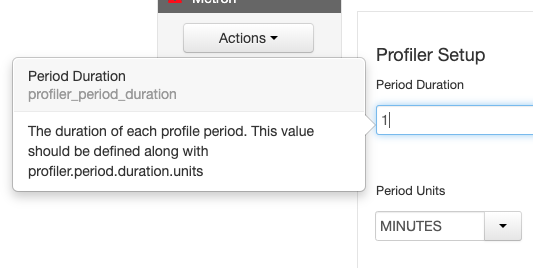

Profiler

Verify the profiler still works in Storm and the REPL. Pulled from https://github.com/apache/metron/blob/master/metron-analytics/metron-profiler-storm/README.md

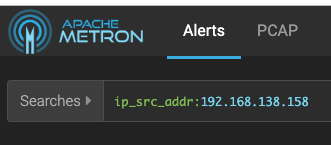

PCAP

Steps adapted from #1157 (comment).

Get PCAP data into Metron:

Run a fixed filter query

Flatfile loader

Make sure no classpath issues have broken it. Steps adapted from #432 (comment).

Preliminaries

The extractor.json will be used by flatfile_loader.sh in the next step.

Import from HDFS via MR

Streaming Enrichment


I ran through the full test script provided above and everything works as expected. Things to note:


The SLF4J logger warnings in REST predate this PR.


<p><b>Configuration</b></p>

<p>The first argument to the logger is a java.util.function.Supplier<Map<String, Object>>. This offers flexibility in being able to provide multiple configuration “suppliers” depending on your individual usage requirements. The example above, taken from org.apache.metron.enrichment.bolt.GenericEnrichmentBolt, leverages the global config to dynamically provide configuration from Zookeeper. Any updates to the global config via Zookeeper are reflected live at runtime. Currently, the PerformanceLogger supports the following options:</p>

<p>The first argument to the logger is a java.util.function.Supplier<Map<String, Object>>.


Probably don't want to change the site book.


Hm, that's odd. Must have been an IntelliJ mixup.
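As a minimal sketch of the idea in the site-book text quoted above (a java.util.function.Supplier<Map<String, Object>> whose result tracks live global-config updates), the Java below keeps the latest config in an AtomicReference and hands callers a Supplier over it. It does not use Metron classes and is not how GenericEnrichmentBolt is implemented: LiveConfigSupplierSketch, onGlobalConfigUpdate, and the example.key option are hypothetical names, and the Zookeeper listener that would call onGlobalConfigUpdate is assumed, not shown.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

/**
 * Hypothetical sketch (not Metron code): a Supplier<Map<String, Object>> backed by a
 * reference that an external watcher, such as a Zookeeper listener, swaps on each update.
 */
public class LiveConfigSupplierSketch {

  // Latest global config; replaced wholesale on every update, never mutated in place.
  private static final AtomicReference<Map<String, Object>> GLOBAL_CONFIG =
      new AtomicReference<>(Collections.<String, Object>emptyMap());

  // Consumers hold the Supplier, not a Map snapshot, so every get() sees the newest config.
  static final Supplier<Map<String, Object>> CONFIG_SUPPLIER = GLOBAL_CONFIG::get;

  // Stand-in for the callback a Zookeeper cache would drive (assumed, not shown).
  static void onGlobalConfigUpdate(Map<String, Object> newConfig) {
    GLOBAL_CONFIG.set(Collections.unmodifiableMap(new HashMap<>(newConfig)));
  }

  public static void main(String[] args) {
    Map<String, Object> first = new HashMap<>();
    first.put("example.key", 1); // hypothetical option name
    onGlobalConfigUpdate(first);
    System.out.println("after first update: " + CONFIG_SUPPLIER.get());

    Map<String, Object> second = new HashMap<>();
    second.put("example.key", 50);
    onGlobalConfigUpdate(second);
    System.out.println("after second update: " + CONFIG_SUPPLIER.get());
  }
}
```

Because callers hold the Supplier rather than a copy of the map, a config published after the logger is constructed is still picked up on the next get(), which is the live-update property the quoted text attributes to the Zookeeper-backed global config.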

<groupId>org.apache.httpcomponents</groupId>
<artifactId>httpclient</artifactId>
<version>${global_httpclient_version}</version>
<!--<scope>test</scope>-->


Commented-out bits.


Done

* {
* "parserClassName": "org.apache.metron.parsers.csv.CSVParser",
* "writerClassName": "org.apache.metron.enrichment.writer.SimpleHbaseEnrichmentWriter",
* "writerClassName": "org.apache.metron.writer.hbase.SimpleHbaseEnrichmentWriter",


I believe this would constitute a breaking change. Need to add a note on this in the Upgrading doc.


Done
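The diff above moves SimpleHbaseEnrichmentWriter from org.apache.metron.enrichment.writer to org.apache.metron.writer.hbase, so a parser config that still names the old class would no longer resolve. A minimal sketch of the kind of rewrite an Upgrading note might describe, assuming the parser config has already been parsed into a Map<String, Object>; WriterClassNameMigration and migrate are hypothetical names, not Metron APIs.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical upgrade helper (not part of Metron): rewrites the pre-METRON-2053
 * writerClassName in a parser config that has already been parsed into a Map.
 */
public class WriterClassNameMigration {

  // Old and new fully qualified class names, as they appear in the diff in this PR.
  static final String OLD_WRITER =
      "org.apache.metron.enrichment.writer.SimpleHbaseEnrichmentWriter";
  static final String NEW_WRITER =
      "org.apache.metron.writer.hbase.SimpleHbaseEnrichmentWriter";

  /** Returns a copy of the config, with writerClassName updated only if it names the old class. */
  static Map<String, Object> migrate(Map<String, Object> parserConfig) {
    Map<String, Object> updated = new HashMap<>(parserConfig); // leave the caller's map untouched
    if (OLD_WRITER.equals(updated.get("writerClassName"))) {
      updated.put("writerClassName", NEW_WRITER);
    }
    return updated;
  }

  public static void main(String[] args) {
    Map<String, Object> config = new HashMap<>();
    config.put("parserClassName", "org.apache.metron.parsers.csv.CSVParser");
    config.put("writerClassName", OLD_WRITER);
    System.out.println(migrate(config).get("writerClassName"));
  }
}
```

Configs that name any other writer, or none at all, come back unchanged.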

</exclusion>
</exclusions>
</dependency>
</dependency>-->


Not needed


Done

<type>test-jar</type>
<scope>test</scope>
</dependency>
</dependency>-->


Not needed?


Done

</resource>
<resource>
<directory>${metron_dir}/metron-platform/metron-enrichment/target/</directory>
<directory>${metron_dir}/metron-platform/metron-enrichment/metron-enrichment-common/target/</directory>


Do we need a deployable artifact for metron-enrichment-common? Wouldn't the deployable bits come from metron-enrichment-storm alone?


Took me a minute to remember why I did this. It seems like that would be correct, but then I saw that we had metron-parsers-common. I wasn't entirely sure why we needed common as a separate deployable unit, but I found that it's what we use for zk_load_configs.sh. For enrichment, I put the Zookeeper configs into metron-enrichment-common because they could be used independently of metron-enrichment-storm (say, if we were to add Spark support). So the rpm/deb files will lay down the common bits, which include the enrichment configs.

Apparently the flux files got mixed up in there, so I'm removing those references.

%package enrichment-common
Summary: Metron Enrichment Common Files
Group: Applications/Internet
Provides: enrichment-common = %{version}

%description enrichment-common
This package installs the Metron Enrichment Common files

%files enrichment-common
%defattr(-,root,root,755)
%dir %{metron_root}
%dir %{metron_home}
%dir %{metron_home}/bin
%dir %{metron_home}/config
%dir %{metron_home}/config/zookeeper
%dir %{metron_home}/config/zookeeper/enrichments
%{metron_home}/bin/latency_summarizer.sh
%{metron_home}/config/zookeeper/enrichments/bro.json
%{metron_home}/config/zookeeper/enrichments/snort.json
%{metron_home}/config/zookeeper/enrichments/websphere.json
%{metron_home}/config/zookeeper/enrichments/yaf.json
%{metron_home}/config/zookeeper/enrichments/asa.json
%{metron_home}/flux/enrichment/remote-splitjoin.yaml
%{metron_home}/flux/enrichment/remote-unified.yaml
%attr(0644,root,root) %{metron_home}/lib/metron-enrichment-common-%{full_version}-uber.jar

Whoops...

%{metron_home}/flux/enrichment/remote-splitjoin.yaml
%{metron_home}/flux/enrichment/remote-unified.yaml

# rpm -qa|grep metron
metron-data-management-0.7.1-201904050407.noarch
metron-parsing-storm-0.7.1-201904050407.noarch
metron-profiler-repl-0.7.1-201904050407.noarch
metron-pcap-0.7.1-201904050407.noarch
metron-config-0.7.1-201904050407.noarch
metron-common-0.7.1-201904050407.noarch
metron-metron-management-0.7.1-201904050407.noarch
metron-parsers-0.7.1-201904050407.noarch
metron-enrichment-0.7.1-201904050407.noarch
metron-profiler-spark-0.7.1-201904050407.noarch
metron-indexing-0.7.1-201904050407.noarch
metron-solr-0.7.1-201904050407.noarch
metron-rest-0.7.1-201904050407.noarch
metron-alerts-0.7.1-201904050407.noarch
metron-performance-0.7.1-201904050407.noarch
metron-parsers-common-0.7.1-201904050407.noarch
metron-profiler-storm-0.7.1-201904050407.noarch
metron-elasticsearch-0.7.1-201904050407.noarch
metron-maas-service-0.7.1-201904050407.noarch

# grep parsers-common bin/*
bin/zk_load_configs.sh:export JAR=metron-parsers-common-$METRON_VERSION-uber.jar

…hrowing java.nio.channels.ClosedByInterruptException

…f Caffeine. Excluding.


@nickwallen I believe I've addressed all of your comments so far. I also finished refactoring the enrichment documentation.


+1 Looks good. I ran through all the test scenarios and did not encounter any problems.

Contributor Comments

https://issues.apache.org/jira/browse/METRON-2053

The rationale for this work is to decouple the platform from Storm dependencies and enable us to integrate with other streaming frameworks. Of particular interest is Spark, but I'll save the meat of that conversation for a proper DISCUSS thread.

Pull Request Checklist

For all changes:

For code changes:

Have you included steps to reproduce the behavior or problem that is being changed or addressed?

Have you included steps or a guide to how the change may be verified and tested manually?

Have you ensured that the full suite of tests and checks has been executed in the root metron folder via:

Have you written or updated unit tests and or integration tests to verify your changes?

n/a If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under ASF 2.0?

Have you verified the basic functionality of the build by building and running locally with Vagrant full-dev environment or the equivalent?

For documentation related changes:

Have you ensured that the format looks appropriate for the output in which it is rendered by building and verifying the site-book? If not, then run the following commands and then verify changes via site-book/target/site/index.html.

Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

It is also recommended that travis-ci is set up for your personal repository such that your branches are built there before submitting a pull request.