-

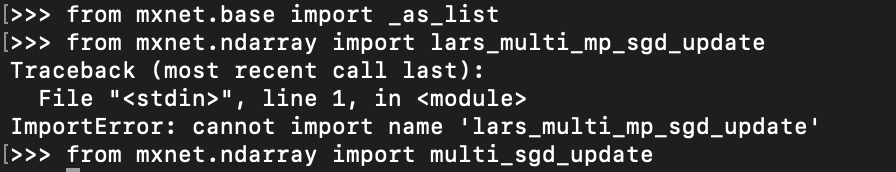

Description:

In its submission to the MLPerf Training v0.7 benchmark, MXNet used an optimizer kernel called "lars_multi_mp_sgd_update" or "lars_multi_mp_sgd_mom_update". MXNet got great results with this optimizer in MLPerf v0.7, and I would like to try it in my project.

Error Message:

What have you tried to solve it?

I tried the master branches of v1.6 and v1.7.


Replies: 2 comments


Welcome to Apache MXNet (incubating)! We are on a mission to democratize AI, and we are glad that you are contributing to it by opening this issue.



You can use the `LARS` optimizer. It uses the same kernels as the MLPerf submission, but they are named slightly differently (`preloaded_multi_mp_sgd_update`). The NVIDIA NGC MXNet container, which was used for that submission, had the LARS optimizer before upstream MXNet did, and the names were changed as part of the upstreaming process.
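For intuition about what a LARS-style optimizer computes for each layer, here is a minimal pure-Python sketch of the layer-wise learning-rate scaling. It is an illustration only, not the MXNet kernels or the MXNet API: the function names `lars_local_lr` and `sgd_step`, the default values, and the toy numbers are all assumptions made for this example, and momentum and mixed precision are left out.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(v * v for v in values))


def lars_local_lr(weights, grads, lr, eta=0.001, wd=0.0, eps=0.0):
    """Scale the global learning rate by a per-layer trust ratio.

    Hypothetical helper: the ratio eta * ||w|| / (||g|| + wd * ||w|| + eps)
    is the usual LARS form; falling back to 1.0 when either norm is zero
    is an assumption of this sketch.
    """
    w_norm = l2_norm(weights)
    g_norm = l2_norm(grads)
    if w_norm > 0.0 and g_norm > 0.0:
        trust_ratio = eta * w_norm / (g_norm + wd * w_norm + eps)
    else:
        trust_ratio = 1.0
    return lr * trust_ratio


def sgd_step(weights, grads, lr, wd=0.0):
    """Plain SGD update with weight decay (no momentum in this sketch)."""
    return [w - lr * (g + wd * w) for w, g in zip(weights, grads)]


# Toy layer: weight norm is 5, gradient norm is 1.
weights = [3.0, 4.0]
grads = [0.6, 0.8]

layer_lr = lars_local_lr(weights, grads, lr=0.1, eta=0.001)
new_weights = sgd_step(weights, grads, layer_lr)
print(layer_lr, new_weights)
```

The point of the scaling is that a layer with large weights and small gradients takes a proportionally larger step, which is what makes very large batch sizes practical. The fused multi-tensor kernels mentioned above are optimized GPU kernels that apply this kind of update to many layers at once.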