
[poc][v1.x] ONNX Add an option to un-interleave BERT #20249

base: v1.x


Hey @Zha0q1, thanks for submitting the PR.

CI supported jobs: [clang, miscellaneous, unix-gpu, edge, centos-cpu, unix-cpu, centos-gpu, windows-cpu, website, windows-gpu, sanity] Note:

```python
# coding: utf-8
"""ONNX export op translation"""

from . import _gluonnlp_bert
```


Does this logic specifically benefit TRT? If so, should we consider naming it something like `_gluonnlp_bert_trt`?

It probably makes more sense to have gluonnlp export a BERT graph that doesn't involve interleaving than to do it in mxnet. The framework shouldn't know about, or rely on, the implementation of its ecosystem packages.
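One way gluonnlp could export a graph without interleaving is to split the fused QKV projection weight into separate Q, K and V weights before export. The following NumPy sketch is a hypothetical illustration, not code from this PR or from gluonnlp; `split_interleaved_qkv_weight` is an invented name, and it assumes the fused weight uses the Dense/FullyConnected `(out_units, in_units)` layout with output rows ordered per head as `[q, k, v]`, the layout the interleaved self-attention operators expect.

```python
import numpy as np


def split_interleaved_qkv_weight(w_qkv, num_heads):
    """Split a fused QKV projection weight into separate Q, K and V weights.

    Assumption: w_qkv has the (out_units, in_units) layout of a Dense /
    FullyConnected weight, and its 3 * hidden output rows are ordered as
    (num_heads, 3, head_dim), i.e. per-head [q, k, v] interleaving.
    """
    out_units, in_units = w_qkv.shape
    hidden = out_units // 3
    head_dim = hidden // num_heads
    w = w_qkv.reshape(num_heads, 3, head_dim, in_units)
    # Select slot 0/1/2 for every head, then merge (num_heads, head_dim)
    # back into a head-major hidden dimension.
    w_q = w[:, 0].reshape(hidden, in_units)
    w_k = w[:, 1].reshape(hidden, in_units)
    w_v = w[:, 2].reshape(hidden, in_units)
    return w_q, w_k, w_v


# Check: the separate projections reproduce the slices of the fused output.
rng = np.random.default_rng(0)
num_heads, head_dim, in_units, tokens = 2, 4, 8, 5
hidden = num_heads * head_dim
w_qkv = rng.standard_normal((3 * hidden, in_units))
x = rng.standard_normal((tokens, in_units))
w_q, w_k, w_v = split_interleaved_qkv_weight(w_qkv, num_heads)
fused = (x @ w_qkv.T).reshape(tokens, num_heads, 3, head_dim)
for slot, w_sep in enumerate((w_q, w_k, w_v)):
    assert np.allclose(x @ w_sep.T, fused[:, :, slot, :].reshape(tokens, hidden))
```

With the weights split this way, the exported graph would contain three plain MatMuls instead of one interleaved projection.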

```
model_specific_logics : str
    Specifies if model-specific conversion logic should be used. Refer to ./_op_translations/
cheat_sheet : dict of str to str
    This is a dict that stores hyperparameter values or additional info about the model that
    would be used in model-specific conversion functions.
```
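To make the `cheat_sheet` contract concrete, here is a hypothetical sketch of how a model-specific converter might read it, falling back to the BERT-base values (hidden 768, 12 heads) that the PR description treats as the default. The key names `"hidden"` and `"num_heads"` and the helper `resolve_bert_config` are assumptions for illustration, not the PR's actual keys or code.

```python
# Hypothetical sketch: the keys "hidden" and "num_heads" are assumed names,
# not confirmed keys of the PR's cheat_sheet.
BERT_BASE_DEFAULTS = {"hidden": 768, "num_heads": 12}


def resolve_bert_config(cheat_sheet=None):
    """Return (hidden, num_heads, head_dim), falling back to BERT-base values."""
    config = dict(BERT_BASE_DEFAULTS)
    if cheat_sheet:
        config.update(cheat_sheet)
    # The docstring types cheat_sheet as dict of str to str, so coerce to int.
    hidden = int(config["hidden"])
    num_heads = int(config["num_heads"])
    if hidden % num_heads != 0:
        raise ValueError(f"hidden={hidden} is not divisible by num_heads={num_heads}")
    return hidden, num_heads, hidden // num_heads


print(resolve_bert_config())                                       # (768, 12, 64)
print(resolve_bert_config({"hidden": "1024", "num_heads": "16"}))  # (1024, 16, 64)
```

Validating divisibility up front gives a clear error instead of a malformed reshape deep inside the exported graph.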


These options are semantically unclear and hard to maintain.

I agree. Alternatively, we might be able to make mx2onnx support loading custom conversion functions dynamically and move these functions into gluonnlp. If even that is too hacky, this PR can serve as a PoC to evaluate the performance benefit of un-interleaving the matrix multiplication with TRT 8.0.
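The dynamic-loading alternative could look roughly like a plugin registry: the ecosystem package registers converters under a logic name, and the exporter only looks them up. This is a hypothetical pure-Python sketch of the pattern, not an existing mx2onnx API; `register_model_logic`, `get_converter` and the converter functions are invented names, and the operator name `_contrib_interleaved_matmul_selfatt_qk` is assumed.

```python
from typing import Callable, Dict, Optional

# Hypothetical plugin registry; this is NOT an existing mx2onnx API.
# Maps a model-specific logic name to {operator name: converter function}.
_CUSTOM_CONVERTERS: Dict[str, Dict[str, Callable]] = {}


def register_model_logic(logic_name: str, op_name: str):
    """Decorator an ecosystem package could use to register a converter."""
    def decorator(fn: Callable) -> Callable:
        _CUSTOM_CONVERTERS.setdefault(logic_name, {})[op_name] = fn
        return fn
    return decorator


def get_converter(op_name: str, default: Callable,
                  model_specific_logics: Optional[str] = None) -> Callable:
    """Prefer a registered override, else fall back to the built-in converter."""
    overrides = _CUSTOM_CONVERTERS.get(model_specific_logics or "", {})
    return overrides.get(op_name, default)


# What a package such as gluonnlp might register (operator name assumed):
@register_model_logic("gluonnlp_bert", "_contrib_interleaved_matmul_selfatt_qk")
def convert_qk_uninterleaved(node_name: str) -> str:
    return f"uninterleaved({node_name})"


def convert_qk_default(node_name: str) -> str:
    return f"interleaved({node_name})"


op = "_contrib_interleaved_matmul_selfatt_qk"
print(get_converter(op, convert_qk_default, "gluonnlp_bert")("qk0"))  # uninterleaved(qk0)
print(get_converter(op, convert_qk_default)("qk0"))                   # interleaved(qk0)
```

The design keeps the framework ignorant of BERT: mxnet owns only the lookup, while the model knowledge lives in the package that registers it.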

@Zha0q1 What is the difficulty in doing this transformation after the export? You should be able to modify the ONNX graph itself, right?

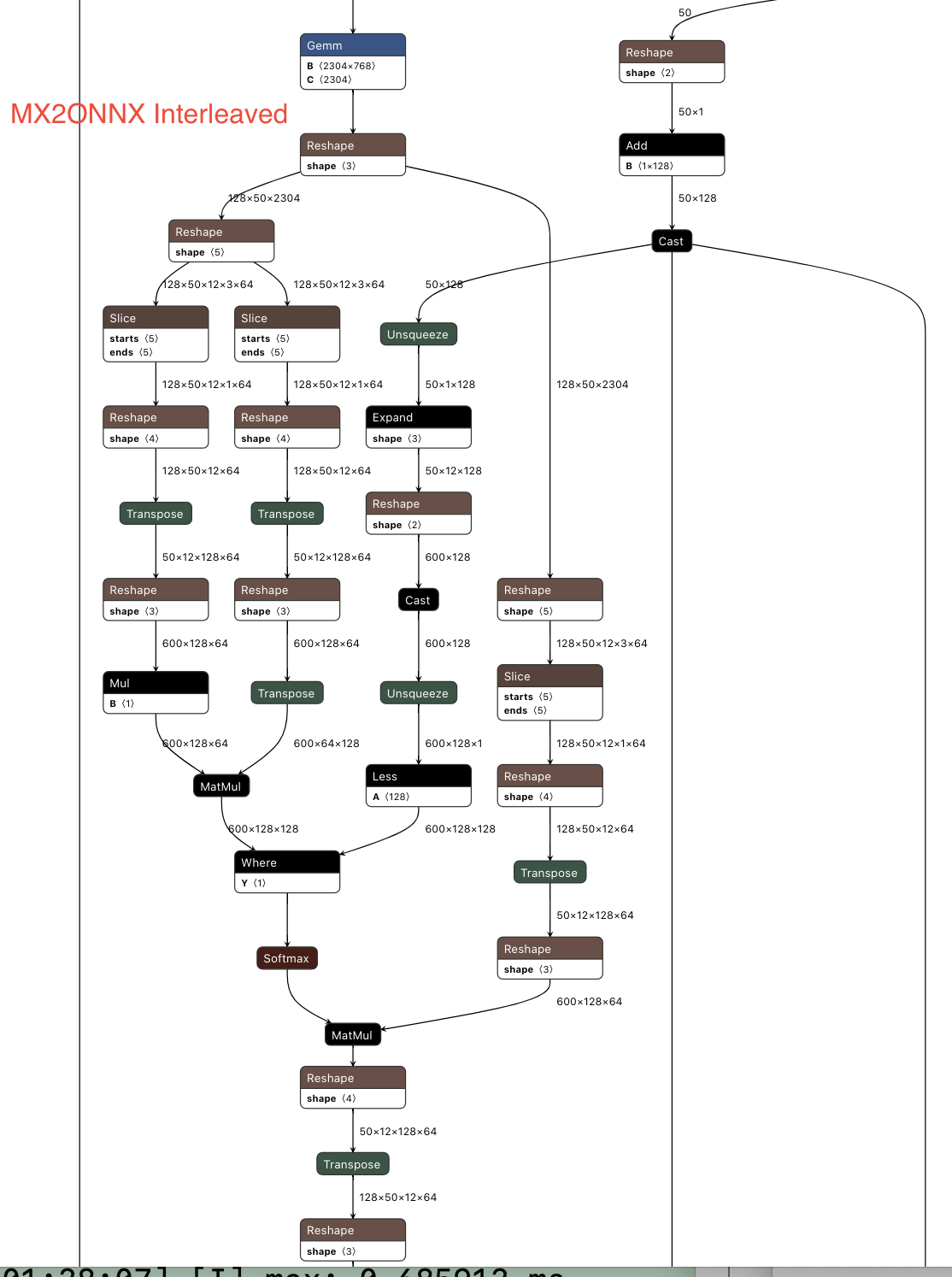

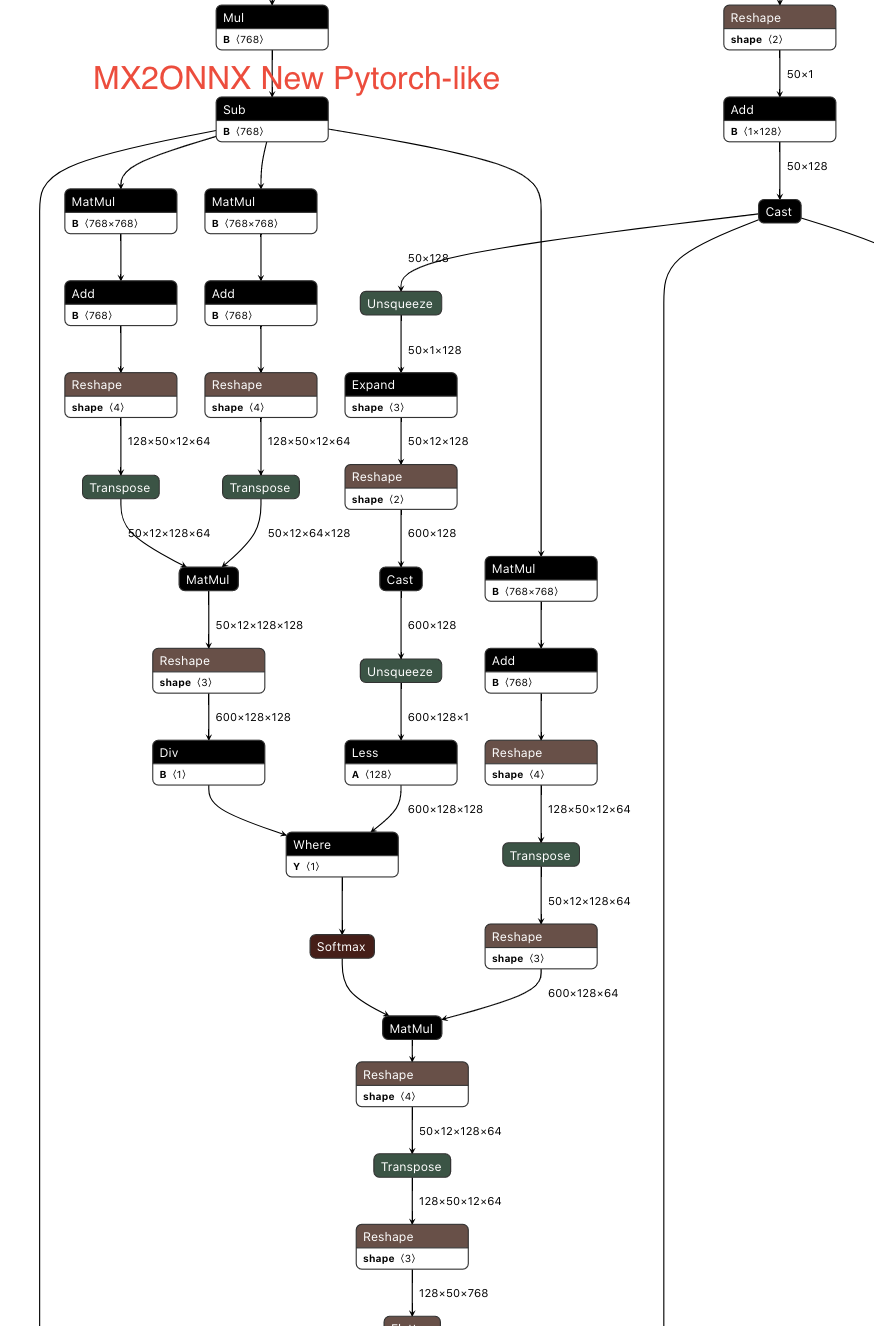

Add model-specific (BERT) logic to un-interleave the self-attention MatMul. This can potentially speed up inference with TRT 8.0, whose compiler can recognize the new pattern.
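A NumPy sketch of what un-interleaving means for the QK score step. The interleaved reference follows the semantics documented for MXNet's `interleaved_matmul_selfatt_qk` operator as we understand them (per-head `[q, k, v]` layout, queries scaled by 1/sqrt(head_dim)); the un-interleaved version computes the same scores from separate Q and K tensors with plain reshapes, transposes and a batched matmul. Function names are hypothetical, and this is not the PR's converter code.

```python
import numpy as np


def interleaved_qk(qkv, num_heads):
    """Scores from an interleaved QKV tensor.

    qkv: (seq_len, batch, num_heads * 3 * head_dim), per-head [q, k, v] layout.
    Returns scaled scores of shape (batch * num_heads, seq_len, seq_len).
    """
    seq_len, batch, _ = qkv.shape
    tmp = qkv.reshape(seq_len, batch, num_heads, 3, -1)
    head_dim = tmp.shape[-1]
    q = tmp[:, :, :, 0, :].transpose(1, 2, 0, 3).reshape(-1, seq_len, head_dim)
    k = tmp[:, :, :, 1, :].transpose(1, 2, 0, 3).reshape(-1, seq_len, head_dim)
    return (q / np.sqrt(head_dim)) @ k.transpose(0, 2, 1)


def uninterleaved_qk(q, k, num_heads):
    """Same scores from separate Q and K tensors of shape (seq_len, batch, hidden)."""
    seq_len, batch, hidden = q.shape
    head_dim = hidden // num_heads

    def to_heads(t):
        # (seq_len, batch, hidden) -> (batch * num_heads, seq_len, head_dim)
        t = t.reshape(seq_len, batch, num_heads, head_dim).transpose(1, 2, 0, 3)
        return t.reshape(-1, seq_len, head_dim)

    return (to_heads(q) / np.sqrt(head_dim)) @ to_heads(k).transpose(0, 2, 1)


rng = np.random.default_rng(0)
seq_len, batch, num_heads, head_dim = 6, 2, 3, 4
hidden = num_heads * head_dim
qkv = rng.standard_normal((seq_len, batch, 3 * hidden))
split = qkv.reshape(seq_len, batch, num_heads, 3, head_dim)
q = split[:, :, :, 0, :].reshape(seq_len, batch, hidden)
k = split[:, :, :, 1, :].reshape(seq_len, batch, hidden)
assert np.allclose(interleaved_qk(qkv, num_heads), uninterleaved_qk(q, k, num_heads))
```

Both paths produce identical scores; the second one is expressed only in standard ops, which is the kind of pattern a compiler such as TRT 8.0 can match.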

Default usage: `model_specific_logics='gluonnlp_bert'`

When the model is not BERT-base (meaning hidden != 768 or num_heads != 12), e.g. BERT-large, the usage is:

This option to un-interleave self-attention would also work with BERT variants such as `roberta`, `distilbert`, and `ernie`.

The first screenshot is the old graph; the second is the new graph. Note that `onnx-sim` is required.

@TristonC @MoisesHer @waytrue17 @josephevans