
[fix][broker][functions-worker] Ensure prometheus metrics are grouped by type (#8407, #13865) #17618


…metheusMetricsTest.java Co-authored-by: Dave Maughan <davidamaughan@gmail.com>

/pulsarbot run-failure-checks

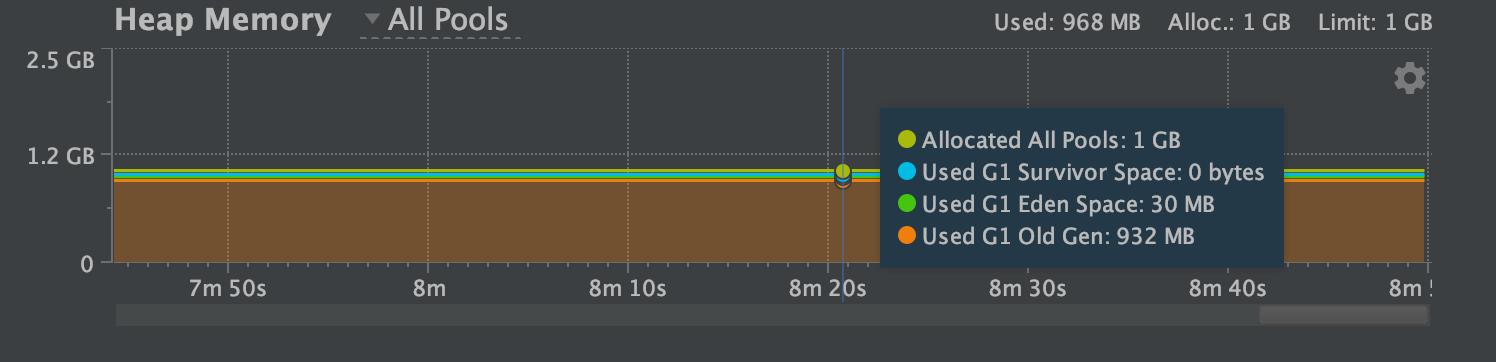

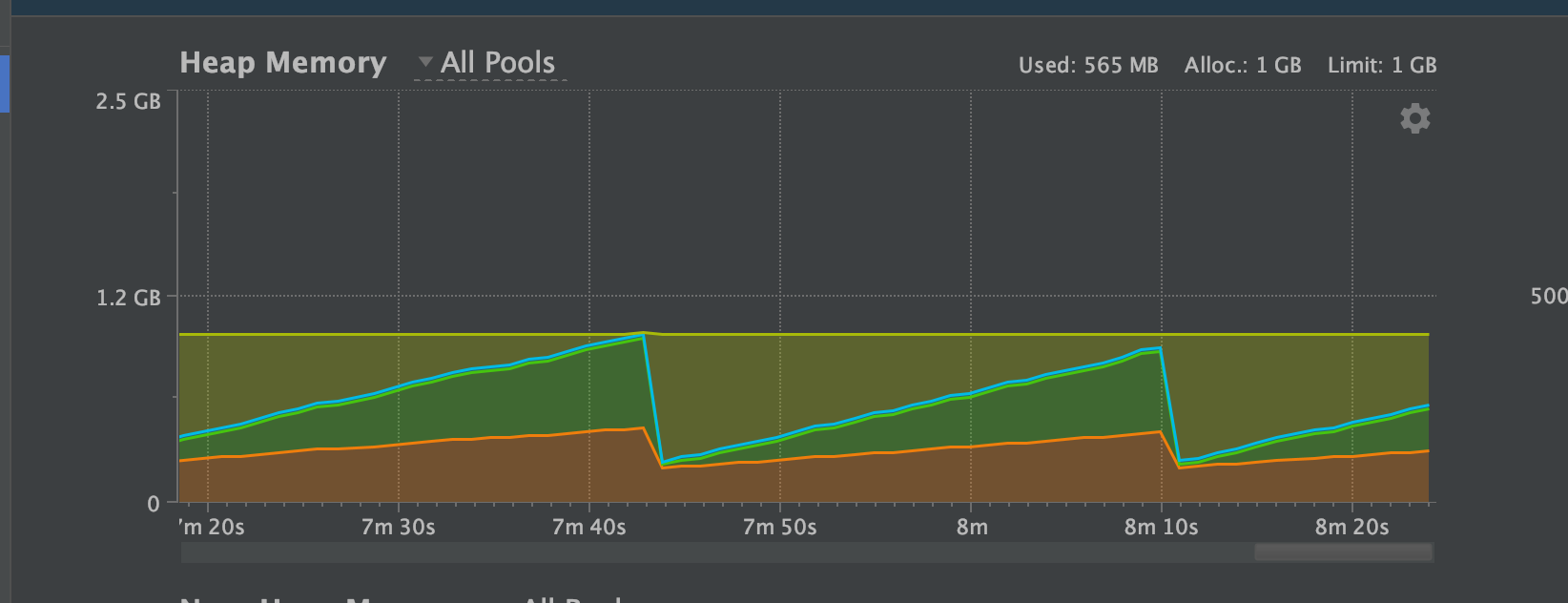

@marksilcox Sorry, I have to revert this PR since it introduced a memory leak that was detected by a long-running continuous verification test. The reproduction steps:

    for i in {1..1000}
    do
      curl -L localhost:8080/metrics/
    done

After reverting this PR, the memory leak is gone. I'm not sure what the root cause is yet. Since many people build on the Pulsar release branches, we'd better revert first, then fix the memory issue and open a new PR. @marksilcox If you need help with the memory leak, I believe @tjiuming or @asafm can provide some insight here.


I can't reproduce the issue on the master branch with PR (#15558); it looks like the memory leak only happens on branch-2.9. It would still be good to have someone double-check.


@codelipenghui The implementations in 2.9 and master are indeed different, which is why the memory leak was introduced only in 2.9. I checked it.

Fixes #8407

Fixes #13865

Motivation

The broker's Prometheus metrics are currently not grouped by metric type, which causes issues in systems that read these metrics (e.g. DataDog).

The Prometheus docs state: "All lines for a given metric must be provided as one single group" - https://github.com/prometheus/docs/blob/master/content/docs/instrumenting/exposition_formats.md#grouping-and-sorting

Modifications

Updated the namespace and topic Prometheus metric generators to group the metrics under the appropriate TYPE header.

Updated the function worker stats to include TYPE headers.
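To illustrate the grouping idea described above, here is a minimal sketch. It is not the code from this PR: `MetricGrouper`, `Sample`, and `render` are hypothetical names, and the metric names in the usage note are only examples. It assumes samples arrive interleaved (for instance, topic by topic) and buffers them per metric name so that each metric is written as one contiguous group under a single `# TYPE` line.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper for illustration; not a class from the Pulsar code base. */
final class MetricGrouper {

    /** One sample: metric name, Prometheus type, raw label text, and value. */
    static final class Sample {
        final String name;
        final String type;
        final String labels;
        final double value;

        Sample(String name, String type, String labels, double value) {
            this.name = name;
            this.type = type;
            this.labels = labels;
            this.value = value;
        }
    }

    /** Renders samples so that every metric name forms one contiguous group. */
    static String render(List<Sample> samples) {
        // LinkedHashMap keeps metric names in first-seen order, so output is stable.
        Map<String, List<Sample>> byName = new LinkedHashMap<>();
        for (Sample s : samples) {
            byName.computeIfAbsent(s.name, k -> new ArrayList<>()).add(s);
        }

        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, List<Sample>> group : byName.entrySet()) {
            // One TYPE header per metric, taken from the first sample of the group.
            out.append("# TYPE ").append(group.getKey()).append(' ')
               .append(group.getValue().get(0).type).append('\n');
            for (Sample s : group.getValue()) {
                out.append(s.name).append('{').append(s.labels).append("} ")
                   .append(s.value).append('\n');
            }
        }
        return out.toString();
    }
}
```

Given samples for `pulsar_rate_in` (topic a), `pulsar_rate_out` (topic a), and `pulsar_rate_in` (topic b), in that order, `render` emits both `pulsar_rate_in` lines together under one header, followed by the `pulsar_rate_out` group. Buffering everything before writing trades memory for simplicity; a real generator would likely need to bound or reuse those buffers.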

Verifying this change

This change added tests and can be verified as follows:

Added unit test to verify all metrics are grouped under correct type header
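A check of this kind could look roughly like the sketch below. It is not the test added in this PR: `GroupingCheck` and `firstSplitMetric` are hypothetical names, and the parsing is deliberately simplified (it treats the text before `{` or the first space as the metric name and ignores histogram and summary suffixes such as `_bucket` or `_sum`).

```java
import java.util.HashSet;
import java.util.Optional;
import java.util.Set;

/** Hypothetical checker for illustration; not a class from the Pulsar code base. */
final class GroupingCheck {

    /** Returns the first metric whose sample lines are not contiguous, if any. */
    static Optional<String> firstSplitMetric(String exposition) {
        Set<String> finished = new HashSet<>(); // metrics whose group has already ended
        String current = null;                  // metric of the group being read

        for (String line : exposition.split("\n")) {
            // Skip blank lines and comment lines such as "# TYPE ..." or "# HELP ...".
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            String name = metricName(line);
            if (!name.equals(current)) {
                if (current != null) {
                    finished.add(current);
                }
                // Seeing a finished metric again means its lines are split.
                if (finished.contains(name)) {
                    return Optional.of(name);
                }
                current = name;
            }
        }
        return Optional.empty();
    }

    /** Simplified parsing: the name ends at '{' or at the first space. */
    private static String metricName(String line) {
        int end = 0;
        while (end < line.length() && line.charAt(end) != '{' && line.charAt(end) != ' ') {
            end++;
        }
        return line.substring(0, end);
    }
}
```

A unit test could fetch the broker's metrics output as a string and assert that `firstSplitMetric` returns an empty `Optional`.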

Does this pull request potentially affect one of the following parts:

Documentation

Need to update docs?

doc-not-needed

Changes to match Prometheus spec