[SPARK-21574][SQL] Point out user to set hive config before SparkSession is initialized #18769

Test build #80042 has finished for PR 18769 at commit

Test build #80050 has finished for PR 18769 at commit

retest this please

Test build #80051 has finished for PR 18769 at commit

Please describe the issue being fixed in the PR description, rather than just the code to reproduce it.

      conf.get(key, defaultValue)
    }

    override def setConf(key: String, value: String): Unit = {

Add clientLoader.synchronized?
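To illustrate what this suggestion is getting at, here is a minimal standalone sketch of a getter and setter that share one lock. `SketchHiveClient` and its `lock` field are hypothetical stand-ins (the `lock` object plays the role of `clientLoader`); this is not Spark's actual client implementation.

```scala
import scala.collection.mutable

// Hypothetical stand-in for a Hive client; not Spark's actual implementation.
final class SketchHiveClient {
  // Plays the role of the shared lock object (clientLoader in the review comment).
  private val lock = new Object
  private val conf = mutable.Map.empty[String, String]

  /** Returns the configuration for the given key, or the default if unset. */
  def getConf(key: String, defaultValue: String): String = lock.synchronized {
    conf.getOrElse(key, defaultValue)
  }

  /** Sets the given configuration property under the same lock used by reads. */
  def setConf(key: String, value: String): Unit = lock.synchronized {
    conf(key) = value
  }
}
```

Taking the same lock in both methods means a read cannot observe the map while a write is in progress.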

    /** Returns the configuration for the given key in the current session. */
    def getConf(key: String, defaultValue: String): String

    /** Set the given configuration property. */

nit: ... in the current session.

    val sourceTable = "sourceTable"
    val targetTable = "targetTable"
    (0 until cnt).map(i => (i, i)).toDF("c1", "c2").createOrReplaceTempView(sourceTable)
    sql(s"create table $targetTable(c1 int) PARTITIONED BY(c2 int)")

nit: Usually we use upper case for SQL keywords like SELECT, CREATE TABLE, etc.

@viirya Spark does not support that. See: #17223 (comment)

Yep. The workaround has been the solution for this.

Test build #80114 has finished for PR 18769 at commit

From the screenshot, one cannot tell whether the setting works. Please explicitly log that the setting doesn't work.

Test build #80155 has finished for PR 18769 at commit

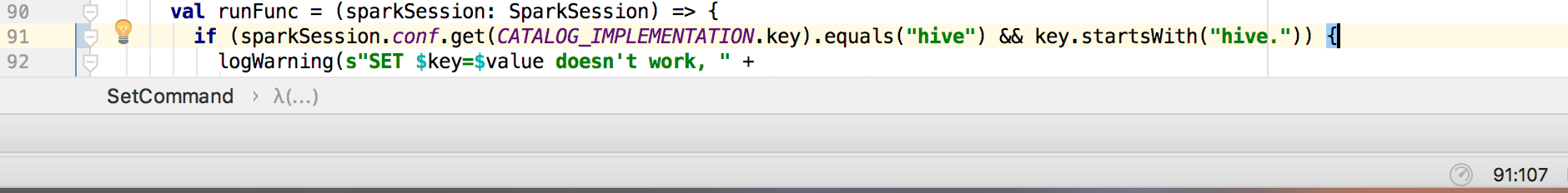

    case Some((key, Some(value))) =>
      val runFunc = (sparkSession: SparkSession) => {
        if (sparkSession.conf.get(CATALOG_IMPLEMENTATION.key).equals("hive")
          && key.startsWith("hive.")) {

Nit: Could you move this && to the end of line 91?

    if (sparkSession.conf.get(CATALOG_IMPLEMENTATION.key).equals("hive") &&
        key.startsWith("hive.")) {

          logWarning(s"SET $key=$value doesn't work, " +
            s"because Spark doesn't support set hive config dynamically. " +
            s"Please set hive config through " +
            s"--conf spark.hadoop.$key=$value before SparkSession is initialized.")

How about?

'SET $key=$value' might not work, since Spark doesn't support changing the Hive config dynamically. Please pass the Hive-specific config by adding the prefix spark.hadoop (e.g., spark.hadoop.$key) when starting a Spark application. For details, see the link: https://spark.apache.org/docs/latest/configuration.html#dynamically-loading-spark-properties.

@wangyum Could you help us submit a PR to fix the doc generation in https://spark.apache.org/docs/latest/configuration.html? There are many annoying syntax issues.

OK, I will try.

            s"Please set hive config through " +
            s"--conf spark.hadoop.$key=$value before SparkSession is initialized.")
        if (sparkSession.conf.get(CATALOG_IMPLEMENTATION.key).equals("hive") &&
          key.startsWith("hive.")) {

Nit: could you add two more spaces before key?

            s"the Hive config dynamically. Please pass the Hive-specific config by adding the " +
            s"prefix spark.hadoop (e.g.,spark.hadoop.$key) when starting a Spark application. " +
            s"For details, see the link: https://spark.apache.org/docs/latest/configuration.html#" +
            s"dynamically-loading-spark-properties.")

Except for lines 93 and 95, the other lines do not need the s interpolator prefix.
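As a small illustration of this point, the sketch below keeps the `s` prefix only on string literals that contain `$` placeholders. `InterpolatorSketch` and its shortened message are hypothetical and are not the PR's code.

```scala
object InterpolatorSketch {
  // Only literals that contain $key or $value need the s interpolator.
  def message(key: String, value: String): String =
    s"'SET $key=$value' might not work. " +       // needs s: has placeholders
      "Pass the config with the prefix " +        // plain literal: no placeholders
      s"spark.hadoop (e.g., spark.hadoop.$key)."  // needs s: has a placeholder

  // Without the s prefix, the dollar sign and name are kept as literal text.
  val notInterpolated: String = "$key"
}
```

Dropping the unneeded prefix does not change the resulting string; it only makes clear which fragments actually substitute values.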

          key.startsWith("hive.")) {
          logWarning(s"'SET $key=$value' might not work, since Spark doesn't support changing " +
            s"the Hive config dynamically. Please pass the Hive-specific config by adding the " +
            s"prefix spark.hadoop (e.g.,spark.hadoop.$key) when starting a Spark application. " +

Please add a space after e.g.,
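The check and message discussed in this thread can be sketched as a standalone function with the review nits applied. This is an assumption-labeled illustration: `HiveSetWarningSketch` and `warningFor` are hypothetical names, the catalog implementation is passed in as a plain string instead of being read from a SparkSession, and the message is returned instead of being logged.

```scala
object HiveSetWarningSketch {
  /**
   * Returns a warning when a Hive key is set while the Hive catalog is in use.
   * catalogImpl stands in for the value of spark.sql.catalogImplementation.
   */
  def warningFor(catalogImpl: String, key: String, value: String): Option[String] =
    if (catalogImpl == "hive" && key.startsWith("hive.")) {
      Some(
        s"'SET $key=$value' might not work, since Spark doesn't support changing " +
          "the Hive config dynamically. Please pass the Hive-specific config by adding the " +
          s"prefix spark.hadoop (e.g., spark.hadoop.$key) when starting a Spark application.")
    } else {
      None // Non-Hive keys and non-Hive catalogs produce no warning.
    }
}
```

Returning an `Option[String]` keeps the sketch free of logging dependencies; in the PR the equivalent text goes to `logWarning`.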

You can refer to the description in

LGTM pending a few minor comments.

Test build #80297 has finished for PR 18769 at commit

Test build #80298 has finished for PR 18769 at commit

retest this please

Test build #80299 has finished for PR 18769 at commit

retest this please

Test build #80302 has finished for PR 18769 at commit

Thanks! Merging to master.

@gatorsmile The docs syntax issues were fixed by #18793.

What changes were proposed in this pull request?

Since Spark 2.0.0, SET commands for Hive configs do not pass the values to HiveClient. When users try to set a Hive config this way, this PR logs a warning that points them to set the Hive config before SparkSession is initialized.
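As a rough sketch of the workaround the warning recommends, the helper below rewrites Hive keys to their `spark.hadoop.`-prefixed form so they can be supplied at startup. `HiveConfPrefixSketch`, `toStartupConf`, and `toSubmitArgs` are hypothetical names used only for illustration, and `hive.exec.max.dynamic.partitions` is just an example key.

```scala
object HiveConfPrefixSketch {
  /** Adds the spark.hadoop. prefix to Hive keys; other keys pass through unchanged. */
  def toStartupConf(settings: Map[String, String]): Map[String, String] =
    settings.map { case (key, value) =>
      if (key.startsWith("hive.")) (s"spark.hadoop.$key", value) else (key, value)
    }

  /** Renders the settings as --conf arguments, sorted by key for stable output. */
  def toSubmitArgs(settings: Map[String, String]): Seq[String] =
    toStartupConf(settings).toSeq.sortBy(_._1).flatMap { case (key, value) =>
      Seq("--conf", s"$key=$value")
    }
}
```

The resulting pairs could then be given to `SparkSession.builder().config(key, value)` before `getOrCreate()` is called, or passed as `--conf` flags when launching the application.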

How was this patch tested?

Manual tests.