ZOOKEEPER-4293: Lock Contention in ClientCnxnSocketNetty #1917

anmolnar merged 1 commit into apache:master from …


@eolivelli, @maoling, please take a look when you have a chance. This replaces #1713, which you looked at last year.

|

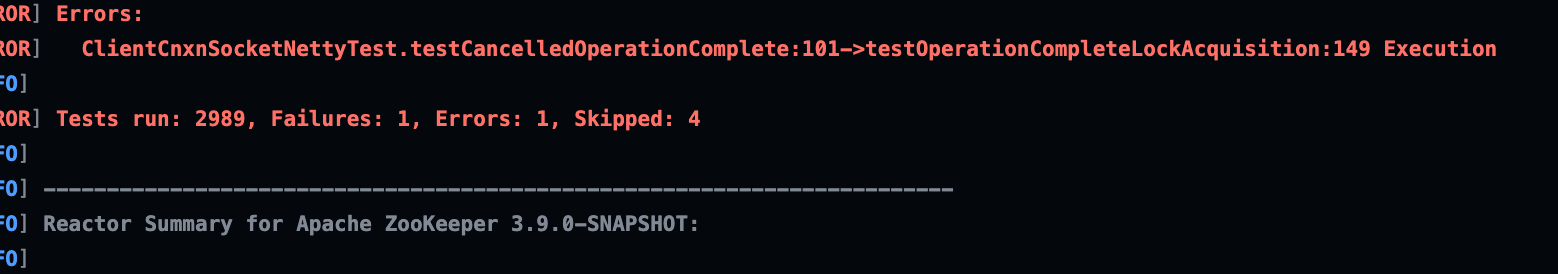

It looks like some tests related to this patch failed on CI. Can you please take a look, @MikeEdgar?


@eolivelli, I've made a change that should address the NPE in the new test. Question: I see errors locally around …


@eolivelli, please take another look.


@eolivelli @anmolnar @phunt @symat PTAL. CI has not yet run with the test fix. Please also note my earlier question regarding …

kezhuw left a comment

I submitted a reproducing test in kezhuw@0f2610b. Based on the test case, I think we should fix two sub-issues:

- A deadlock caused by the synchronous close combined with having only one event loop thread.

- Preventing the completion of an old connection from interfering with the current connection.

I have left comments on both.

I hope this test case helps with the review.

        afterConnectFutureCancel();
    }
    if (channel != null) {
        channel.close().syncUninterruptibly();


I think it would be good to move this blocking syncUninterruptibly call out of the lock scope. This could also solve the deadlock issue.

    connectLock.lock();
    try {
        if (connectFuture != null) {
            connectFuture.cancel(false);


After looking at the netty code in AbstractNioChannel::connect, AbstractEpollChannel::connect and AbstractKQueueChannel::connect, I think the statement connectFuture.cancel(false) could be the root cause.

Basically, a connecting future is uncancellable, which means connectFuture.cancel(false) could be a no-op. So the old connectFuture could still be pending completion. When we clean up the new connectFuture while the old connectFuture completes, both get stuck because no event loop thread is left to complete channel.close().syncUninterruptibly() (close is invoked on the event loop thread).

We could also wait for connectFuture to complete in cleanup, but I don't think that is a good idea. A connection timeout is one possible reason we end up here, and it makes no sense to block the next connection for the same reason.

I have watched this issue for a while and could not imagine a situation where this hang occurs with only a single connectFuture: reaching channel.close().syncUninterruptibly() means its connectFuture has already completed successfully.

        }
    }
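To make the cycle described above concrete, here is a minimal sketch that models it with plain java.util.concurrent types instead of Netty. Everything in it is hypothetical: a single-threaded executor stands in for the event loop, a ReentrantLock for connectLock, and Future.get with a timeout for syncUninterruptibly() (the timeout exists only so the demo terminates). It assumes, as described above, that both the stale completion callback and the close run on the one event loop thread.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical model: a single-threaded executor plays the Netty event loop
// and connectLock plays the client's connect lock.
class CloseDeadlockSketch {
    final ExecutorService eventLoop = Executors.newSingleThreadExecutor();
    final ReentrantLock connectLock = new ReentrantLock();

    // The old connection's completion callback runs on the event loop and
    // needs connectLock before it can do anything.
    private void scheduleOldConnectCompletion() {
        eventLoop.execute(() -> {
            connectLock.lock();
            try {
                // handle the stale connect result
            } finally {
                connectLock.unlock();
            }
        });
    }

    // Closing is performed on the event loop, so the returned future cannot
    // complete until all previously queued event loop tasks have finished.
    private Future<?> closeChannel() {
        return eventLoop.submit(() -> {
            // close the socket
        });
    }

    // Cleanup as the SendThread performs it: while holding connectLock.
    // Returns false if waiting for the close timed out, i.e. the point where
    // an untimed syncUninterruptibly() would hang forever.
    boolean cleanup(boolean waitForClose, long timeoutMs) {
        connectLock.lock();
        try {
            scheduleOldConnectCompletion();         // once run, blocks on connectLock
            Future<?> closeFuture = closeChannel(); // queued behind that blocked task
            if (waitForClose) {
                try {
                    // Stand-in for syncUninterruptibly(); the timeout only
                    // keeps this demo from hanging.
                    closeFuture.get(timeoutMs, TimeUnit.MILLISECONDS);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return false;
                } catch (ExecutionException | TimeoutException e) {
                    return false;
                }
            }
            return true; // close was not awaited, or it completed in time
        } finally {
            connectLock.unlock();
        }
    }

    // Lets the queued tasks drain and stops the "event loop" thread.
    boolean shutdown(long timeoutMs) {
        eventLoop.shutdown();
        try {
            return eventLoop.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

In this model, cleanup(true, …) returns false because the close is queued behind a callback that waits for the lock the caller holds, while cleanup(false, …) returns immediately and both queued tasks drain once the lock is released. That mirrors the two options discussed in the review: move the blocking wait out of the lock scope, or drop it.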

    boolean cancelled(ChannelFuture channelFuture) {


I think we should focus more on the identity of channelFuture versus connectFuture. That makes it clear that channelFuture could come from an old connection. It would be good to document this a bit.

It is also possible that the new connection has not completed yet. In that case, the old connection should simply be filtered out; otherwise we could introduce inconsistencies with the outside world.
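A minimal sketch of the identity check, assuming CompletableFuture as a stand-in for Netty's ChannelFuture. The class and method names (ConnectAttemptTracker, connect, cleanup, onConnectComplete) are hypothetical and do not correspond to ClientCnxnSocketNetty. A completion is acted on only if it is the very future the current attempt is tracking, which also filters out completions that arrive after cleanup has cleared connectFuture.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: CompletableFuture stands in for Netty's ChannelFuture.
class ConnectAttemptTracker {
    private final ReentrantLock connectLock = new ReentrantLock();
    private CompletableFuture<Void> connectFuture; // current attempt; guarded by connectLock

    // Starts a new attempt; any previous attempt becomes stale.
    CompletableFuture<Void> connect() {
        connectLock.lock();
        try {
            connectFuture = new CompletableFuture<>();
            return connectFuture;
        } finally {
            connectLock.unlock();
        }
    }

    // Cleanup forgets the current attempt; its future may still complete later.
    void cleanup() {
        connectLock.lock();
        try {
            connectFuture = null;
        } finally {
            connectLock.unlock();
        }
    }

    // Completion listener. Returns true only when the completed future is the
    // current attempt and it succeeded; everything else is ignored.
    boolean onConnectComplete(CompletableFuture<Void> completed) {
        connectLock.lock();
        try {
            if (completed != connectFuture) {
                // Identity check; also covers connectFuture == null after cleanup.
                return false;
            }
            connectFuture = null;
            // isCompletedExceptionally() is also true for cancelled futures.
            return completed.isDone() && !completed.isCompletedExceptionally();
        } finally {
            connectLock.unlock();
        }
    }
}
```

Because a stale future never matches, its completion cannot overwrite state belonging to a newer attempt, whether or not that newer attempt has completed yet.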

@eolivelli, what are your thoughts on @kezhuw's comments? Does this PR need additional updates, or can it be accepted as-is?

kezhuw left a comment

I think the fix could be as simple as dropping syncUninterruptibly from channel.close().syncUninterruptibly(). That way we break the deadlock chain.

netty/netty#13849 probably "fixed" this. But I think it is still vital for us to recognize that a completed channelFuture may belong to an old, dangling connection. So I tend to fix this on our side as well.

    if (!channelFuture.isSuccess()) {
        LOG.warn("future isn't success.", channelFuture.cause());
        return;
    } else if (connectFuture == null) {


How about simply checking channelFuture != connectFuture at an earlier stage? This also covers connectFuture == null. When channelFuture != connectFuture, we should do nothing and simply return.

        afterConnectFutureCancel();
    }
    if (channel != null) {
        channel.close().syncUninterruptibly();


How about dropping the .syncUninterruptibly() part? That way we do not wait for the channel close completion callback, which could deadlock with the old connectFuture's completion callback.

I confirmed this with kezhuw@0f2610b. After netty/netty#13849, … Even so, I still prefer to fix this on the ZooKeeper side.


@kezhuw, @eolivelli, I'm not in a position at the moment to devote much time to reworking and testing this PR, especially in an integrated environment where the issue originally occurred for us. If it needs the adjustments proposed by @kezhuw, I wonder whether they should go in a separate PR and this one should simply be closed. Please let me know your thoughts.

Calling a sync method in an async operation inside a lock is a code smell, I believe, so I tend to prefer this solution over what the patch suggests. Is this …

The Jira issue ZOOKEEPER-4293 has the deadlock stack dump. Below is what kezhuw@0f2610b reproduces:

graph TD;

SendThread -- blocks on --- ChannelClosing[channel closing];

ChannelClosing[channel closing] -- waits for event loop --- ConnectFutureCancellation[old connection cancellation];

ConnectFutureCancellation[old connection cancellation] -- blocks on lock --- SendThread;

I think the bug has multiple facets:

Theoretically, we can fix the deadlock by breaking any of the above. netty/netty#13849 breaks the third. I do think bumping the netty version could fix this deadlock. But I also think it is fragile to depend on a dependency version bump to fix a bug, since the netty version could be pinned or upgraded independently. So, personally, I prefer to fix it on our side. cc @anmolnar


Thanks for the clarification, @kezhuw. I appreciate your nice diagram.

Thoughts?

@anmolnar, good to go.

@MikeEdgar, what do you think?


anmolnar left a comment

Sorry, I meant that we don't need this modification at all. Instead, just do the two things mentioned in the previous comment.

Signed-off-by: Michael Edgar <medgar@redhat.com>

@anmolnar, done.

anmolnar left a comment

LGTM.

@eolivelli @kezhuw PTAL.

    }

    - if (bytesRead == litmus.length && SslHandler.isEncrypted(Unpooled.wrappedBuffer(litmus))) {
    + if (bytesRead == litmus.length && SslHandler.isEncrypted(Unpooled.wrappedBuffer(litmus), false)) {

This variant is brand new in netty-4.1.113.Final, so this patch will enforce that minimum netty version. Given my comments in #1917 (comment), I am +1 on this. Clients will have to bump their netty version to match this patch at compile time.

netty commit: netty/netty@dc30c33#diff-963dd9b8e77e913395273812889e9b6f2f449fe55e2b748b95311b219d1c58faR1286

Isn't that a problem if we backport this to 3.9? (I want to.)

Can we just use another variant which is backward compatible?


The only other variant is the deprecated one, which fails to compile due to the -Werror flag. This file change could possibly be left out of a backport which uses an older netty version.


No worries, we'll upgrade on 3.9 too.

The cppunit tests are stuck here too.

…ly (#1917) Reviewers: anmolnar, kezhuw Author: MikeEdgar Closes #1917 from MikeEdgar/ZOOKEEPER-4293

Merged. Thanks, @MikeEdgar!

…nterruptibly (apache#1917) Reviewers: anmolnar, kezhuw Author: MikeEdgar Closes apache#1917 from MikeEdgar/ZOOKEEPER-4293 (cherry picked from commit 9df310a)

…ly (apache#1917) Reviewers: anmolnar, kezhuw Author: MikeEdgar Closes apache#1917 from MikeEdgar/ZOOKEEPER-4293

…adlock) (branch-3.8 backport) ZOOKEEPER-4293: Bump netty to 4.1.113.Final, remove syncUninterruptibly (#1917) Reviewers: anmolnar, kezhuw Author: MikeEdgar Closes #1917 from MikeEdgar/ZOOKEEPER-4293 Reviewers: kezhuw Author: anmolnar Closes #2284 from anmolnar/ZOOKEEPER-4293_38

Check for failed/cancelled ChannelFutures before acquiring connectLock. This prevents lock contention where cleanup is unable to complete.

Replaces #1713 (targets master rather than branch-3.5).
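The approach in this description, returning early for failed or cancelled futures before taking connectLock, can be sketched as follows. This is a hypothetical illustration, not the patch itself: CompletableFuture replaces ChannelFuture, EarlyExitHandler and onConnectComplete are made-up names, and the counter exists only so the behavior can be observed. Note that an early exit alone does not filter out a stale future that completed successfully, which is the case the identity check discussed in the review addresses.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: failed or cancelled completions return before touching
// connectLock, so they never compete with cleanup for the lock.
class EarlyExitHandler {
    final ReentrantLock connectLock = new ReentrantLock();
    final AtomicInteger lockAcquisitions = new AtomicInteger(); // demo instrumentation only

    void onConnectComplete(CompletableFuture<Void> future) {
        // isCompletedExceptionally() already covers cancellation; isCancelled()
        // is kept for readability.
        if (future.isCancelled() || future.isCompletedExceptionally()) {
            return; // nothing to hand over; do not take connectLock
        }
        connectLock.lock();
        try {
            lockAcquisitions.incrementAndGet();
            // ... hand the connected channel over to the client ...
        } finally {
            connectLock.unlock();
        }
    }
}
```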