

Add support for running compute worker with other container engines (Podman) #772


This is in preparation for generalizing container engine support to allow the use of podman.

Add support for configuring the container engine through an environment variable (CONTAINER_ENGINE_EXECUTABLE).
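Here is a minimal sketch of how such a setting might be read, assuming Python as in the compute worker. The names `container_engine` and `pull_command` are hypothetical, and so is the fallback to `docker` when the variable is unset or empty. This is an illustration, not the PR's code. The argv it builds has the same shape as the `cmd` list in simulate_worker_actions.py further down this thread.

```python
import os
from typing import List, Mapping, Optional

# Assumed default when CONTAINER_ENGINE_EXECUTABLE is unset or blank.
DEFAULT_ENGINE = "docker"


def container_engine(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the engine executable named by CONTAINER_ENGINE_EXECUTABLE."""
    env = os.environ if env is None else env
    value = env.get("CONTAINER_ENGINE_EXECUTABLE", "").strip()
    return value or DEFAULT_ENGINE


def pull_command(image: str, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Build the argv for pulling an image with the configured engine."""
    return [container_engine(env), "pull", image]


if __name__ == "__main__":
    # With CONTAINER_ENGINE_EXECUTABLE=podman this prints
    # ['podman', 'pull', 'codalab/codalab-legacy:py3']
    print(pull_command("codalab/codalab-legacy:py3",
                       {"CONTAINER_ENGINE_EXECUTABLE": "podman"}))
```

The helpers take an optional mapping instead of reading `os.environ` directly, so they can be tested without mutating the process environment.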

Docker will create them, but other container engines like podman may not.
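The commit message does not say what "them" refers to. Assume it means the host directories used as bind-mount sources. In that case, an engine-agnostic approach is to create the directories before starting the container. The helper `prepare_bind_mounts` is hypothetical. The mount names in the usage note mirror the `-v` options of the scoring container run later in this thread.

```python
import os
from typing import Dict, List


def prepare_bind_mounts(base_dir: str, mounts: Dict[str, str]) -> List[str]:
    """Create each host directory up front and return matching -v arguments.

    `mounts` maps a sub-directory of base_dir to its path inside the container.
    """
    args: List[str] = []
    for sub_dir, container_path in mounts.items():
        host_path = os.path.join(base_dir, sub_dir)
        # Docker creates a missing -v source directory; Podman may not,
        # so create it ourselves (no error if it already exists).
        os.makedirs(host_path, exist_ok=True)
        args += ["-v", f"{host_path}:{container_path}"]
    return args
```

Usage, assuming a temporary submission directory: `prepare_bind_mounts(tmp_dir, {"program": "/app/program", "output": "/app/output"})` returns the `-v` pairs to splice into the engine's `run` argv.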

This Containerfile allows rootless Podman in Podman (PINP).

The podman container is built as root

Add support for running compute worker with other container engines

@Didayolo Not sure what happened to my PR? Merged into another branch? I have a couple more commits I would like to push.

OK, I see you are running the CI. Can I push my additional commits?

Hi Chris, indeed that is a bit of a confusing move. In the future, it may be better practice to keep external contributions in forks of the repository. If you make new commits to your branch in your repository, we can simply merge them into this new branch.

@Didayolo I just pushed two more commits to my branch.

Podman | New commits from cjh1 repository

Done. In the future, it would be interesting to know whether there is a way to launch CI tests directly from an external repository.

We could have tested the feature locally on our test environment with "git fetch origin pull/763/head:podmanchris" followed by "git checkout podmanchris". We can also run our tests locally inside the django container by launching the py.test command from it. I think this is a better workflow for next time.
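The local-testing workflow above can be scripted. This is a minimal sketch using Python's `subprocess`. The function names are hypothetical. `pull/<number>/head` is the read-only ref GitHub publishes for every pull request.

```python
import subprocess
from typing import List


def pr_checkout_commands(pr_number: int, local_branch: str,
                         remote: str = "origin") -> List[List[str]]:
    """Return the git commands that fetch a PR head into a local branch."""
    if pr_number <= 0:
        raise ValueError("PR number must be positive")
    return [
        ["git", "fetch", remote, f"pull/{pr_number}/head:{local_branch}"],
        ["git", "checkout", local_branch],
    ]


def checkout_pr(pr_number: int, local_branch: str, repo_dir: str = ".") -> None:
    """Run the commands inside repo_dir, stopping at the first failure."""
    for cmd in pr_checkout_commands(pr_number, local_branch):
        subprocess.run(cmd, cwd=repo_dir, check=True)
```

For example, `checkout_pr(763, "podmanchris", repo_dir="path/to/codabench")` reproduces the two commands quoted above. The path is a placeholder.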

|

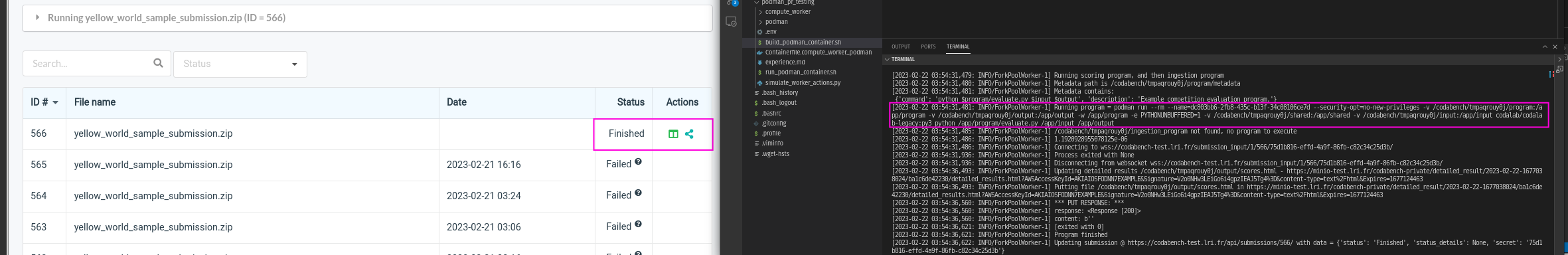

Hi all, I've been messing around with this this weekend and first I want to say Anne-Catherine's suggestion "git fetch origin pull/763/head:podmanchris" works fantastically and I believe that gives the effect we saw at first where the repo chris made called "podman" was effectively cloned to a local branch we had on the test VM (codabench-test.lri.fr). Anyways the rest of my experience is encapsulated below: |

Notes on PR #772

First steps: create a queue

# podman_queue_cpu

BROKER_URL=pyamqp://82801eec-626e-4448-98ef-0340cf22ab17:c0b6d3d9-931f-4612-8289-b021fccff340@codabench-test.lri.fr:5672/740536a7-1183-4169-990f-9bc950e0c9cb

Copy the necessary files from the codabench repo to a personal working directory and create the necessary files:

# as user bbearce

cd /home/bbearce;

mkdir podman_pr_testing;

cp -r /home/codalab/codabench/podman /home/bbearce/podman_pr_testing/podman;

cp -r /home/codalab/codabench/compute_worker /home/bbearce/podman_pr_testing/compute_worker;

cp /home/codalab/codabench/Containerfile.compute_worker_podman /home/bbearce/podman_pr_testing/Containerfile.compute_worker_podman;

touch podman_pr_testing/.env;

Fill .env with this text:

Build podman image

Create these files:

build_podman_container.sh:

podman build -f Containerfile.compute_worker_podman -t codalab/codabench_worker_podman .

This creates an image named as follows (localhost/codalab/codabench_worker_podman):

bbearce@codabench-test-acl-220909:~/podman_pr_testing$ podman images

REPOSITORY TAG IMAGE ID CREATED SIZE

localhost/codalab/codabench_worker_podman latest 3560a6e0c8c2 12 minutes ago 747 MB

Create worker user

sudo useradd worker

Contents of /etc/passwd:

...

dtran:x:1004:1004:,,,:/home/dtran:/bin/bash

acl:x:1005:1005::/home/acl:/bin/bash

worker:x:1006:1006::/home/worker:/bin/sh

run_podman_container.sh:

# Note the "localhost" prefix, which is required

podman run -d \

--env-file .env \

--name compute_worker \

--security-opt="label=disable" \

--device /dev/fuse \

--user worker \

--restart unless-stopped \

--log-opt max-size=50m \

--log-opt max-file=3 \

localhost/codalab/codabench_worker_podman

The container launches successfully and I get this in the logs:

bbearce@codabench-test-acl-220909:~/podman_pr_testing$ podman logs -f compute_worker

-------------- compute-worker@767974418f5b v4.4.0 (cliffs)

--- ***** -----

-- ******* ---- Linux-5.10.0-17-cloud-amd64-x86_64-with-glibc2.34 2023-02-21 03:04:50

- *** --- * ---

- ** ---------- [config]

- ** ---------- .> app: __main__:0x7ff99a447130

- ** ---------- .> transport: amqp://82801eec-626e-4448-98ef-0340cf22ab17:**@codabench-test.lri.fr:5672/740536a7-1183-4169-990f-9bc950e0c9cb

- ** ---------- .> results: disabled://

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> compute-worker exchange=compute-worker(direct) key=compute-worker

Run submission on hello world challenges

When running a submission, I get these logs:

[2023-02-21 03:06:13,526: INFO/ForkPoolWorker-1] Running pull for image: codalab/codalab-legacy:py3

time="2023-02-21T03:06:13Z" level=error msg="running `/usr/bin/newuidmap 16 0 1000 1 1 1 999 1000 1001 64535`: newuidmap: write to uid_map failed: Operation not permitted\n"

Error: cannot set up namespace using "/usr/bin/newuidmap": should have setuid or have filecaps setuid: exit status 1

[2023-02-21 03:06:13,589: INFO/ForkPoolWorker-1] Full command ['podman', 'pull', 'codalab/codalab-legacy:py3']

[2023-02-21 03:06:13,589: INFO/ForkPoolWorker-1] Pull for image: codalab/codalab-legacy:py3 returned a non-zero exit code! Full command output ['podman', 'pull', 'codalab/codalab-legacy:py3']

[2023-02-21 03:06:13,589: INFO/ForkPoolWorker-1] Updating submission @ https://codabench-test.lri.fr/api/submissions/563/ with data = {'status': 'Failed', 'status_details': 'Pull for codalab/codalab-legacy:py3 failed!', 'secret': 'afef73e2-9939-41f5-a804-9dc383e50eb9'}

[2023-02-21 03:06:13,612: INFO/ForkPoolWorker-1] Submission updated successfully!

[2023-02-21 03:06:13,612: INFO/ForkPoolWorker-1] Destroying submission temp dir: /codabench/tmpvvmn6l16

[2023-02-21 03:06:13,614: INFO/ForkPoolWorker-1] Task compute_worker_run[155e6621-6190-4480-a073-2d6872af80ca] succeeded in 0.24267210997641087s: None

I can shell into the container like so:

podman exec -it compute_worker bash

Once there, I can simulate the same actions with simulate_worker_actions.py:

# simulate_worker_actions.py

from subprocess import CalledProcessError, check_output

CONTAINER_ENGINE_EXECUTABLE = "podman"

image_name = "codalab/codalab-legacy:py3"

cmd = [CONTAINER_ENGINE_EXECUTABLE, 'pull', image_name]

# cmd = [CONTAINER_ENGINE_EXECUTABLE, 'pull', "docker://"+image_name]

# cmd = ["sudo", CONTAINER_ENGINE_EXECUTABLE, 'pull', "docker://"+image_name]

container_engine_pull = check_output(cmd)

I get the following output:

>>> container_engine_pull = check_output(cmd)

ERRO[0000] running `/usr/bin/newuidmap 42 0 1000 1 1 1 999 1000 1001 64535`: newuidmap: write to uid_map failed: Operation not permitted

Error: cannot set up namespace using "/usr/bin/newuidmap": should have setuid or have filecaps setuid: exit status 1

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/lib64/python3.11/subprocess.py", line 466, in check_output

return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib64/python3.11/subprocess.py", line 571, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['podman', 'pull', 'codalab/codalab-legacy:py3']' returned non-zero exit status 125.

>>>

I believe there is something wrong with the user "worker" created outside the image (on the host server) and the one created in the Podmanfile (a Dockerfile-like file) "Containerfile.compute_worker_podman". It seems the created worker user doesn't have the right permissions on the server to run a podman pull command. I followed this guide: https://github.com/codalab/codabench/wiki/Compute-worker-installation-with-Podman
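The ID mapping in the failing `newuidmap` call can be reproduced by hand. In the logged call, container ID 0 maps to the invoking user's own ID, and the subordinate ranges are then laid end to end starting at container ID 1. The sketch below illustrates that layout; it is not Podman's code. It assumes `worker` has UID 1000 inside the image and owns the subordinate ranges 1:999 and 1001:64535, the values written to `/etc/subuid` by the Containerfile's "Setup user" step. The error message itself says `newuidmap` needs setuid or file capabilities to write such a mapping.

```python
from typing import List, Tuple

Range = Tuple[int, int]            # (first host ID, count), as in /etc/subuid
Triplet = Tuple[int, int, int]     # (container ID, host ID, count)


def rootless_id_map(own_id: int, subordinate: List[Range]) -> List[Triplet]:
    """Map container ID 0 to own_id, then stack subordinate ranges from ID 1."""
    mapping: List[Triplet] = [(0, own_id, 1)]
    next_container_id = 1
    for host_start, count in subordinate:
        mapping.append((next_container_id, host_start, count))
        next_container_id += count
    return mapping


def newuidmap_args(mapping: List[Triplet]) -> str:
    """Flatten the triplets as they appear after the PID in a newuidmap call."""
    return " ".join(str(n) for triplet in mapping for n in triplet)


if __name__ == "__main__":
    m = rootless_id_map(1000, [(1, 999), (1001, 64535)])
    print(newuidmap_args(m))  # 0 1000 1 1 1 999 1000 1001 64535
```

The printed string matches the arguments in the logged error after the PID (`newuidmap 16 ...`), which suggests the ranges themselves were as intended.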

'['podman', 'pull', 'docker://codalab/codalab-legacy:py3']'

I can confirm that I was NOT on that commit, haha. I think we originally made this branch and pulled your code before you added this. I deleted the branch we had, pulled the latest PR, and voila! At this point it definitely works for CPU, and I bet it works for GPU too, but I still need to test that. Maybe Anne-Catherine has tried it; if not, I can. I also need to briefly review the code changes, but my first pass looked pretty reasonable.

@bbearce Great, I was hoping that was the case 😄. The GPU version was added by @dtuantran (I made two updates to get it working). However, we will not be using it for deployment at NERSC, as we have infrastructure that injects the correct drivers and libraries for the GPUs. So if testing it is holding up this PR, I would suggest we move it to a separate PR and get the CPU worker merged.

GPU Testing

Hello. I may have configured something incorrectly, but this was on a VM we have provisioned for GPU testing. Tuan already:

Initial Steps

cd /home/ubuntu

mkdir podman_pr_testing

cd podman_pr_testing

mkdir gpu_worker

git clone https://github.com/codalab/codabench.git

cd codabench

# Note: I installed "gh", the GitHub CLI (new?)

# https://github.com/cli/cli/blob/trunk/docs/install_linux.md

gh pr checkout 772

git branch

develop

* podman

cd ../

Check everything is OK:

sudo podman run --rm -it \

--security-opt="label=disable" \

--hooks-dir=/usr/share/containers/oci/hooks.d/ \

nvidia/cuda:11.6.2-base-ubuntu20.04 nvidia-smi

# Without sudo I get this error

...

Error: writing blob: adding layer with blob "sha256:846c0b181fff0c667d9444f8378e8fcfa13116da8d308bf21673f7e4bea8d580": Error processing tar file(exit status 1): potentially insufficient UIDs or GIDs available in user namespace (requested 0:42 for /etc/gshadow): Check /etc/subuid and /etc/subgid: lchown /etc/gshadow: invalid argument

I checked these files and they contain the worker user (this is on the GPU VM itself, not in a container):

vim /etc/subuid

#dtran:110000:65536

#worker:175536:65536

vim /etc/subgid

#dtran:110000:65536

#worker:175536:65536

sudo vim /etc/gshadow

#...

#worker:!::

#...
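"requested 0:42" means the layer contains a file owned by GID 42, here /etc/gshadow. A rootless user namespace only provides container IDs 0 through N, where N is the total size of the subordinate ranges of the user who runs podman. You can check a given user against these files with the sketch below. The helper names are hypothetical. Skipping blank lines and lines that start with `#` is a choice made for this sketch. The lookup is per user, so an entry for `worker` does not cover a podman command run as another account.

```python
from typing import Dict, List, Tuple

Ranges = Dict[str, List[Tuple[int, int]]]  # user -> [(start, count), ...]


def parse_subid(text: str) -> Ranges:
    """Parse /etc/subuid or /etc/subgid content ("user:start:count" lines)."""
    ranges: Ranges = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Sketch-only choice: ignore blank lines and '#'-prefixed lines.
        if not line or line.startswith("#"):
            continue
        user, start, count = line.split(":")
        ranges.setdefault(user, []).append((int(start), int(count)))
    return ranges


def highest_mappable_id(user: str, ranges: Ranges) -> int:
    """Container ID 0 maps to the user itself; each subordinate ID adds one more."""
    return sum(count for _, count in ranges.get(user, []))


def can_map(user: str, container_id: int, ranges: Ranges) -> bool:
    """True if container_id fits in the user's rootless namespace."""
    return 0 <= container_id <= highest_mappable_id(user, ranges)
```

Usage: `can_map("worker", 42, parse_subid(open("/etc/subgid").read()))` checks that GID 42 can be represented for `worker`. A user with no entry only gets ID 0, which would produce an error like the one above.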

...

# Setup user

RUN useradd worker; \

echo -e "worker:1:999\nworker:1001:64535" > /etc/subuid; \

echo -e "worker:1:999\nworker:1001:64535" > /etc/subgid;

...

Setup worker in gpu_worker folder

$ cp -r /home/ubuntu/podman_pr_testing/codabench/podman /home/ubuntu/podman_pr_testing/gpu_worker/podman;

$ cp -r /home/ubuntu/podman_pr_testing/codabench/compute_worker /home/ubuntu/podman_pr_testing/gpu_worker/compute_worker;

$ cp -r /home/ubuntu/podman_pr_testing/codabench/Containerfile.compute_worker_podman /home/ubuntu/podman_pr_testing/gpu_worker/Containerfile.compute_worker_podman;

$ touch /home/ubuntu/podman_pr_testing/gpu_worker/.env;

$ cd gpu_worker

Build podman image

sudo podman build -f Containerfile.compute_worker_podman -t codalab/codabench-worker-podman-gpu:0.2 .

Start it

# podman run -d \ # doesn't work without sudo; sample logs below

sudo podman run -d \

--env-file .env \

--privileged \

--name gpu_compute_worker \

--device /dev/fuse \

--user worker \

--security-opt="label=disable" \

--restart unless-stopped \

--log-opt max-size=50m \

--log-opt max-file=3 \

--hooks-dir=/usr/share/containers/oci/hooks.d/ \

localhost/codalab/codabench-worker-podman-gpu:0.2

# Sample logs if not using sudo:

# Trying to pull localhost/codalab/codabench-worker-podman-gpu:0.2...

# WARN[0000] failed, retrying in 1s ... (1/3). Error: initializing source docker://localhost/codalab/codabench-worker-podman-gpu:0.2: pinging container registry localhost: Get "https://localhost/v2/": dial tcp 127.0.0.1:443: connect: connection refused

# Stop it

sudo podman stop gpu_compute_worker; sudo podman rm gpu_compute_worker;

Debug

sudo podman ps

sudo podman exec -it gpu_compute_worker bash

Competition Image

Run this from inside the worker, since that is where the scoring program runs. Check that it works:

# Actual command

[worker@3d177f2552ce compute_worker]$ podman run --rm \

--name=4570aed8-bd0a-439d-add2-8e6bfbcff315 \

--security-opt=no-new-privileges \

-v /codabench/tmpu6ajrbi0/program:/app/program \

-v /codabench/tmpu6ajrbi0/output:/app/output \

-w /app/program \

-e PYTHONUNBUFFERED=1 \

-v /codabench/tmpu6ajrbi0/shared:/app/shared \

-v /codabench/tmpu6ajrbi0/input:/app/input \

pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime python /app/program/evaluate.py /app/input /app/output

# Effectively the same thing

[worker@3d177f2552ce compute_worker]$ podman run --rm \

-it \

--security-opt=no-new-privileges \

pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime bash

# Output of nvidia-smi

root@3d177f2552ce:/workspace# nvidia-smi

bash: nvidia-smi: command not found

# My scoring program therefore cannot detect the GPU...

# import torch

# device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# submission_answer = 'cuda' if torch.cuda.is_available() else 'cpu'

# submission_answer ends up being 'cpu'

# With sudo (outside gpu_compute_worker)

sudo podman run --rm -it --security-opt=no-new-privileges pytorch/pytorch:1.12.1-cuda11.3-cudnn8-runtime bash

root@d714d157aae0:/workspace# nvidia-smi

Sun Mar 12 21:48:35 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.85.12 Driver Version: 525.85.12 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... On | 00000000:01:00.0 Off | N/A |

| 27% 26C P8 20W / 250W | 1MiB / 11264MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Did I miss something? I expected to be able to see the GPU from inside the worker, but I can't. Is there a chance my setup is wrong?

@bbearce You wouldn't expect to see any GPU processes unless there is code running that uses the GPU. In the invocation you show above, nothing would be running, since you are just running bash and nvidia-smi.

Hi all, having had a chance to review this with Tuan, we found that the issue was that I originally logged in as user "ubuntu". I used … Once I did that, Tuan and Anne-Catherine reminded me that I don't need to clone the codabench repository and build a GPU worker image: the design is that users can pull our pre-built image for simplicity. After taking all of this into account, the new worker that was spun up did not need sudo rights and connected perfectly. It could see the GPU and we could run GPU submissions. I now feel confident that this is fully tested and verified. My last note is that we should mention this login situation in the wiki once the PR is accepted.

Ready for merge!

@bbearce we have the following CircleCI test failing:

_ ERROR collecting src/apps/competitions/tests/test_legacy_command_replacement.py _

ImportError while importing test module '/app/src/apps/competitions/tests/test_legacy_command_replacement.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/local/lib/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

src/apps/competitions/tests/test_legacy_command_replacement.py:2: in <module>

from docker.compute_worker.compute_worker import replace_legacy_metadata_command

E ModuleNotFoundError: No module named 'docker'

Also, we should add new tests or edit existing ones for this new feature.

chown worker:worker -R /home/worker/compute_worker && \

pip3.8 install -r /home/worker/compute_worker/compute_worker_requirements.txt

CMD celery -A compute_worker worker \


@dtuantran How much space does this save? Just wondering whether it's worth it, given the loss of readability and ease of development compared with having multiple steps.


It doesn't save much space; sorry, I pushed it too quickly. I've reverted this file.

@bbearce Good catch, the CircleCI bug was due to a renaming of files.

We merged these changes into the podman branch of this repository in order to run the automatic tests. Here are the details of the original PR:

#763