
leaderboard scores limited to provided decimal points. #802


….yaml file, for each column a new variable 'precision' (integer) can be added


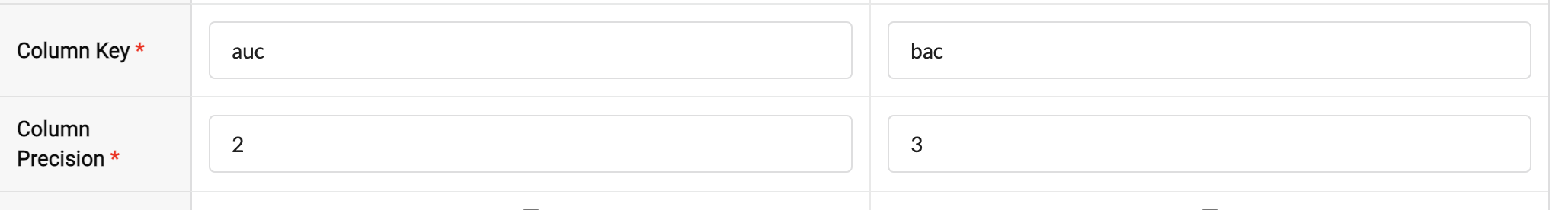

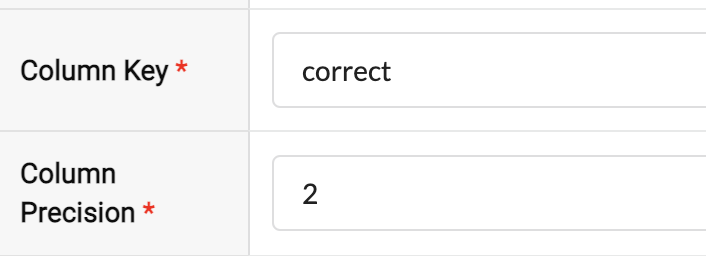

Do we also have a new field in the editor for the precision?

…board > add column

…lab/codabench into leaderboard_score_precision


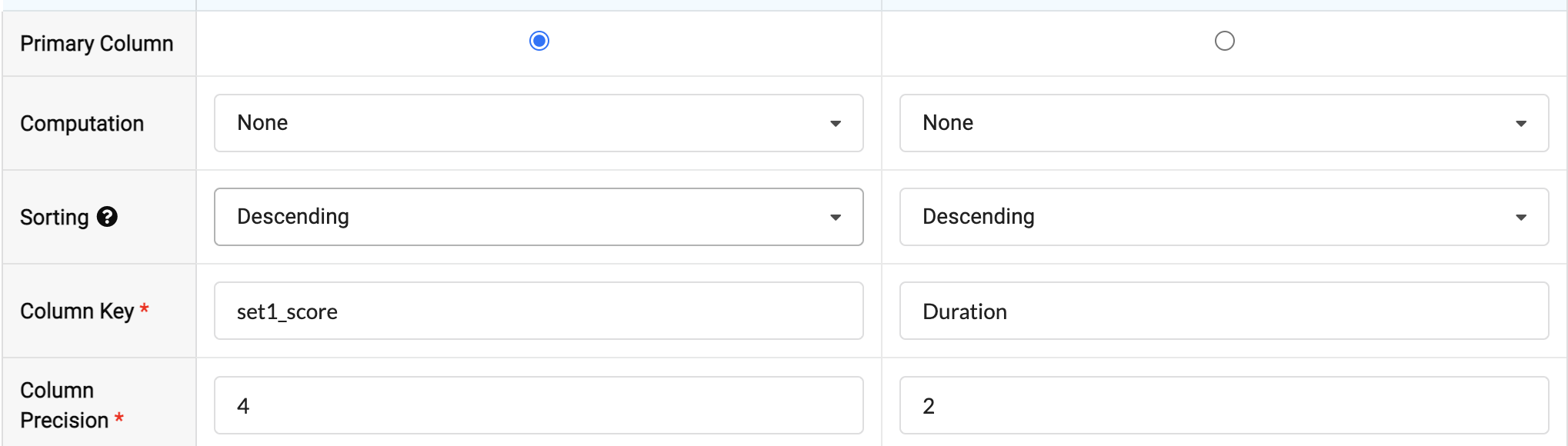

I followed these steps and I can see the precision.

I'm a bit confused about the problem in the V1 bundle.

Last point: updating the documentation at https://github.com/codalab/codabench/wiki/Yaml-Structure

@Didayolo I think I have covered all the points

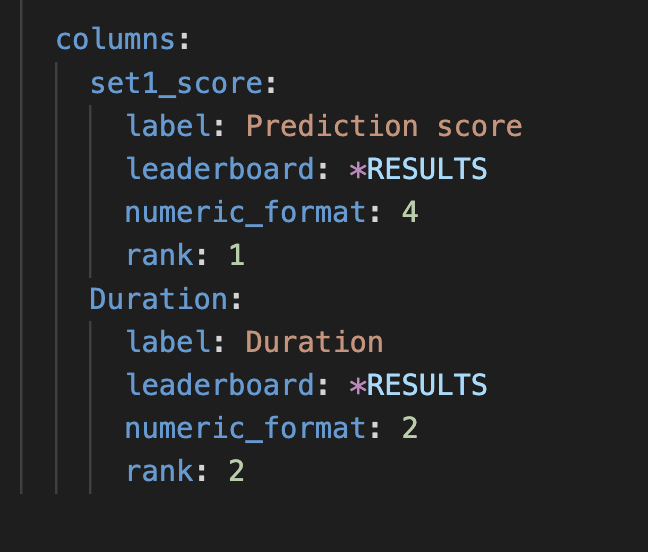

YAML from Iris bundle:

The rounding to 2 decimal places we added fails in this situation because one of the V1 bundles has …

What should be done in this case?

@ihsaan-ullah Your last commit seems a bit strange.

I changed this function …

Now, in the specific case of bundle v15, I passed …

I just checked the same file here: #828, and it looks like the difference is just the addition of …

My changes to the test will not affect the new additions, as these will use the default parameter.

To clarify a bit more: the precision passed as a parameter was already there, but it was hardcoded like this:

`assert Decimal(self.find('leaderboards table tbody tr:nth-of-type(1) td:nth-of-type(3)').text) == round(Decimal(prediction_score), 2)`

And now this is changed to:

`assert Decimal(self.find('leaderboards table tbody tr:nth-of-type(1) td:nth-of-type(3)').text) == round(Decimal(prediction_score), precision)`
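The change described in this comment, replacing the hardcoded `2` with a `precision` parameter that has a default, can be sketched roughly as below. This is only an illustration, not the actual test code: the helper name `expected_leaderboard_score` is hypothetical, and the default of 2 is assumed from the "2 decimal places" mentioned earlier in the thread.

```python
from decimal import Decimal


def expected_leaderboard_score(prediction_score, precision=2):
    """Return the score as the leaderboard is expected to display it.

    Hypothetical helper: `precision` defaults to 2, so callers that do not
    pass it keep the previously hardcoded behavior.
    """
    # Convert through str() so a float such as 0.1 is not expanded into its
    # exact binary representation before rounding.
    return round(Decimal(str(prediction_score)), precision)


# Default: same result as the old hardcoded round(..., 2).
default_score = expected_leaderboard_score("0.987654")

# A bundle that needs more digits passes its own precision.
precise_score = expected_leaderboard_score("0.987654", precision=4)

print(default_score, precise_score)
```

Existing callers stay untouched because they fall back to the default, while a single bundle-specific test can opt into a different precision.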

@ mention of reviewers

@Didayolo @bbearce

A brief description of the purpose of the changes contained in this PR.

In the leaderboard, scores are rounded to `precision` decimal points. `precision` can be provided for each column in the YAML file.

Issues this PR resolves

Fixes the overly long decimal numbers shown for scores in the leaderboard
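As a rough sketch of the behavior, assuming each leaderboard column is available as a dict after the YAML is parsed: only the `precision` key comes from this PR; the other keys, the sample values, the function name `display_score`, and the fallback of 2 are assumptions made for illustration.

```python
from decimal import Decimal

# Hypothetical column entries, mirroring what a parsed YAML column might
# look like. Only `precision` is taken from this PR's description.
columns = [
    {"title": "Accuracy", "key": "accuracy", "precision": 3},
    {"title": "Duration", "key": "duration"},  # no precision given
]

DEFAULT_PRECISION = 2  # assumption: fallback when a column sets no precision


def display_score(raw_score, column):
    """Round a raw score to the column's precision (or the fallback)."""
    precision = column.get("precision", DEFAULT_PRECISION)
    return round(Decimal(raw_score), precision)


raw = "0.9333333333333333"  # the kind of long score that cluttered the table
shown = [str(display_score(raw, column)) for column in columns]
print(shown)  # ['0.933', '0.93']
```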

Screenshot of solved problem

A checklist for hand testing

Checklist