-

-

Notifications

You must be signed in to change notification settings - Fork 748

Hold event loop while evicting #6174

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

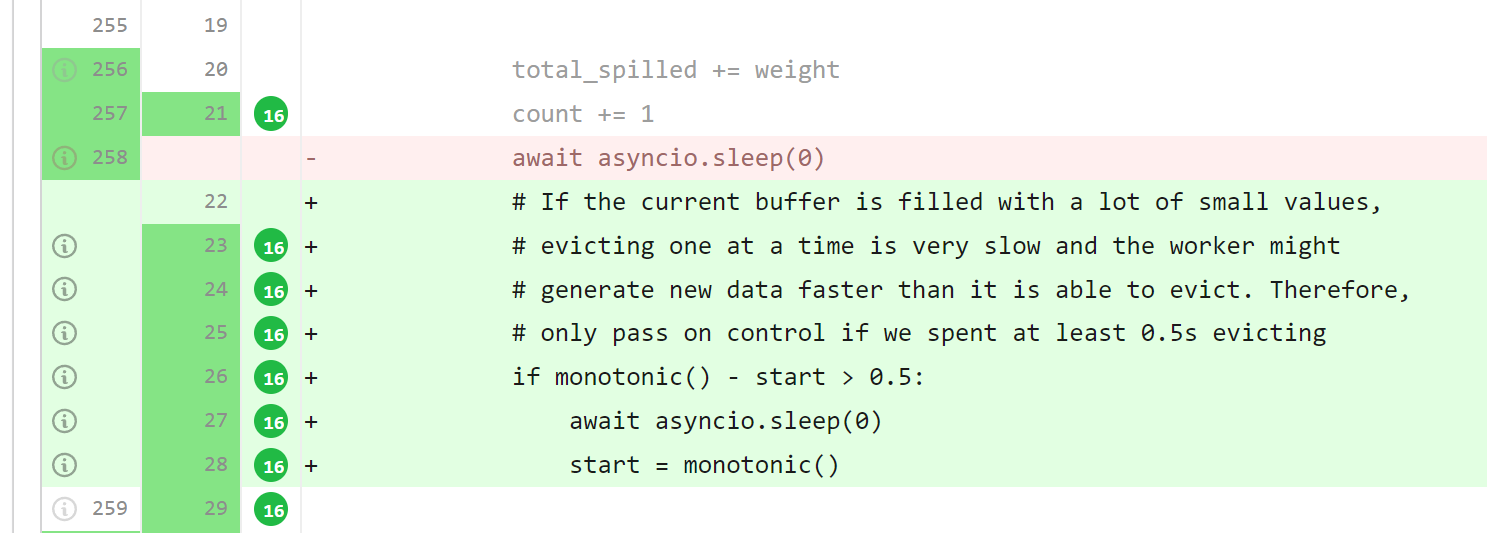

| # only pass on control if we spent at least 0.5s evicting | ||

| if monotonic() - start > 0.5: | ||

| await asyncio.sleep(0) | ||

| start = monotonic() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm confused by both the old and the new solution here. It seems like we're staying stuck in a while loop burning the CPU at 100%. Is this correct? If so, I'm against this design generally. (although I'm not objecting to this change, since it doesn't necessarily make things worse from my perspective.

Is my understanding correct here that we're blocking the event loop?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

My understanding was flawed here. @crusaderky and @fjetter set me straight here. I didn't realize that data.evict was blocking.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, it's blocking the event loop. No, it's not burning CPU at 100%.

I think the idea here is that it's a crude form of asyncio scheduling. You're basically saying "stop everything! there's nothing that could possibly be more important to do (launch tasks, fetch data, send data, talk to scheduler, etc.) than dumping data to disk right now." That's a bit of a dramatic approach, but not too wrong for this situation. Spilling to disk should probably be the highest priority thing we do at this moment. And ideally starting new tasks, fetching new data, or anything else that could produce memory probably should pause until eviction is done.

This only works because we don't have async disk #4424. With async writes, we'd have to figure out how to actually pause other subsystems without blocking the whole event loop in this situation.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, I don't disagree with any of that. My previous understanding was flawed. Please ignore my previous comment. I'm good here.

I'm totally fine passing control periodically.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No, it's not burning CPU at 100%.

This is hardly surprising - when a write() is stuck waiting on a slow network file system, it shouldn't burn CPU.

|

Are we ok merging this before the next release? I asked this in a recent meeting and @crusaderky and @fjetter both said "we'd like some time to look at this, but if by next friday nothing has changed then yes, merging makes sense" (paraphrasing) |

|

I find it a bit odd that all the tests still pass with this change. Clearly this code path is not really being tested? Since it was previous behavior for a while, it's probably safe to merge if nothing has changed. But I'd also hope we can look at it more first. |

|

Yeah, I'm happy leaving it in this state for a few days while folks poke around. Mostly I'm just giving advance warning that I plan to push this through if nothing happens over the next week. |

Why wouldn't they? Only a test with a single sleep(0) in it would be impacted.

It was before this PR. Somewhat worryingly, codecov is saying that there's at least one unit test somewhere that is spilling for more than 0.5s: https://app.codecov.io/gh/dask/distributed/compare/6174/changes Maybe it's worth writing one that doesn't rely on sluggish host performance? class C:

def __getstate__(self):

time.sleep(0.6)

return {} |

| from collections.abc import Callable, MutableMapping | ||

| from contextlib import suppress | ||

| from functools import partial | ||

| from time import monotonic |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

On Windows, this has a granularity of 15ms. Could you use #6181 instead?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe distributed.metrics.time would suffice?

|

The only thing that I can think of here is that the worker goes from 70% to well beyond 100% in less than 200ms ( Do the AWS hosts mount a swap file? If yes, is it on EBS? Would it be possible to unmount the swap file and see if we start seeing MemoryError instead? |

|

I would also like to see if the issue disappears or is heavily mitigated by reducing |

@crusaderky agreed. What do you think about #6177?

Definitely. Once we have that and async disk fixed, we could probably just pause here. |

|

....oh, crud. |

|

Superseded by #6189 |

Squashed commit of the following: commit e036bb0 Author: crusaderky <crusaderky@gmail.com> Date: Mon Apr 25 22:09:01 2022 +0100 High-res monotonic timer on windows commit 137a4f5 Merge: a27d869 b7fc7be Author: crusaderky <crusaderky@gmail.com> Date: Mon Apr 25 22:07:46 2022 +0100 Merge branch 'main' into pause_while_spilling commit a27d869 Author: crusaderky <crusaderky@gmail.com> Date: Mon Apr 25 21:59:22 2022 +0100 Code review commit 7d99d1a Merge: b9b945c 198522b Author: crusaderky <crusaderky@gmail.com> Date: Mon Apr 25 21:48:33 2022 +0100 Merge branch 'main' into pause_while_spilling commit b9b945c Author: crusaderky <crusaderky@gmail.com> Date: Mon Apr 25 11:37:42 2022 +0100 harden test commit 3ffa721 Merge: 8156313 b934ae6 Author: crusaderky <crusaderky@gmail.com> Date: Sun Apr 24 21:38:15 2022 +0100 Merge branch 'main' into pause_while_spilling commit 8156313 Author: crusaderky <crusaderky@gmail.com> Date: Sun Apr 24 21:37:54 2022 +0100 Fix test commit c150bd7 Author: crusaderky <crusaderky@gmail.com> Date: Sun Apr 24 21:33:29 2022 +0100 Merge in dask#6174 commit bf66a7c Merge: 87b534e 63622b3 Author: crusaderky <crusaderky@gmail.com> Date: Sun Apr 24 20:59:20 2022 +0100 Merge remote-tracking branch 'gjoseph92/worker-memory-hold-event-loop' into pause_while_spilling commit 87b534e Author: crusaderky <crusaderky@gmail.com> Date: Sun Apr 24 20:44:55 2022 +0100 Pause in the middle of spilling commit 63622b3 Author: Gabe Joseph <gjoseph92@gmail.com> Date: Thu Apr 21 14:47:02 2022 -0700 Hold event loop while evicting

#5878 undid #3424; this brings it back. This resolves #6110, but needs more discussion around whether this is actually the right way to fix it.

pre-commit run --all-files