-

Notifications

You must be signed in to change notification settings - Fork 5.3k

Removed scope pooling and added diagnostics #51163

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

- The ConcurrentQueue showed a 48% regression on AMD scenarios and a 4% regression on citrine linux. - Removed interface dispatch on clear

|

Tagging subscribers to this area: @eerhardt, @maryamariyan Issue Details

Fixes dotnet/aspnetcore#31703

|

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopePool.cs

Outdated

Show resolved

Hide resolved

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopePool.cs

Outdated

Show resolved

Hide resolved

- Cache 100 scoped entries maximum - Keep track of the maximum capacity up to a limit

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopePool.cs

Outdated

Show resolved

Hide resolved

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopePool.cs

Outdated

Show resolved

Hide resolved

|

Unfortunately this regresses #47607 |

|

As we have discussed offline, the pooling here has problems that include regressing performance of heteregenous services that process requests with varying number of services, or increasing GC pause times due to introducing Gen2 -> Gen0 references. I am sorry I cannot signoff on this change. I believe that the use of pooling here is a bad idea and it should be reverted. Feel free to overrule me and get somebody else to sing-off on it. |

|

Thanks for the feedback @jkotas, my take is that infrastructure shouldn't cost more than the work it's supporting. The change was motivated by the fact that orchard pays for the overhead of DI as being the top allocation. I think I've struck a reasonable balance based on your feedback and have adjusted numbers appropriately. I think one last tweak would be to tweak the maximum capacity so the worst case behavior of completely heterogeneous scopes is reasonable (but I think that scenario is more rare). In a typical ASP.NET Core app, the scopes are per request and are similar in size. The trick here is to find limit that cover reasonable size apps but don't harm other use cases. I think ~50 scoped services and a max of 100 initialCapacity is good for this (I will change the current numbers). |

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopePool.cs

Outdated

Show resolved

Hide resolved

|

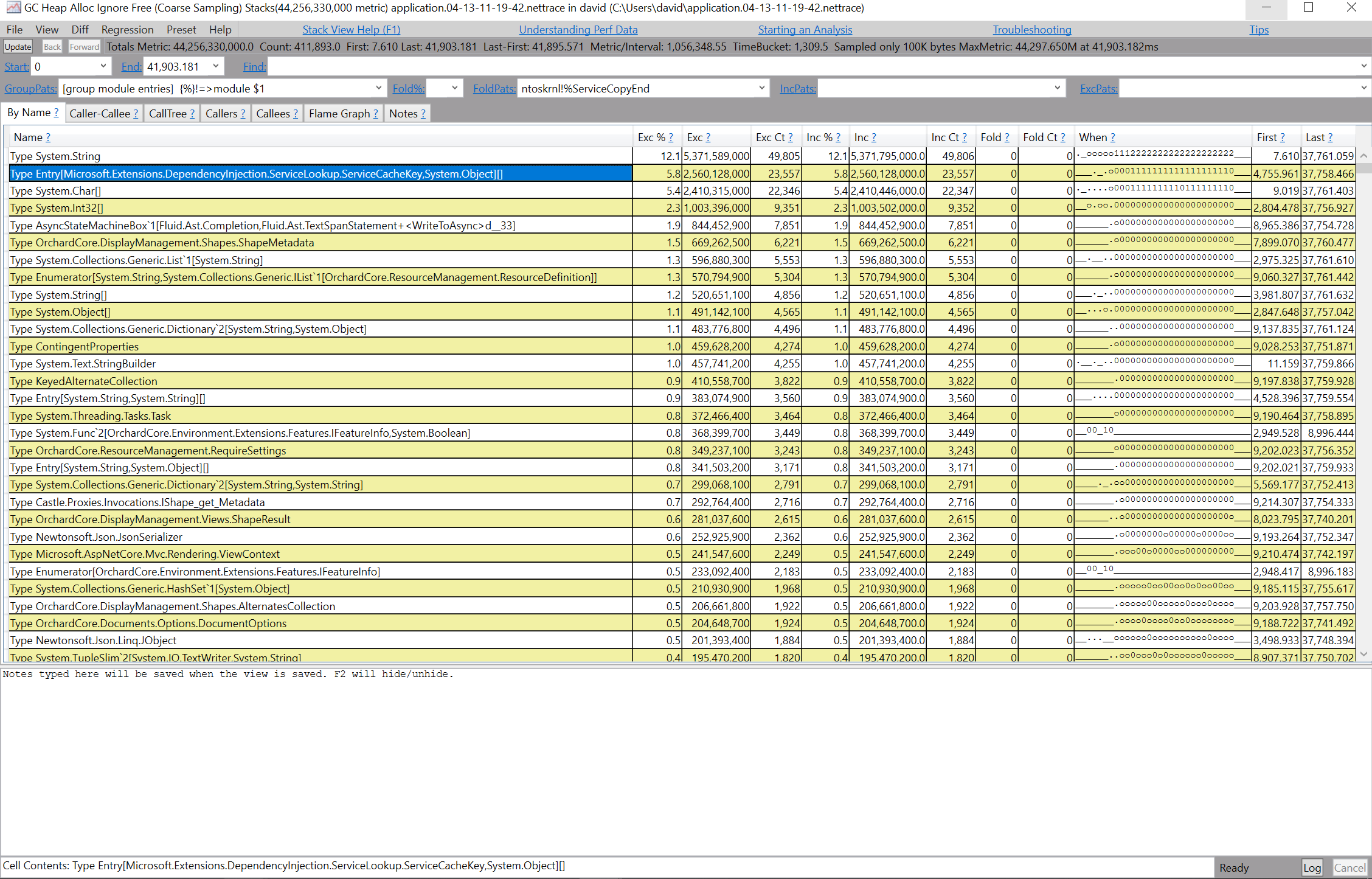

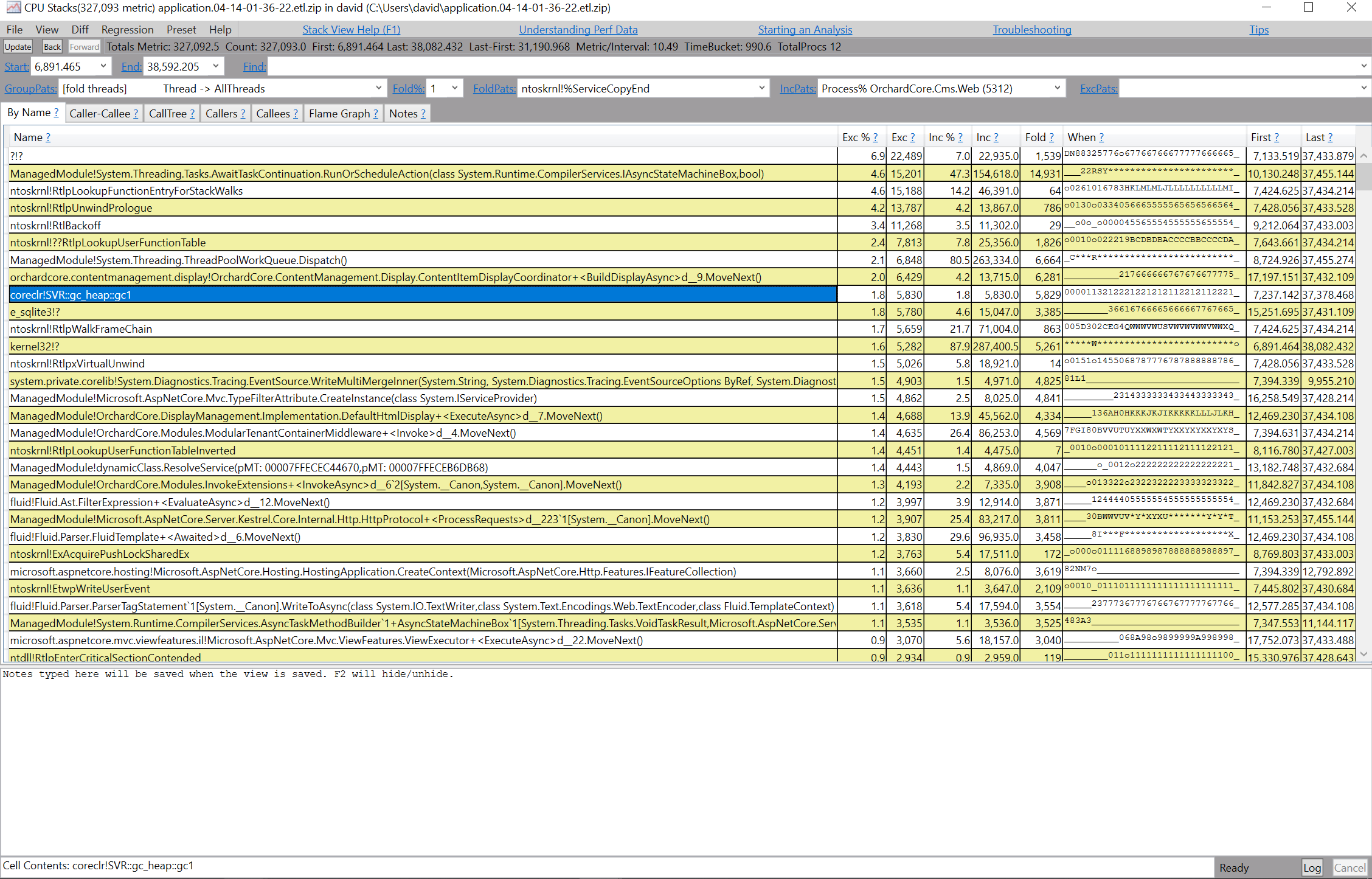

OK I did a bunch of work here to figure out if the GC is a problem in the original issue where this was filed. Initially there was an allocation profile only but without a matching CPU profile or GC trace to see whether it made sense to remove allocations. A CPU profile on the same benchmark shows this: There's 1.8% spent in the gc1 function (I have no idea what this does specifically cc @Maoni0). 1.8% isn't the end of the world in the grand scheme of things but orchard allocates a crapload of things per request and we started to look at reducing this and DI is one of the top allocations (see #47607). It's possible that orchard is "mis-uing" DI but this felt like something we should try to tackle. If that helps with the motivation. |

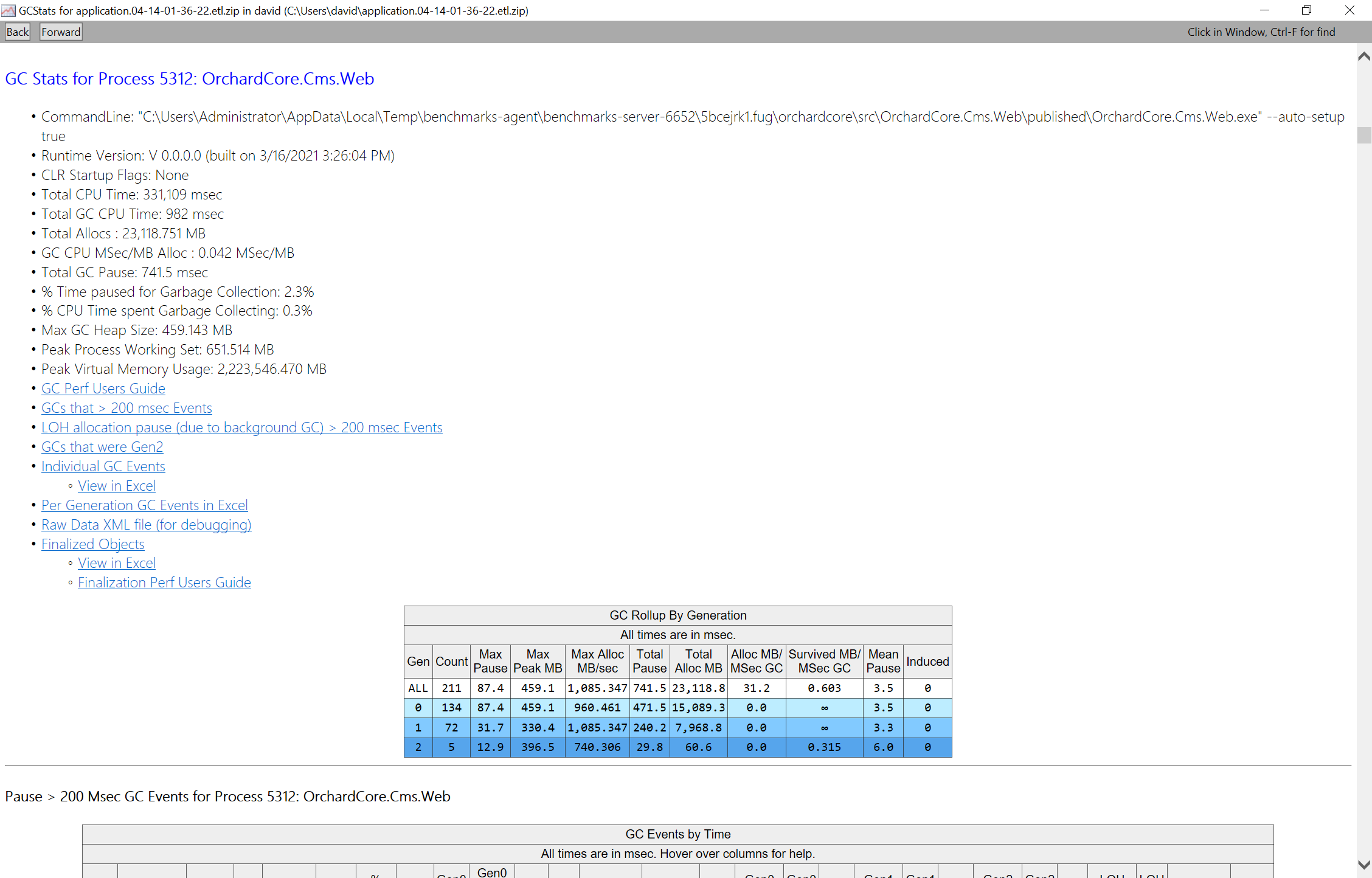

Does this pooling change actually decrease time spent in the GC? The Gen2 -> Gen0 references that this pooling introduces add more work that the GC has to do. It is not obvious to me that the reduced allocation will pay for this extra work. |

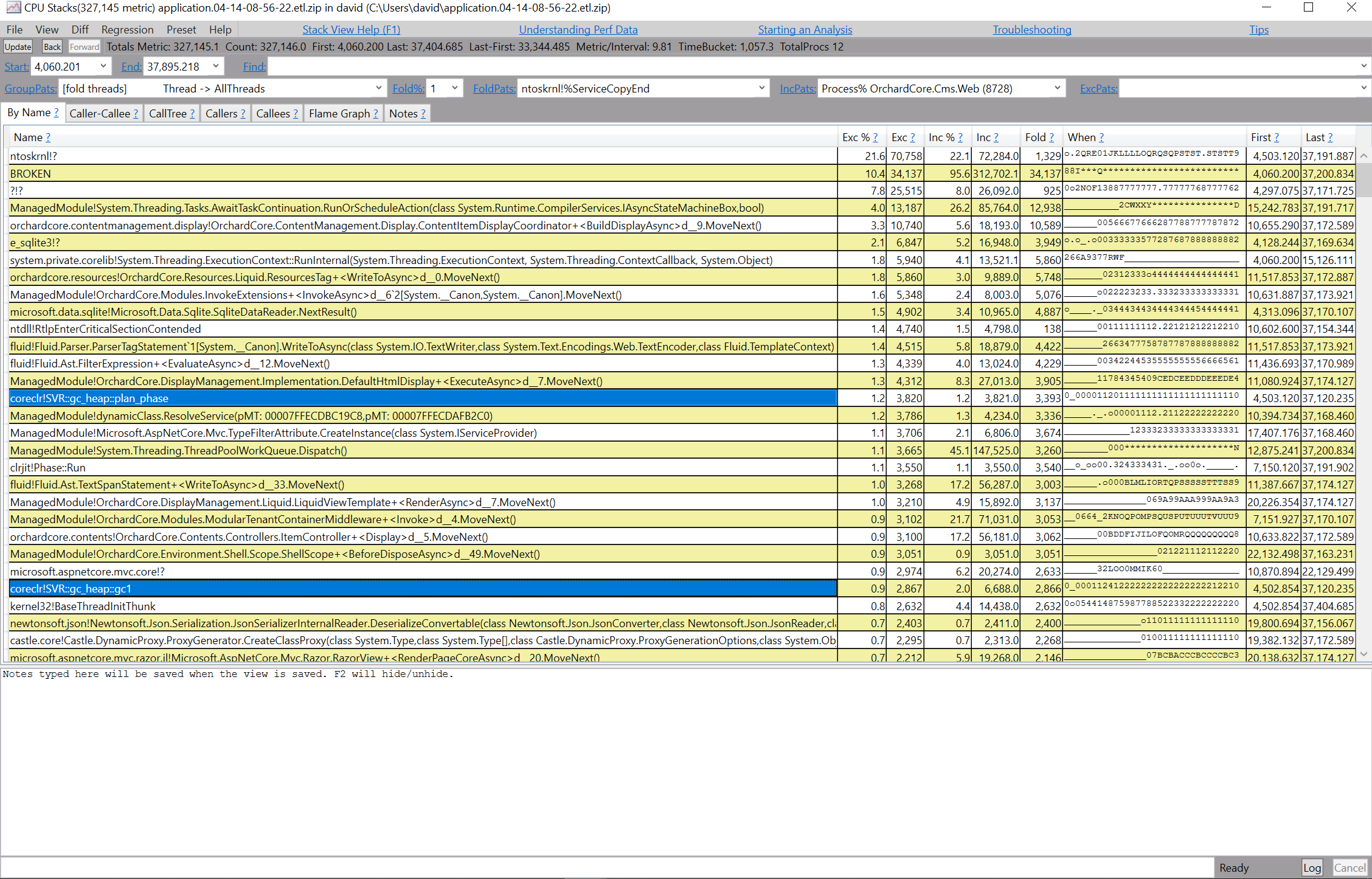

Looks worse, the number of requests isn't exactly the same, but the time looks split now between the plan phase and gc1 |

|

I removed the pooling and am currently testing out this change that keeps track of the maximum number of services resolved per scope (up to some limit). |

|

@davidfowl the profiles you showed seem odd... gc1 is the method that actually does the GC internal work and it mostly just calls other methods (that aren't inlined) so you'd see exc is usually 0 and inc is some non zero number. but yours just show the same for both... you might want to expand it callees and see what's up. if you want to see how much work GC is doing due to the generational aspect, ie, marking objects because they are held live by objects in the generations that aren't getting collecting, this explains how to do that. |

- Added an event that we can ask customers to turn on to see if scope in real applications are mostly homogeneous

… container instance.

...ies/Microsoft.Extensions.DependencyInjection/src/ServiceLookup/ServiceProviderEngineScope.cs

Outdated

Show resolved

Hide resolved

src/libraries/Microsoft.Extensions.DependencyInjection/src/ScopeTracker.cs

Outdated

Show resolved

Hide resolved

eerhardt

left a comment

eerhardt

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM - I like that we have the ability to get more information from the system now. It should help in diagnosis in the future.

Ran it on orchard: |

|

Thanks for all the feedback folks! I learned alot |

|

@Maoni0 I still have the traces so I'll spend some time looking at them and let you know what I find. |

Please do this here (I'd like to learn too...) |

linux.

Fixes dotnet/aspnetcore#31703

Citrine:

AMD