
Run MakeBinaryPackage on r6i.4xlarge EC2 instances instead of m5.4xlarge #272


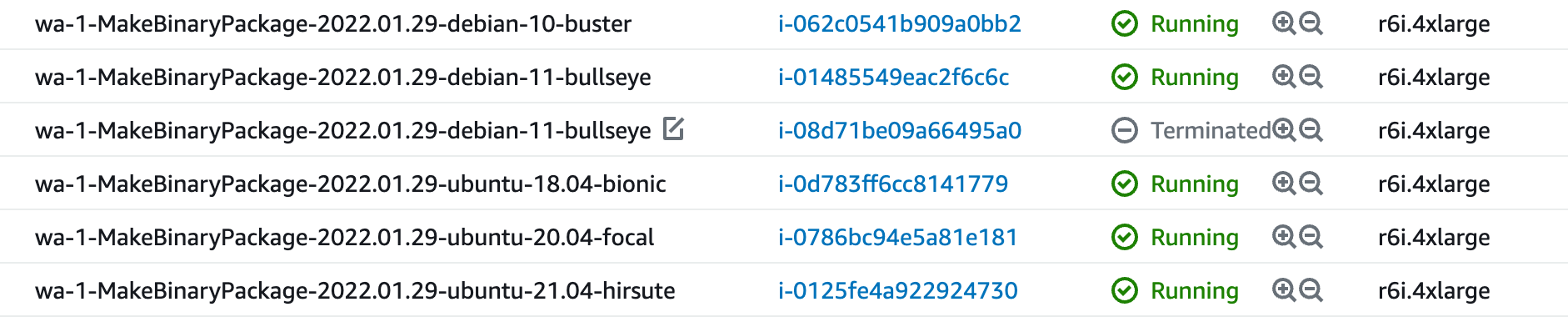

I have deployed the step functions for testing.

I triggered a previously failed build.


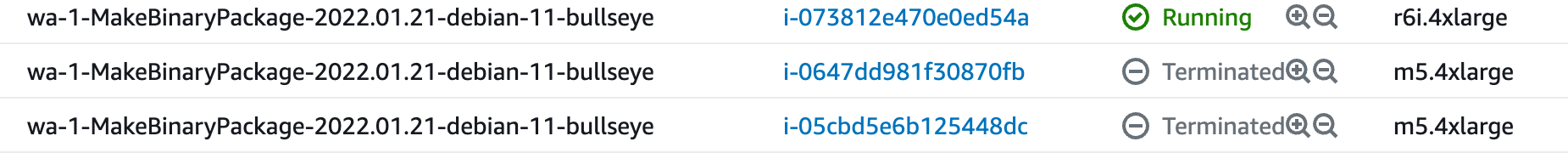

Sorry, deploying the step functions did not take effect. I deployed the lambdas instead and triggered a previously failed build, `bin/build-on-aws 2022.01.20 debian-11-bullseye`, to test this PR.


Nice, the m* used to be the high memory tier, and I assumed that had continued. Given we don't need these jobs to be super fast, we should also try lower options in the r6i range: changing the cores:RAM ratio should fix the problem; it's not just a matter of more RAM. Worth trying r6i.xlarge and seeing what the build times are like.

As a concrete example, we were building with 32GB up until August last year (a4c60f8), when some OOMs started; doubling the cores and doubling the RAM did not fix the problem.
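To make the cores:RAM point concrete, here is a rough sketch that compares memory per vCPU for the instance types mentioned in this thread. The vCPU and memory figures are taken from the AWS instance types page, not from this repository, and the helper name `gib_per_vcpu` is made up for illustration.

```python
# (vCPUs, memory in GiB) as listed on the AWS EC2 instance types page.
INSTANCES = {
    "m5.4xlarge": (16, 64),
    "r6i.4xlarge": (16, 128),
    "r6i.xlarge": (4, 32),
}


def gib_per_vcpu(name):
    """Return the memory available per vCPU, in GiB, for a known instance type."""
    vcpus, mem_gib = INSTANCES[name]
    return mem_gib / vcpus


for name in INSTANCES:
    print(f"{name}: {gib_per_vcpu(name):.0f} GiB per vCPU")
```

If the build spawns roughly one job per core, r6i.xlarge gives each job the same memory headroom as r6i.4xlarge (8 GiB per vCPU versus 4 GiB on m5.4xlarge), just with fewer jobs running at once.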

If it still needs an OOM retry, the problem still exists; with the previous settings, it sometimes succeeded with 0 retries. "Fewer retries" is an extremely low signal. I think the next step is to enable atop to figure out what's going on; I suspect cargo may be parallelizing to NCORES in combination with HHVM's own parallelization, but we need more data.


Even though it's a weak signal, we have seen fewer failures recently. Shall we merge this PR?

According to https://aws.amazon.com/ec2/instance-types/, r6i.4xlarge doubles the memory in comparison to m5.4xlarge. Hopefully it will reduce the out-of-memory errors.