Conversation

There was a problem hiding this comment.

Looks good!

I found a failed workflow run below. There are two warns about unexpected input(s), did you look into them?

Could you also send a link to a successful workflow run example?

| - name: Generate test Ion Data | ||

| run: java -jar target/ion-java-benchmark-cli-0.0.1-SNAPSHOT-jar-with-dependencies.jar generate -S 500 -T string -f ion_text testWorkflow.ion | ||

| run: | | ||

| mkdir -p testData |

There was a problem hiding this comment.

Quick questions since there is a -p option here. Is it possible that the directory (e.g., testData) has already existed so we used -p to avoid failures here?

There was a problem hiding this comment.

Quick questions since there is a

-poption here. Is it possible that the directory (e.g., testData) has already existed so we used-pto avoid failures here?

Yes, because it's the start of the workflow and no existing directory, even there is the "-p" option can help to avoid failure.

| env: | ||

| regression_detect: ${{steps.regression_result.outputs.regression-result}} | ||

| if: ${{ env.regression_detect == 'false' }} | ||

| run: exit 1 No newline at end of file |

There was a problem hiding this comment.

Let's add a new line at the end of the file.

There was a problem hiding this comment.

Let's add a new line at the end of the file.

Changes will be updated

| run: | | ||

| cd benchmarkResults | ||

| result=true | ||

| for FILE in *; do message=$(java -jar /home/runner/work/ion-java/ion-java/target/ion-java-benchmark-cli-0.0.1-SNAPSHOT-jar-with-dependencies.jar compare --benchmark-result-previous $FILE/previous.ion --benchmark-result-new $FILE/new.ion --threshold /home/runner/work/ion-java/ion-java/tst/com/amazon/ion/benchmark/threshold.ion $FILE/report.ion | tee /dev/stderr) &&if [ "$message" != "Regression not detected" ]; then result=false;fi; done |

There was a problem hiding this comment.

&&if->&& if

Changes will be updated.

| cd benchmarkResults | ||

| result=true | ||

| for FILE in *; do message=$(java -jar /home/runner/work/ion-java/ion-java/target/ion-java-benchmark-cli-0.0.1-SNAPSHOT-jar-with-dependencies.jar compare --benchmark-result-previous $FILE/previous.ion --benchmark-result-new $FILE/new.ion --threshold /home/runner/work/ion-java/ion-java/tst/com/amazon/ion/benchmark/threshold.ion $FILE/report.ion | tee /dev/stderr) &&if [ "$message" != "Regression not detected" ]; then result=false;fi; done | ||

| echo "::set-output name=regression-result::$result" |

There was a problem hiding this comment.

Since we used a for loop to iterate all files, is it possible to know which file(s) fail the workflow?

There was a problem hiding this comment.

Since we used a for loop to iterate all files, is it possible to know which file(s) fail the workflow?

Yes, the output message will contain these information, i.e. Including the test data and parameters we use which can help users to map with the benchmark results directory.

| path: benchmarkResults | ||

|

|

||

| - name: Clean maven dependency repository | ||

| run : rm -r /home/runner/.m2 |

There was a problem hiding this comment.

This comment is about line72,

We only cleaned up the maven dependencies above but not ion-java right? So it might not necessarily checkout the ion-java again. Do you think we could just git reset to the commit we want and then mvn clean install instead?

There was a problem hiding this comment.

This comment is about line72,

We only cleaned up the maven dependencies above but not ion-java right? So it might not necessary to checkout the ion-java again. Do you think we could just

git resetto the commit we want and thenmvn clean installinstead?

Yes, that's a good idea, changes will be updated in the following commit.

| run: java -jar target/ion-java-benchmark-cli-0.0.1-SNAPSHOT-jar-with-dependencies.jar generate -S 500 -T string -f ion_text testWorkflow.ion | ||

| run: | | ||

| mkdir -p testData | ||

| java -jar target/ion-java-benchmark-cli-0.0.1-SNAPSHOT-jar-with-dependencies.jar generate -S 5000 --input-ion-schema ./tst/com/amazon/ion/benchmark/testStruct.isl /home/runner/work/ion-java/ion-java/testData/testStruct.10n |

There was a problem hiding this comment.

Is there a specific reason to use a relative path for --input-ion-schema option but an absolute path for testStruct.10n? Using relative path for all of them seems a good idea here.

There was a problem hiding this comment.

Is there a specific reason to use a relative path for

--input-ion-schemaoption but an absolute path fortestStruct.10n? Using relative path for all of them seems a good idea here.

In the following commit, these paths will be changed to the relative paths.

Thanks for catching it, the warning will be fixed in the next commit. And the next commit will be the example of running workflow successfully. |

linlin-s

left a comment

linlin-s

left a comment

There was a problem hiding this comment.

The second commit fixed all problems mentioned in the first commit.

|

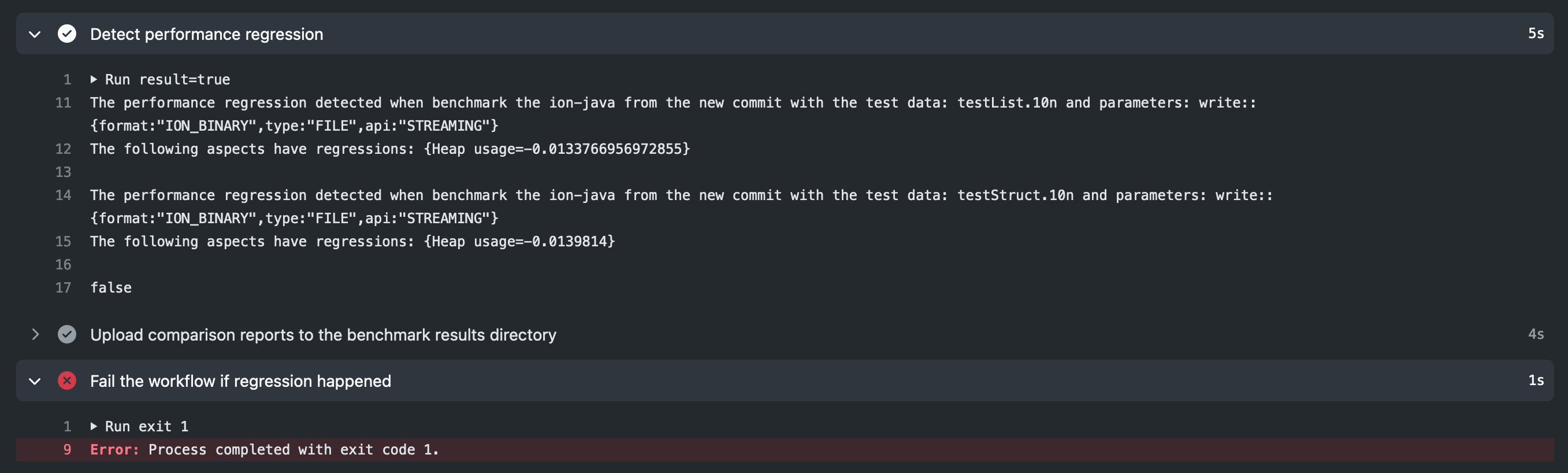

Performance detected example: https://github.com/linlin-s/ion-java/runs/3283356569?check_suite_focus=true |

Description of changes:

This PR adds the ability to detect the performance regression of ion-java from the new commit.

Review guide:

Lines 47- 56:

After building ion-java and ion-java-benchmark-cli, more steps were added to generate test Ion data which will be used in benchmark process and be uploaded to artifacts.

Lines 58 - 67:

Get benchmark results of ion-java from the existing commit by using the test data generated from the last step and save these results into one directory named benchmarkResults, then upload the directory to artifacts.

Lines 92 - 107:

Download the data from artifacts and save them into the directory with the same file name as the one during the uploading process.

Lines 109 - 116:

Get benchmark results of ion-java from the new commit and add them in to the existing directory benchmarkResults, then upload the directory with additional benchmark results to artifacts.

Lines 118 - 124:

Inside of the directory benchmarkResults, benchmark results from the same ion-java-benchmark-invoke will be saved to the same sub-directory named with the command line. This step will iterate all sub-directories in benchmarkResults and use API 'compare' to detect if performance regression happened. There variable $result will be set to 'false' if performance regression happened during the iteration process.

Lines 126 - 130:

After using API 'compare', one comparison report will be generated under each sub-directory in benchmarkResults. This step upload the final results with additional comparison reports to artifacts.

Lines 132 - 136:

Get variable $result from the previous step, if this value equal to 'false' then the performance regression detected, the workflow should be failed.

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license.