-

-

Notifications

You must be signed in to change notification settings - Fork 1.5k

MRG, BUG: Updated Current Source Density #7863

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

@alexrockhill can you touch the example file so we see how it looks now on the circleci artifact? |

|

Happy to help make it more efficient, etc. but we need to get the test files over to |

|

Oh wait, I see ... actually these are small enough that they can probably just stay here. I'll fix the code |

|

can you compress the files a bit? maybe ship them as npz?

|

Oof actually I didn't realize that |

|

(I will probably also just save it as |

|

+1

… |

|

@alexrockhill I pushed a commit to:

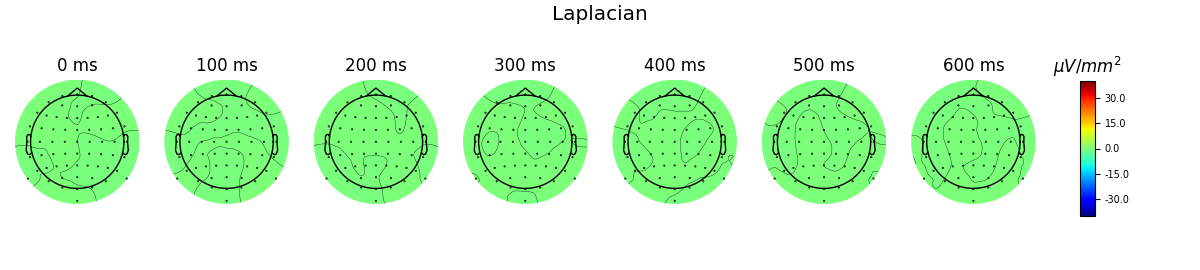

The CSD example looks bad now. Also, I noticed that the norm of the matrix On |

|

... one thing that might help would be also saving the |

Thanks so much, I am definitely not as skilled at vectorizing. I think maybe |

|

Pushed one more efficiency commit, no more redundant This might make it more difficult to debug, though, so feel free to go back to your old code for |

|

Ahh indeed |

|

But |

|

BTW you can see in the output of this example that something is broken (and that |

|

Hmmm yeah changing to mm and then changing 85 radius to 0.085 didn't work, I agree on it not being scale invariant. Not sure what's going on here though. |

|

Although the tests are passing and the evoked data is the same, when I went back and plotted this example https://github.com/alberto-ara/Surface-Laplacian/blob/master/surface%20laplacian%20example.ipynb the topomap it was uniform... I think the tests aren't covering the issue for some reason |

|

Maybe the Also, is it possible that the electrode locations are supposed to be projected onto a sphere? They currently are not, unless by happenstance you are using a spherical montage with zero origin. |

|

... based on code comments for the old |

|

Okay this might have fixed things, let me push... |

|

Ahh I think you're right the the error tolerance is meaningless. The scale of the example data is 1e-6 to 1e-7 so etol would have to be much lower. |

|

If I go back to c2586be (when you opened the PR) and fix the path problems and use a sensible So maybe go back to that commit and fix things first? Then you can force-push and I can cherry-pick my commits on top... |

|

Totally my fault I just realized the test data is likely the before data and not the after laplacian data because of a very stupid switching return error, my bad!!! |

|

We can update the testing data to fix it, if you want to work from c2586be and push-force over my changes I can fix the testing data. But we should wait until we have something that works with a reasonable |

|

Ok I plotted and double checked and that should be the right comparison csd data and everything should hopefully make more sense in a minute. |

|

If the CSV you pushed is the one to compare against and it passes on c2586be, I can take care of the rest of the annoying steps. |

|

... with that file on top of c2586be I get: So hopefully there is more to come and I just should have been more patient :) |

|

I can go back and fix tests, sorry I may need a bit of time to clean it all up from where it worked |

|

Sounds good, as I said feel free to force push over my changes, it's possible that my calculations are not equivalent since I was assuming the tests would tell me if something broke. Once you have something that matches, knowing the efficiency bits and the project-to-sphere-for-cosine-distance things I think I can reincorporate my stuff fairly easily! |

|

Here is the relevant code from the original MATLAB source as far as I can tell DetailsJust so that this is here as reference. |

|

(FYI, fortunately we got permission from Jurgen a couple of years ago to relicense his implementation under BSD, otherwise we could not use / look at this snippet because of the GPL license) |

Great I think Dennis had mentioned that earlier. Thanks so much for finishing everything @larsoner! It might be worth also citing:

The formatting for that looks complex, I'll have to look into the documentation on how citations are done now. |

|

Just add entries like I did already in this PR. It's standard bibtex entries, should be easier rather than more difficult |

|

Ok doing now. Where are you on projecting onto the sphere or not? Is it just preliminary commenting it out? |

| # from scipy.spatial.distance import squareform, pdist | ||

| # cos_dist = 1 - squareform(pdist(pos, 'sqeuclidean')) / 2. | ||

| # Project onto the sphere and do the distance (effectively) | ||

| pos /= np.linalg.norm(pos, axis=1, keepdims=True) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@alexrockhill this implicitly projects to a unit sphere. It preserves the phi/theta but sets r=1, which is the same thing as doing cart2sph, set r=1, then sph2cart...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ok so ideally the sphere would have the radius of the head? Is that what's done in the original?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In Mike and Jurgen's code they both effectively do this (at least for a spherical montage it's equivalent), and based on computing a cosine similarity I think it's the right thing to do.

Mike never uses an actual radius, but Jurgen does (divides by head * head effectively), which conceptually makes sense to me.

|

Okay @alexrockhill I think I'm happy with our code now, having read through Mike's and Jurgen's code. |

FIX: Try again ENH: More efficient FIX: Test broken but ex works better [skip travis]

|

Sorry @alexrockhill I had to rebase, so while I was in there I squashed our commits, hope that's okay. This one can be rebase+merged to preserve history if whoever hits the green button can remember to do so... |

Of course, that's great. Thanks for doing the hard parts :) |

|

Thanks @alexrockhill ! |

|

@larsoner you merged this without squashing so we now have in the history the big CSV files that were pushed by @alexrockhill in the first commits.... grrrrr I saw this as it required 15s for me to fetch master :( rewriting history to remove these files may put a mess on the current PRs... @larsoner wdyt? |

|

0f8788f is the commit now in master |

|

Argh sorry forgot about those. The rebase and merge was intentional to preserve code history/blame, but I forgot about the file commits. Thanks for push forcing to fix it |

|

@cbrnr @larsoner @drammock @alexrockhill @hoechenberger @GuillaumeFavelier FYI I force pushed to master to remove the 60MB of csv files that were commited by mistake from this PR. I hope it does not create too much of a mess on your PRs.... |

Addresses #7862.

Passes all tests and matches example from https://github.com/alberto-ara/Surface-Laplacian but testing data needs to be added to mne-testing-data and some code review would be appreciated. I think the for loops could or mostly all be changed to matrix multiplication or called through numpy/scipy but I'll need to look into that. Thanks @mservantufc for bringing this to attention. It appears as if the previous version was not working.

Maybe @larsoner and/or @jasmainak might have a minute to review since they were nice enough to help me with the original PR :) ?