-

Notifications

You must be signed in to change notification settings - Fork 150

Operator status revisions squash #142

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Operator status revisions squash #142

Conversation

| operatorsv1 "github.com/openshift/api/operator/v1" | ||

| "github.com/openshift/library-go/pkg/operator/v1helpers" | ||

| ) | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Detailing out the purpose behind the status/conditions here to ensure we get it right.

|

/retest |

|

I think tests are frozen right now on: |

|

/retest |

|

|

|

/retest |

|

Network operator failed in one test, Console in the other. Not sure if flakes or related.

|

|

/retest |

|

no |

|

/retest |

2 similar comments

|

/retest |

|

/retest |

|

|

|

/retest |

5 similar comments

|

/retest |

|

/retest |

|

/retest |

|

/retest |

|

/retest |

67b79d7 to

09284a8

Compare

|

rebased |

| // To use when another more specific status function is not sufficient. | ||

| // examples: | ||

| // setStatusCondition(operatorConfig, Failing, True, "SyncLoopError", "Sync loop failed to complete successfully") | ||

| func (c *consoleOperator) SetStatusCondition(operatorConfig *operatorsv1.Console, conditionType string, conditionStatus operatorsv1.ConditionStatus, conditionReason string, conditionMessage string) *operatorsv1.Console { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't see how this is better than calling v1helpers.SetOperatorCondition directly. It actually seems worse to me since it would be easy to mix up the order of the arguments.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I also don't see where this is used.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Its not used at this point, its been factored out.

I'll remove it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It was a step to initially condense the inline noise:

if aBadThing {

logrus.Errorf("Bad things are happening")

// logic....

v1helpers.SetOperatorCondition(&operatorConfig.Status.Conditions, operatorsv1.OperatorCondition{

Type: BadType,

Status: BadStatus,

Reason: "ABadThing",

Message: "a bad thing",

LastTransitionTime: metav1.Now(),

})

// oh my if more than one....

// do other logic

}

// to a one-liner

setStatusCondition(operatorConfig, Failing, True, "SyncLoopError", "Sync loop failed to complete successfully")That said, I agree, it was still too many things to take care of. Thats when I moved to

// one condition or 3, still one line... not 7,14,21, etc.

co.ConditionABadThing(operatorConfig)There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The right way to do this is probably for:

// operator.go ideally could deal with all the status stuff, when it calls sync, and not have it

// scattered across multiple files:

structuredThing, err := sync_v400()

// that we could instead handle it all in one place in operator.go

handleConditions(structuredThing, err)Tech debt...

| v1helpers.SetOperatorCondition(&operatorConfig.Status.Conditions, operatorsv1.OperatorCondition{ | ||

| Type: operatorsv1.OperatorStatusTypeFailing, | ||

| Status: operatorsv1.ConditionFalse, | ||

| LastTransitionTime: metav1.Now(), |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ideally we'd add messages to all these, but OK as a follow on.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Failing:False is the desired state, along with Progressing:False and Available:True. I assumed if in the desired state no other information should be needed, but I'm open to adding info if we feel it is necessary or helpful.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I assumed if in the desired state no other information should be needed, but I'm open to adding info if we feel it is necessary or helpful.

I think it would make things more clear if we add a message saying things are good. Particularly because you have to think through a double negative like failing: false. The messages are displayed on the cluster settings page in the UI.

(For a follow on)

| return operatorConfig | ||

| } | ||

|

|

||

| func (c *consoleOperator) ConditionResourceSyncSuccess(operatorConfig *operatorsv1.Console) *operatorsv1.Console { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How is this different than ConditionNotFailing?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It is not at this point. Initially I was considering putting the rest of this logic (sync_v400 line 110) in this function as it may have been necessary to set more than one status.

I may be willing to eliminate this wrapper as I believe it won't be a "set multiple statuses" kind of function.

0787a25 to

fdc153a

Compare

fdc153a to

873f74b

Compare

|

/retest |

2 similar comments

|

/retest |

|

/retest |

spadgett

left a comment

spadgett

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

/lgtm

| // To use when another more specific status function is not sufficient. | ||

| // examples: | ||

| // setStatusCondition(operatorConfig, Failing, True, "SyncLoopError", "Sync loop failed to complete successfully") | ||

| func (c *consoleOperator) SetStatusCondition(operatorConfig *operatorsv1.Console, conditionType string, conditionStatus operatorsv1.ConditionStatus, conditionReason string, conditionMessage string) *operatorsv1.Console { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We should remove if unused, but we can do that as a follow on if this passes CI.

| // the operand is in a transitional state if any of the above resources changed | ||

| // or if we have not settled on the desired number of replicas | ||

| if toUpdate || actualDeployment.Status.ReadyReplicas != deploymentsub.ConsoleReplicas { | ||

| co.ConditionResourceSyncProgressing(operatorConfig, "Changes made during sync updates, additional sync expected.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We might revisit this message, but OK for now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Agree, its not the best message. Progressing is quite fast, so its unlikely to be seen, but still, can def revisit.

|

[APPROVALNOTIFIER] This PR is APPROVED This pull-request has been approved by: benjaminapetersen, spadgett The full list of commands accepted by this bot can be found here. The pull request process is described here DetailsNeeds approval from an approver in each of these files:

Approvers can indicate their approval by writing |

/retest |

|

/retest Died before generating any artifacts due to: |

|

/retest |

|

woohoo, one set succeeded this time... |

|

/retest |

1 similar comment

|

/retest |

|

Nice. |

/retest |

|

Bah, looked like they passed. |

Yeah, they have to run again since another PR merged to master in between |

|

e2e-aws passed, waiting on e2e-aws-operator |

|

all green! |

|

Fantastic. |

Based on #139

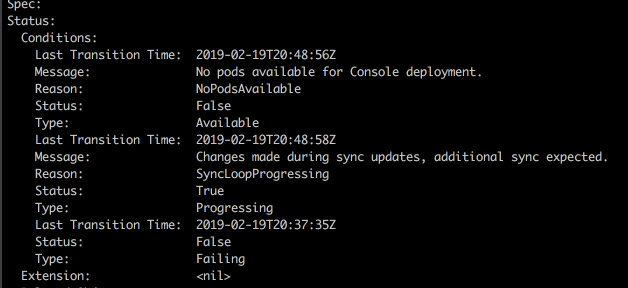

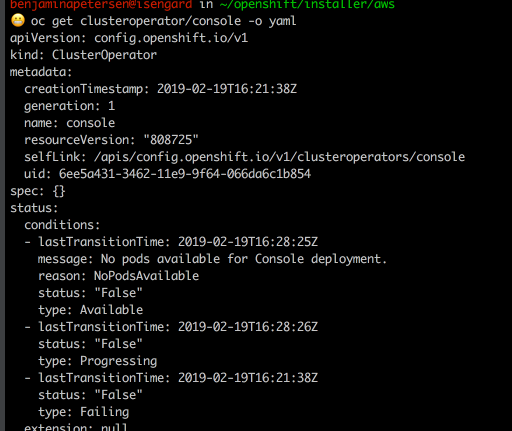

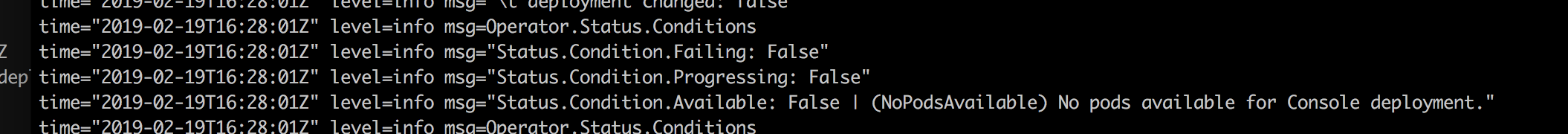

Some screenshots: