pkg/profiler: Use pool of gzip writers #1161


Maybe sending uncompressed data is OK?

It's a trade-off. If it were only compiled processes I would agree, but we also resolve perf-maps and kernel symbols, and for those types of workloads some compression is a good idea. I'd be OK with a flag to turn off compression if people want that for their setup, but I would still say the default should be to have compression on. We actually went with exactly the same thing in Prometheus.


kakkoyun

left a comment


This is very close to merging. Let's not ignore errors, and then we can merge this PR.

Thanks for this work.

pkg/profiler/profile_writer.go

    return &RemoteProfileWriter{
        profileStoreClient: profileStoreClient,
        pool: sync.Pool{New: func() interface{} {
            z, _ := gzip.NewWriterLevel(nil, gzip.BestSpeed)


Let's not ignore errors :)


It's safe enough because gzip.NewWriter() does the same thing: https://github.com/klauspost/compress/blob/master/gzip/gzip.go#L58. There will be no error since gzip.BestSpeed is a constant and a valid compression level.


I hear you, however, the underlying implementation can change, and I don't feel comfortable ignoring errors. We can change the API and return the error to the caller and let it handle it.


Oh, sync.Pool.New is a func() interface{}, so it can return either an error or a *gzip.Writer. The value returned from rw.pool.Get() can then be checked.


If there is an error, the pool would return nil and the type assertion rw.pool.Get().(*gzip.Writer) would panic, so the error will be spotted immediately. Additionally, we can pass a logger to NewRemoteProfileWriter() and log the error, so it will be easier to figure out why sync.Pool.New returned nil.


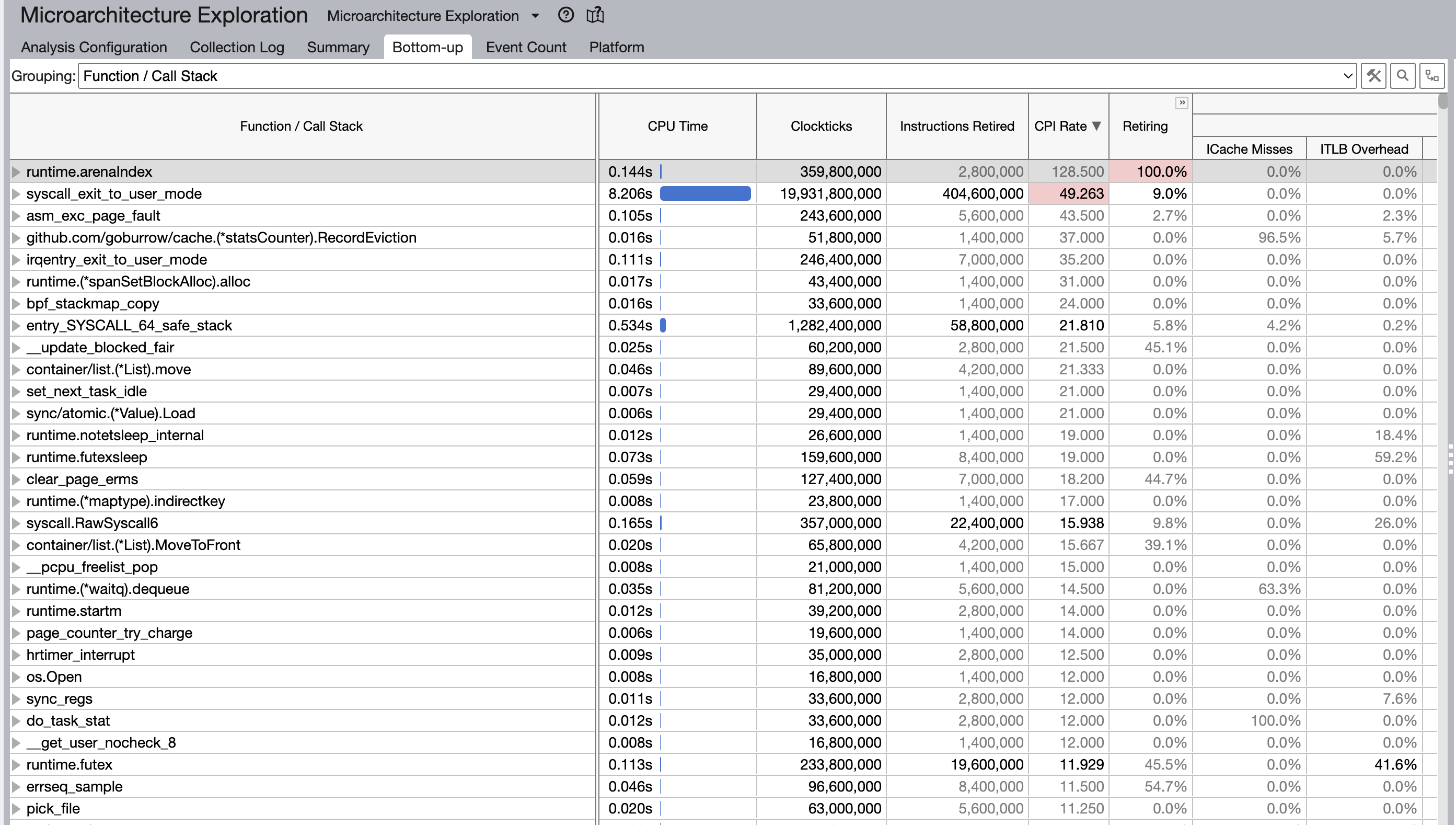



Switching to klauspost's stateless gzip compressor reduced CPU consumption by 20%, see #1065.

After profiling Parca Agent with VTune, I found an elevated CPI rate (124.4) in flate/stateless.go (see klauspost/compress#717). The author recommends using a pool of gzip writers to get a 2x-3x speed-up.

new

old

I have yet to check the CPI rate after introducing the pool.