
Description

Is your feature request related to a problem? Please describe.

This feature is related to the IPC4 refactoring. Currently, IDC on Zephyr platforms uses the Zephyr p4wq abstraction to schedule IDC messages, while IPC4 on Zephyr platforms uses the SOF `list_item` primitive backed by the Zephyr `k_work_delayable` abstraction.

Further issues with the current design:

- IPC synchronization is scattered between the Zephyr IPC driver and SOF code: we use `ipc.task_list` for messaging in SOF code, but there is also a `devdata->tx_ack_pending` variable fixing up synchronization in the `zephyr\soc\xtensa\intel_adsp\common\ipc.c` file.
- IPC operates on the `sof_task` abstraction, while IDC uses only `k_p4wq_work` as a single unit of work (single message handling).
- IPC runs on the EDF scheduler, which was never truly finished (it lacks any deadline calculation and does not provide any preemption).

Moreover, the IPC4 code for Zephyr platforms is scattered across many places, so understanding the implementation takes a long time.

Motivation for using the SOF scheduler abstraction

- IPC code now uses SOF tasks, which are tightly integrated into the common SOF IPC code (IPC3/IPC4 common parts). It would be very hard to cut the IPC task abstraction out of the common code (for IPC4/Zephyr) and replace it with something else. SOF tasks are managed by SOF schedulers.
- AMS uses the LL scheduler (`src\lib\ams.c`) for its task realization, which is technically wrong. At the time of implementation it was the only scheduler working on secondary cores. Using the new `comm_scheduler` for AMS tasks will give them a clear priority placement (the highest task priority group: AMS tasks will always be placed closest to the p4wq queue HEAD).
- Clear prioritization of all communication tasks (IPC, IDC, AMS). Right now it is not clear how those tasks are prioritized. While we can claim that IPC responses have higher priority than IPC notifications (because of how elements are added to `ipc.msg_list`), we cannot claim that AMS messages that use IDC have higher priority than IPC messages that also use IDC. We also cannot claim that IDC has higher priority than IPC, since they both use the same thread priority (`#define EDF_ZEPHYR_PRIORITY 1`) but completely separate queue implementations.
- The IPC synchronization code in the Zephyr Intel ADSP IPC driver, added in zephyrproject-rtos/zephyr#51894 ("soc: intel_adsp: ipc: Do not send message until previous one is acked"), can be removed and moved into the new `comm_scheduler` (which will make use of the `k_p4wq_work.done_sem` primitive). This way IPC messaging synchronization is handled in one place instead of being scattered between SOF and Zephyr code. To use this semaphore we need wrapper code, which conceptually fits the scheduler abstraction.
- Easy extensibility for new "system middle priority" tasks. When all communication tasks are placed into a single scheduler with clear priorities and synchronization rules, we can easily add other SOF tasks of middle system priority and decide their priority relative to the other tasks.

- The new scheduler provides an interface for task deadlines. Each task type will have its own deadline, which will be passed to the `k_p4wq_work` item.
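As a rough illustration of per-type deadlines, the standalone C sketch below gives each communication task type its own relative deadline and orders two work items. The task types, the deadline values, `struct comm_work` and both helper functions are hypothetical. The ordering policy (priority first, earliest absolute deadline second, with a lower priority value winning as with Zephyr thread priorities) is an assumption, not the confirmed behavior of `k_p4wq`.

```c
#include <stdint.h>
#include <stdbool.h>

/* Hypothetical communication task types handled by comm_scheduler. */
enum comm_task_type {
	COMM_TASK_AMS,
	COMM_TASK_IPC,
	COMM_TASK_IDC,
	COMM_TASK_TYPE_COUNT,
};

/* Hypothetical relative deadlines in microseconds, one per task type. */
static const uint32_t comm_deadline_us[COMM_TASK_TYPE_COUNT] = {
	[COMM_TASK_AMS] = 100,
	[COMM_TASK_IPC] = 500,
	[COMM_TASK_IDC] = 500,
};

/* Simplified stand-in for the scheduling fields of a work item. */
struct comm_work {
	int priority;       /* lower value = more urgent, as with Zephyr thread priorities */
	uint32_t deadline;  /* absolute deadline in microseconds (wraparound ignored) */
};

/* Fill in the work item fields for a task of the given type. */
static void comm_work_init(struct comm_work *w, enum comm_task_type type,
			   int priority, uint32_t now_us)
{
	w->priority = priority;
	w->deadline = now_us + comm_deadline_us[type];
}

/* True if a should run before b: priority first, then earliest deadline. */
static bool comm_work_before(const struct comm_work *a,
			     const struct comm_work *b)
{
	if (a->priority != b->priority)
		return a->priority < b->priority;
	return a->deadline < b->deadline;
}
```

For example, with equal priorities an AMS item queued at t = 1000 µs (deadline 1100) would be ordered ahead of an IPC item queued at the same time (deadline 1500).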

Describe the solution you'd like

Introduce a new type of SOF scheduler: `comm_scheduler`.

It would be responsible for scheduling IPC and IDC messages using Zephyr threads, priorities, preemption and deadlines.

It would be a wrapper around the Zephyr p4wq abstraction.

Each core would run its own "comm_scheduler" instance.

EDIT: In the IPC scheduling and execution refactor rev1.1 model, we decided to use a single `comm_scheduler` instance for all cores.

It will contain CORE_COUNT p4wq queues, each managed by one thread pinned to its core.

`comm_scheduler` would operate on the `sof_task` abstraction, mapping tasks to the underlying `k_p4wq_work` items managed by the p4wq queues.
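The single-instance layout from rev1.1 can be modeled in plain C to show how a task is routed to the queue of its target core. This is a simplified, standalone sketch rather than Zephyr code: each per-core p4wq queue is replaced by a ring buffer, and `struct sof_task_stub`, `comm_schedule()`, `comm_next()`, `CORE_COUNT = 4` and `QUEUE_DEPTH` are hypothetical. In the proposed design each queue would be drained by a thread pinned to its core, and ordering would follow priorities rather than plain FIFO.

```c
#include <stddef.h>
#include <stdbool.h>

#define CORE_COUNT 4   /* hypothetical core count */
#define QUEUE_DEPTH 8  /* hypothetical per-core queue capacity */

/* Minimal stand-in for an SOF task: only the fields this sketch needs. */
struct sof_task_stub {
	int core;          /* core the task must run on */
	const char *name;
};

/* Stand-in for one per-core p4wq queue (a plain FIFO ring buffer here). */
struct core_queue {
	struct sof_task_stub *items[QUEUE_DEPTH];
	size_t head;
	size_t count;
};

/* One scheduler instance shared by all cores, with one queue per core. */
struct comm_scheduler {
	struct core_queue queues[CORE_COUNT];
};

/* Queue a task on the queue pinned to its target core. */
static bool comm_schedule(struct comm_scheduler *sch, struct sof_task_stub *task)
{
	struct core_queue *q;

	if (task->core < 0 || task->core >= CORE_COUNT)
		return false;
	q = &sch->queues[task->core];
	if (q->count == QUEUE_DEPTH)
		return false;
	q->items[(q->head + q->count) % QUEUE_DEPTH] = task;
	q->count++;
	return true;
}

/* Called by the worker thread of `core` to take its next task, or NULL. */
static struct sof_task_stub *comm_next(struct comm_scheduler *sch, int core)
{
	struct core_queue *q;
	struct sof_task_stub *task;

	if (core < 0 || core >= CORE_COUNT)
		return NULL;
	q = &sch->queues[core];
	if (q->count == 0)
		return NULL;
	task = q->items[q->head];
	q->head = (q->head + 1) % QUEUE_DEPTH;
	q->count--;
	return task;
}
```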

The new scheduler would run on a single Zephyr preemptible thread with priority index 0.

This would place it in the overall system as the "middle priority tasks" scheduler, since the LL schedulers run on Zephyr cooperative threads with priority index < 0, while the new DP scheduler uses priority index > 0.
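The placement can be expressed as a small C model, assuming Zephyr's rules that negative priorities are cooperative, that a numerically lower priority wins, and that a cooperative thread is not preempted by other threads. The LL and DP priority values here are examples only, and `classify_priority()` and `can_preempt()` are hypothetical helpers; time slicing between equal-priority threads is not modeled.

```c
#include <stdbool.h>

/* Example priority indices mirroring the layout described above.
 * Zephyr convention: negative = cooperative, >= 0 = preemptible,
 * and a numerically lower value means a higher priority. */
#define LL_SCHED_PRIORITY   (-1) /* example cooperative LL thread priority */
#define COMM_SCHED_PRIORITY 0    /* proposed comm_scheduler thread */
#define DP_SCHED_PRIORITY   1    /* example DP thread priority */

enum sched_class { SCHED_LL, SCHED_COMM, SCHED_DP };

/* Map a thread priority to the scheduler class described above. */
static enum sched_class classify_priority(int prio)
{
	if (prio < 0)
		return SCHED_LL;   /* cooperative */
	if (prio == 0)
		return SCHED_COMM; /* highest preemptible priority */
	return SCHED_DP;
}

/* True if a thread at priority a can preempt a running thread at priority b. */
static bool can_preempt(int a, int b)
{
	/* A cooperative thread keeps the CPU until it yields or blocks. */
	if (b < 0)
		return false;
	return a < b;
}
```

In this model the comm thread preempts DP work but never interrupts an LL thread, which is the "middle priority" placement described above.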

This change would also wrap IDC messages in the `sof_task` abstraction, so they can be managed through the generic scheduler interface.

Model prepared

I prepared a model of the proposed changes.

The model consists of a class-diagram-like static description of the data structures, along with comments on their runtime use and behavior.

It describes the existing IPC scheduling mechanism and the new scheduler model.

There are also two sequence-diagram-like graphs describing flows on the `comm_scheduler` instances.

The first sequence diagram shows the scenario where an IPC message is received from the host by interrupt, the IPC target is Core0, and the response is sent.

The second sequence diagram shows the scenario where an IPC message is received from the host by interrupt and the IPC target is Core1, so the message is redirected to Core1 using IDC messaging. It also shows the new, improved synchronization mechanisms (synchronization of IPC messages with each other, and synchronization of IPC messages with IDC completion).
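The two synchronization rules from the second diagram can be sketched as a tiny state machine in C: a message to the host waits for IDC completion when the IPC was forwarded to another core, and no new message is sent until the host acks the previous one (the job `devdata->tx_ack_pending` does in the driver today). This is a hypothetical, single-threaded model that assumes IPCs arrive on Core0; `struct ipc_sync` and its helper functions are illustrative names, and the boolean flags stand in for the semaphores (such as `k_p4wq_work.done_sem`) a real implementation would block on.

```c
#include <stdbool.h>

/* Hypothetical IPC channel state tracked by comm_scheduler on Core0. */
struct ipc_sync {
	bool idc_pending;    /* IPC forwarded to another core, IDC not done yet */
	bool tx_ack_pending; /* message sent to host, host has not acked yet */
	int sent;            /* number of messages sent to the host */
};

/* An IPC for `target_core` arrives; returns true if handled on Core0. */
static bool ipc_rx(struct ipc_sync *s, int target_core)
{
	if (target_core != 0) {
		s->idc_pending = true; /* forwarded to the target core via IDC */
		return false;
	}
	return true;
}

/* The IDC work item on the target core signalled completion. */
static void idc_done(struct ipc_sync *s)
{
	s->idc_pending = false;
}

/* Try to send the next message to the host. In this simplified model it
 * is refused while IDC completion or the host ack is still outstanding. */
static bool ipc_try_send(struct ipc_sync *s)
{
	if (s->idc_pending || s->tx_ack_pending)
		return false;
	s->tx_ack_pending = true;
	s->sent++;
	return true;
}

/* The host acknowledged the last message. */
static void host_ack(struct ipc_sync *s)
{
	s->tx_ack_pending = false;
}
```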

The activity diagram describes the scenario "Received IPC host interrupt to create a pipeline on Core1". It describes the scheduler implementation in detail, presenting threads, synchronization points and the correlation of a task with its `k_p4wq_work` object.

Model revisions

IPC & IDC scheduling and execution refactor rev1.0.pdf

IPC & IDC scheduling and execution refactor rev1.1.pdf

IPC & IDC scheduling and execution refactor rev1.2.pdf

Change list between model rev1.0 and rev1.1:

- Addressed issues raised by @mmaka1 to make the diagram more readable and consistent:
  - Added information on what a thread is in the communication model, and a legend explaining the symbols.
  - In the communication diagrams, removed "interrupt context" as an object representation and replaced it with a message, as it should have been from the start.
  - Added information on budget to the communication model and the legend.

Change list between model rev1.1 and rev1.2:

- Added more elements to the class diagram to describe the static correlation of the `task` object with the `k_p4wq_work` object:
  - It turned out that there is a new requirement for task 1 from the roadmap ("Expand functionality of P4WQ so it may add tasks to the queue in the specified order"): the `k_p4wq_work` struct needs an additional `void *priv_data` field to correlate it with `task` struct items.
  - Added a new struct `comm_scheduler_task_data` that maps `struct task` to `k_p4wq_work` items.
  - Added a new function `static void comm_task_handler()`, a callback executed by `k_p4wq_work` items that contains the completion, rescheduling and synchronization logic for tasks executed at a given time.


- Added a new activity diagram that describes in detail how the implementation of the new scheduler would work:
  - The diagram describes the interaction between tasks, work items and the `static void comm_task_handler()` function.
  - The diagram shows which actions are executed on which thread, and the synchronization points (arrow colors).

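The task-to-work-item correlation from rev1.2 can be sketched with stand-in types. Here `struct work_stub` plays the role of `k_p4wq_work` with the proposed `priv_data` field, `struct task_stub` replaces SOF's `struct task`, and `run_until_two()` is an example task body; all of these, as well as the task states, are hypothetical. `comm_task_handler()` follows the role described above (completion and rescheduling), while synchronization is reduced to comments.

```c
#include <stddef.h>

/* Hypothetical task states used only by this sketch. */
enum task_state { TASK_QUEUED, TASK_RUNNING, TASK_COMPLETED, TASK_RESCHEDULED };

/* Stand-in for SOF's struct task: only what the sketch needs. */
struct task_stub {
	enum task_state state;
	int (*run)(void *data); /* returns nonzero if the task wants to run again */
	void *data;
};

struct work_stub;
typedef void (*work_handler_t)(struct work_stub *work);

/* Stand-in for k_p4wq_work with the proposed extra priv_data field. */
struct work_stub {
	work_handler_t handler;
	void *priv_data; /* proposed: points back to the owning task data */
};

/* Mapping between a task and its work item. */
struct comm_scheduler_task_data {
	struct task_stub *task;
	struct work_stub work;
};

/* Callback run for the work item: completion and rescheduling logic. */
static void comm_task_handler(struct work_stub *work)
{
	struct comm_scheduler_task_data *td = work->priv_data;
	struct task_stub *task = td->task;

	task->state = TASK_RUNNING;
	if (task->run(task->data))
		task->state = TASK_RESCHEDULED; /* the item would be re-queued here */
	else
		task->state = TASK_COMPLETED;   /* waiters would be signalled here */
}

/* Bind a task to its work item so the handler can find the task again. */
static void comm_task_init(struct comm_scheduler_task_data *td,
			   struct task_stub *task)
{
	td->task = task;
	td->work.handler = comm_task_handler;
	td->work.priv_data = td;
	task->state = TASK_QUEUED;
}

/* Example task body: counts its runs and asks to run again until 2 runs. */
static int run_until_two(void *data)
{
	int *runs = data;

	(*runs)++;
	return *runs < 2;
}
```

Invoking `td.work.handler(&td.work)` twice on a task built with `run_until_two` leaves it rescheduled after the first call and completed after the second.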

Please share your feedback on the implementation idea. In this issue I would like to discuss all possible matters and doubts before starting work on this feature. I would also like to create a reasonable roadmap of the work to be done (how to split it into PRs).

Tasks roadmap to implement the feature

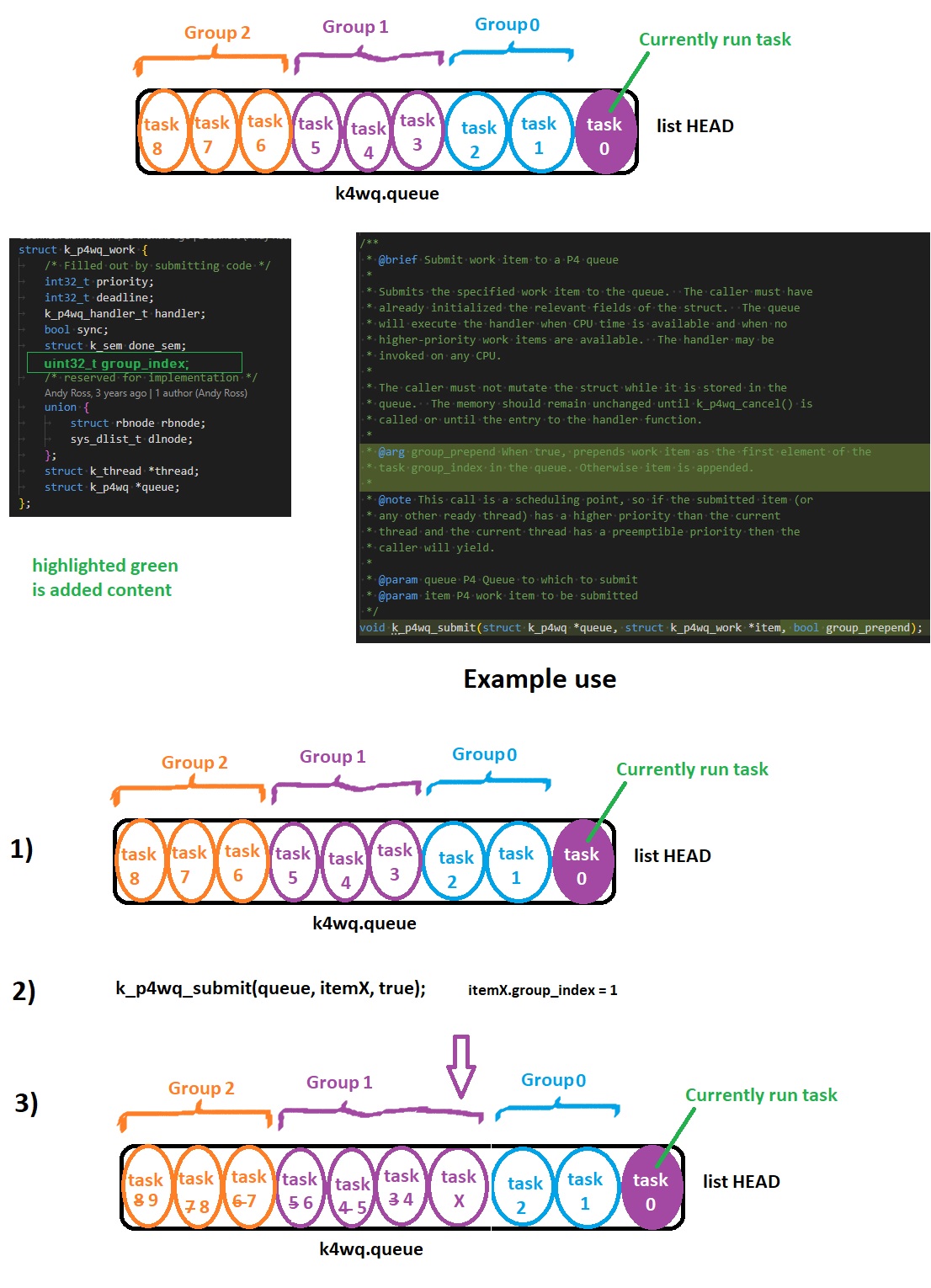

- Expand the functionality of P4WQ so it can add tasks to the queue in a specified order (by group prepend and append).
- Implement the new `comm_scheduler` along with unit tests.
- Refactor SOF IPC4 for Zephyr platforms and the Zephyr Intel ADSP IPC driver to use the new `comm_scheduler` (`src\ipc\ipc-zephyr.c` and many IPC common source files).
- Refactor IDC for Zephyr platforms (`src\idc\zephyr_idc.c`) and the Async Messages Service so they use the new `comm_scheduler`.
- Remove the EDF scheduler if there are no dependencies on non-Zephyr platforms.
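Roadmap task 1 (adding tasks to the queue in a specified order by group prepend and append) could look roughly like the C sketch below, which keeps the queue sorted by priority group and inserts an item either at the start or at the end of its group. The array-based queue, `gq_insert()` and the group enum are hypothetical; AMS is placed closest to the HEAD as proposed above, while the relative order of the IPC and IDC groups is an arbitrary example.

```c
#include <stddef.h>
#include <stdbool.h>

#define MAX_ITEMS 16 /* hypothetical queue capacity */

/* Hypothetical priority groups; a lower value sits closer to the HEAD. */
enum comm_group { GROUP_AMS = 0, GROUP_IPC = 1, GROUP_IDC = 2 };

struct item {
	enum comm_group group;
	int id;
};

struct group_queue {
	struct item items[MAX_ITEMS]; /* items[0] is the HEAD */
	size_t len;
};

/* Insert `it` into its group: prepend places it first within the group,
 * append places it last. Groups stay sorted by their enum value. */
static bool gq_insert(struct group_queue *q, struct item it, bool prepend)
{
	size_t pos = 0;

	if (q->len == MAX_ITEMS)
		return false;
	/* Skip all items of more urgent groups. */
	while (pos < q->len && q->items[pos].group < it.group)
		pos++;
	/* For append, also skip the items already in the same group. */
	if (!prepend)
		while (pos < q->len && q->items[pos].group == it.group)
			pos++;
	/* Shift the tail one slot towards the end to make room. */
	for (size_t i = q->len; i > pos; i--)
		q->items[i] = q->items[i - 1];
	q->items[pos] = it;
	q->len++;
	return true;
}
```

For example, inserting IPC 1, IDC 2 and IPC 3 with append, then IPC 4 with prepend and AMS 5 with append, yields the order 5, 4, 1, 3, 2 from HEAD to tail.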