New simplelayer HilbertTransform implemented, and wrapped in new intensity transform DetectEnvelope. #1287


1a08092 to

770b58c

Compare

|

looks like torch.fft has some breaking changes in torch1.5 - 1.7, perhaps you can use this pattern as a workaround: MONAI/monai/transforms/spatial/array.py Lines 51 to 58 in 9f51893

|

Thanks @wyli, I'll try that. There are a couple of mypy issues to fix too. Once all the tests (apart from DCO) have passed I'll rebase to a single signed commit. |

|

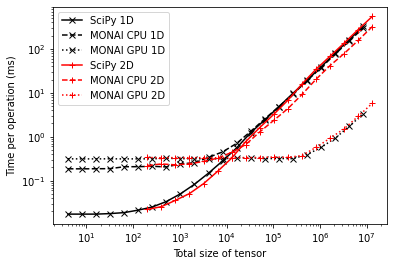

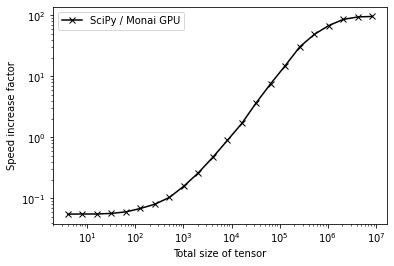

@crnbaker Nice graphs! From a quick look at it, the 10^4 performance crossing point refers to the number of pixels across the full image / tensor, rather than the size along the transformed dimension, right? Looking at the performance crossing point in terms of number of points within an A-line, does the number of lines one has in the image/tensor influence it much? If the crossing point is always around 200 samples / A-line, I guess it means the GPU implementation should be the best performing one in most real-life applications. Correct? |

|

@wyli I've used the EDIT: I think I can just use |

|

I think you can declare the type like this one MONAI/monai/transforms/spatial/array.py Line 49 in 9f51893

you could also put *in the docker |

0ab2a30 to

14bdaea

Compare

|

The current PR works fine with torch1.6+cu10.2. For torch1.7+cu11.1 it only works if I change the version conditions in the utils to:

`if tuple(int(s) for s in torch.__version__.split(".")[0:2]) <= (1, 7):  # line 22`

`if get_torch_version_tuple() > (1, 7):  # line 248` |
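To make the version comparison above concrete, here is a minimal sketch of turning a version string into a comparable tuple. The helper name `version_tuple` is hypothetical (it is not the `get_torch_version_tuple` from the repository), and it takes the string as an argument so it runs without PyTorch installed.

```python
import re


def version_tuple(version, parts=2):
    """Return the leading numeric components of a version string as a tuple.

    Hypothetical helper for illustration; suffixes such as "a0+7036e91"
    are ignored by keeping only the leading digits of each component.
    """
    numbers = []
    for piece in version.split(".")[:parts]:
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


# Tuples compare element by element, so (1, 6) < (1, 7) < (1, 10).
release = version_tuple("1.7.0")
prerelease = version_tuple("1.7.0a0+7036e91")
```

Note that with two components a pre-release build such as `1.7.0a0+7036e91` compares equal to the `1.7.0` release, so a version check alone cannot tell them apart.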

|

Is it possible for me to get the Dockerfiles so that I can set up the containers that are failing and debug on my system? |

|

that's not yet implemented, for now perhaps you could refer to the configuration, which is essentially a set of bash commands MONAI/.github/workflows/pythonapp.yml Line 149 in fd24c44

|

|

Thanks. I'm starting to realise that! Also, complex datatypes have only been recently introduced. |

|

I had another look at this at the end of last week. To debug, I need a system with PyTorch 1.6 and CUDA 11.0. There are no windows binaries available for this combination via pip or conda. There is a Docker image available, but Docker on Windows doesn't support CUDA. So I need a Linux system. I'd use WSL but that doesn't work with CUDA without installing a preview release on Windows... so I think I need to set up dual boot which I'd like to do anyway. Or as @tvercaut suggested, could we make this feature only available on PyTorch 1.7 somehow? |

|

I think we should go for pytorch 1.7+ , in the unit tests we need a new decorator similar to this one, to skip the tests on torch < 1.7 Line 69 in f01994d
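A skip decorator of the kind suggested here could look roughly like the sketch below. It is built on the standard `unittest.skipIf`; the name `skip_if_before_version` and the `INSTALLED` stand-in are assumptions for illustration, and the installed version is passed in explicitly so the example runs without PyTorch.

```python
import unittest


def skip_if_before_version(required, installed):
    """Return a decorator that skips a test when installed < required.

    Both arguments are version tuples such as (1, 7). Hypothetical helper,
    not the decorator that ended up in tests/utils.py.
    """
    return unittest.skipIf(
        installed < required,
        f"requires version {required} or later, found {installed}",
    )


# Stand-in for the installed PyTorch version (assumption for the demo).
INSTALLED = (1, 6)


class TestNeedsFft(unittest.TestCase):
    @skip_if_before_version((1, 7), INSTALLED)
    def test_fft_feature(self):
        self.fail("should have been skipped on versions before 1.7")


def run_example():
    """Run the test case above and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestNeedsFft)
    result = unittest.TestResult()
    suite.run(result)
    return result
```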

|

|

Great, thanks @wyli I'm nearly there with this now. Any suggestions on what exception should be raised if the function is called with PyTorch < 1.7.0? I could define a new exception, something like: Is there anywhere in particular this should go? |
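One possible shape for such an exception is sketched below. Only the class name `InvalidPyTorchVersionError` appears in this PR's commit message; the constructor arguments and the message wording are assumptions.

```python
class InvalidPyTorchVersionError(Exception):
    """Raised when a feature needs a newer PyTorch than the one installed.

    The (required_version, name) signature is an assumption made for this
    sketch, not necessarily what the PR implements.
    """

    def __init__(self, required_version, name):
        message = f"{name} requires PyTorch version {required_version} or later"
        super().__init__(message)


def make_example_error():
    """Build an example instance without raising it."""
    return InvalidPyTorchVersionError("1.7.0", "HilbertTransform")
```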

14bdaea to

1e7013e

Compare

|

It turns out the PT17+CU110 check uses an NVIDIA container image which is based on a pre-release version of PyTorch 1.7 (commit 7036e91 from 15th September) that doesn't yet have the FFT module. Apparently the FFT module was added sometime between then and the 1.7.0 release on 27th October. I suppose others may also be using this NVIDIA image, so I'll need to add in something that not only checks the PyTorch version, but also whether the FFT module is available. EDIT: I'll use |
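Checking for the module itself, rather than only the version number, can be sketched with the standard library as follows. The helper names are hypothetical, and the module name is passed as a string so the example runs without PyTorch; in this PR's setting the name would be `"torch.fft"`.

```python
import importlib


def module_available(name):
    """Return True if the named module (or submodule) can be imported."""
    try:
        importlib.import_module(name)
    except ImportError:
        # ModuleNotFoundError is a subclass of ImportError, so a missing
        # parent package or a missing submodule both end up here.
        return False
    return True


def require_module(name, feature):
    """Raise a RuntimeError if the module needed by a feature is missing."""
    if not module_available(name):
        raise RuntimeError(
            f"{feature} requires the module {name!r}, which is not available"
        )
```

This covers the pre-release case described above, where the version string reads 1.7.0 but the FFT module is not yet present.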

45eff8f to

41788f7

Compare

|

All sorted. Just failing the flake8 check for some reason... seems to be raising an exception related to the multiprocessing module. Have you seen that before @wyli? |

interesting, I've never seen this before I'm looking into this... |

|

not sure if this is relevant but I got these errors on mac, perhaps remove the shebang line in the file? |

8116943 to

d1b1ca0

Compare

|

/integration-test

I think it's a bug from the flake8 plugin xuhdev/flake8-executable#15, need to make the py files not executable
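Clearing the executable bits on a file can be done from Python as in the sketch below, which is equivalent in effect to `chmod a-x` on POSIX systems. The function names are hypothetical, and the demo uses a temporary file rather than any file from the repository.

```python
import os
import stat
import tempfile


def clear_executable_bits(path):
    """Remove the user, group and other execute permissions from a file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    os.chmod(path, mode & ~(stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH))


def demo():
    """Create an executable temp file, clear its bits, return the new mode."""
    fd, path = tempfile.mkstemp(suffix=".py")
    os.close(fd)
    try:
        os.chmod(path, 0o755)
        clear_executable_bits(path)
        return stat.S_IMODE(os.stat(path).st_mode)
    finally:
        os.remove(path)
```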

e576d8d to

ba5bcd9

Compare

…ed. Project-MONAI#1210. Requires torch.fft module and PyTorch 1.7.0+.
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

* New tests/utils.py unittest decorators added: SkipIfModule(), SkipIfBeforePyTorchVersion() and SkipIfAtLeastPyTorchVersion
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

* New unit tests added test_hilbert_transform.py and test_detect_envelope.py
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

* New exception InvalidPyTorchVersionError implemented in monai/utils/module.py
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

* New class HilbertTransform implemented in monai/networks/layers/simplelayers.py
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

* New class DetectEnvelope implemented in monai/transforms/intensity/array.py, uses HilbertTransform
Signed-off-by: Christian Baker <christian.baker@kcl.ac.uk>

ba5bcd9 to

aa75c5b

Compare

|

Good to go! |

wyli

left a comment


thank you!

I wasn't aware of this issue earlier, monai's docker image also uses PyTorch Version 1.7.0a0+7036e91... |

Yes and I've just seen it's failed the Docker check, which seems bizarre considering it passed with the same version of PyTorch here... I'll look into it. |

|

no problem I pushed a quick fix Lines 281 to 287 in 5c75dcb

|

Fixes #1210

Description

A new class `HilbertTransform` has been implemented in `monai/networks/layers/simplelayers.py` using the `torch.fft` module. Another new class `DetectEnvelope`, which wraps `HilbertTransform`, has been implemented in `monai/transforms/intensity/array.py`. Unit testing of `DetectEnvelope` is implemented in `tests/test_detect_envelope.py`. Documentation has been updated and tested locally. Tests have been passed locally. Commits have been squashed.

Status

Ready

Types of changes

* `./runtests.sh --codeformat --coverage`.
* `./runtests.sh --quick`.
* `make html` command in the `docs/` folder.

Details

Running on a Google CoLab VM with a GPU,

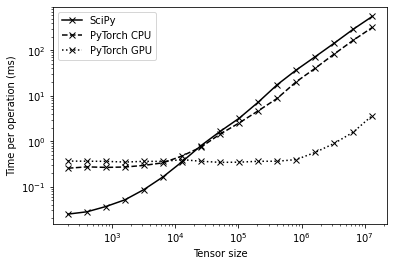

HilbertTransformis faster than the SciPy equivalent for Tensors with more than about 10^4 elements:Notebook demonstrating this is here.

I also implemented a Hilbert transform using `torch.nn.functional.conv1d` but its performance was poor compared to the FFT implementation.
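For readers unfamiliar with the FFT approach to envelope detection, the idea can be sketched in plain Python. This is not the code from this PR: it swaps `torch.fft` for a naive O(n²) DFT from the standard library so that it runs without dependencies, and the function names are assumptions. The signal is transformed, negative frequencies are zeroed and positive ones doubled, and the magnitude of the inverse transform gives the envelope.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive discrete Fourier transform (illustration only, O(n^2))."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(
            x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n)
        )
        out.append(total / n if inverse else total)
    return out


def analytic_signal(x):
    """Return the analytic signal of a real sequence via the frequency domain."""
    n = len(x)
    if n == 0:
        return []
    # Weights: keep DC (and Nyquist when n is even), double the positive
    # frequencies, zero the negative frequencies.
    weights = [0.0] * n
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        positive = range(1, n // 2)
    else:
        positive = range(1, (n + 1) // 2)
    for k in positive:
        weights[k] = 2.0
    spectrum = dft(x)
    return dft([s * w for s, w in zip(spectrum, weights)], inverse=True)


def envelope(x):
    """Envelope of a real sequence: magnitude of its analytic signal."""
    return [abs(z) for z in analytic_signal(x)]
```

For a pure cosine that completes a whole number of cycles in the window, the envelope computed this way is constant, which makes a convenient sanity check.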