
Fix (workaround) for very slow test runs and timeouts on Travis CI #4505


```python
raise

# -1, 0 and 1+ are handled properly by eventlet.sleep
self._logger.debug('Received RabbitMQ server error, sleeping for %s seconds '
```


Having this log message here would save me a lot of time trying to track down the root cause.

On a related note: I disabled the "bandit" check since it's too slow and provides little value (it takes 10+ minutes).

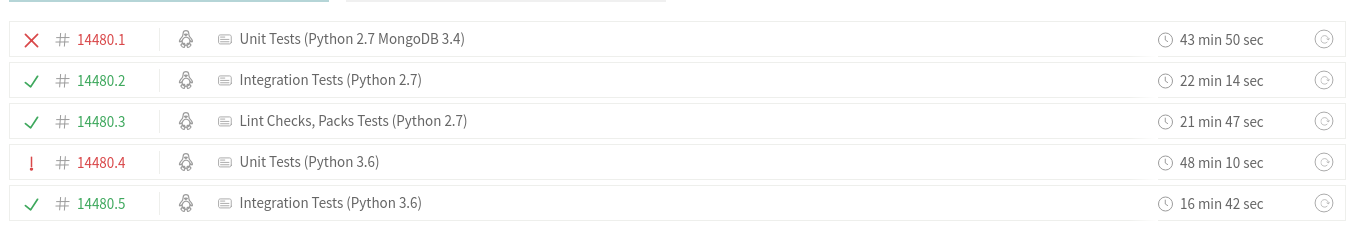

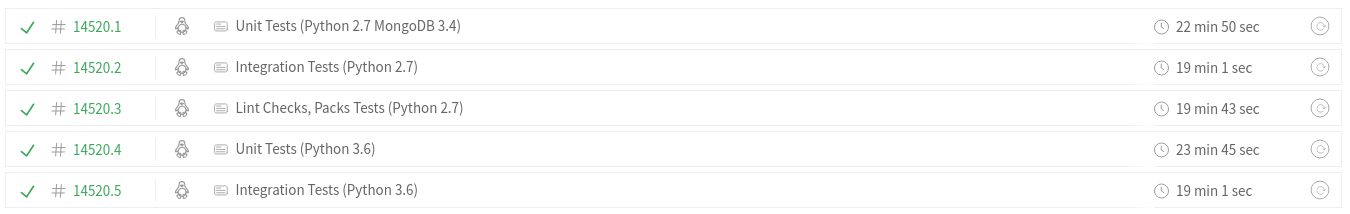

|

To give you the idea on the build timing info: Before: After: And subsequent runs should be even faster because the build will be able to utilize the dependency cache (I was also working on moving from Precise to Xenial change which nuked the dependency cache). I will go ahead and merge this once the Travis build passed to unblock myself (tons of PR and changes have been blocked because of those issues). |

arm4b left a comment:


Good to see the root cause resolved 👍

I also cherry-picked this into v2.9.3 to avoid the very slow test runs on Travis.

After spending a lot of time on Saturday and today, I believe I have finally tracked down the cause of the very slow tests on Travis.

It turns out that on Travis, RabbitMQ throws a non-fatal `Channel.open: (504) CHANNEL_ERROR - second 'channel.open' seen` error when our connection retry wrapper code is used. This causes the code to "hang" and sleep for 10 seconds here: https://github.com/StackStorm/st2/blob/master/st2common/st2common/transport/connection_retry_wrapper.py#L130 (the issue can be reproduced 100% of the time on Travis). This happens very often, so tests are up to 20 times slower (or more) on Travis than locally.
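To make the failure mode concrete, here is a minimal sketch of a retry loop in the spirit of the connection retry wrapper linked above. It is not the st2 implementation: `run_with_retries`, `ServerError`, and the injectable `sleep` parameter are hypothetical names, and `time.sleep` stands in for `eventlet.sleep`. The point it illustrates is that every non-fatal server error costs a fixed `wait` seconds before the next attempt, so a few spurious channel errors per test quickly add up.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
LOG = logging.getLogger(__name__)


class ServerError(Exception):
    """Hypothetical stand-in for a (possibly non-fatal) RabbitMQ server error."""


def run_with_retries(
    func: Callable[[], T],
    retries: int = 5,
    wait: float = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, sleeping `wait` seconds after each ServerError before retrying.

    Hypothetical sketch only; the real logic lives in
    st2common/transport/connection_retry_wrapper.py.
    """
    for attempt in range(retries + 1):
        try:
            return func()
        except ServerError:
            if attempt == retries:
                # Out of retries: surface the original error to the caller.
                raise
            # Log before sleeping so the delay is visible when debugging.
            LOG.debug("Received RabbitMQ server error, sleeping for %s seconds", wait)
            sleep(wait)
    raise AssertionError("unreachable when retries >= 0")
```

For example, an operation that hits the error twice and then succeeds spends 20 seconds sleeping, even if the operation itself takes milliseconds. Injecting `sleep` keeps the sketch testable without real delays.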

For example:

I haven't been able to track down the root cause yet (i.e., why RabbitMQ throws that error on Travis but not locally or in other environments). I tried things such as installing the latest and previous RabbitMQ server versions on Travis, but nothing made a difference. This could indicate that the issue is related to the unique networking setup on Travis VMs or something similar. The good news is that the workaround should be safe to use and appears to have no negative side effects.

It appears that this issue has been present on Travis for quite a long time, but it recently got so bad that we couldn't even get the tests to pass due to the timing issues.

The workaround is available in commits 56b216b and da9177a.
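The commits above are the authoritative version of the workaround. Purely as an illustration of the general idea, here is a hypothetical sketch: recognize the spurious error by its message and retry immediately instead of sleeping. `retry_delay` and `NON_FATAL_MARKER` are made-up names, and matching on the message text is an assumption, not necessarily what 56b216b and da9177a do. Returning 0 is safe to pass to the sleep call, since the diff comment notes that eventlet.sleep handles -1, 0 and 1+ properly.

```python
# Hypothetical: substring of the non-fatal error RabbitMQ reports on Travis.
NON_FATAL_MARKER = "second 'channel.open' seen"


def retry_delay(error: Exception, default_wait: float = 10) -> float:
    """Return how many seconds to sleep before retrying after `error`.

    Hypothetical sketch: the spurious CHANNEL_ERROR is retried immediately
    (0 seconds); any other server error keeps the normal delay.
    """
    if NON_FATAL_MARKER in str(error):
        return 0
    return default_wait
```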

I also have some other "related" PRs open (such as switching from Precise to Xenial on Travis), which I plan to finish now that this fix is in place.