
Split installation of sdist providers into parallel chunks #29223


This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.

Not stale

101d6a8 to 2a09c18

2a09c18 to f8f243d

This one speeds up one of the longest CI jobs we have on ... Installing sdist packages has always been super-slow (and recent ... However, since we only want to see if provider ... This way we can install all sdist packages 5 times faster on a self-hosted machine with 8 CPUs (it's not 8x faster because of some common overhead repeated for each one: we install airflow from the sdist package in each of the containers).

This job only runs on self-hosted runners, so it will have no impact on non-committer PRs. But once we speed up the remaining Helm unit test, which is very slow as well, this will give faster feedback for committer PRs and faster builds for main "canary" builds (and some 20 minutes of build-time cost for those).
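The point about repeated overhead can be illustrated with a rough model. This is only a sketch under assumptions that are not from the PR: each container pays the same fixed overhead (installing airflow from sdist), the provider work is split evenly, and the function name `parallel_speedup` and the numbers used are hypothetical.

```python
def parallel_speedup(per_container_overhead: float, total_work: float, containers: int) -> float:
    """Estimate elapsed-time speedup when work is split evenly across containers.

    Assumes every container repeats the same fixed overhead before doing
    its share of the work, and that all containers run fully in parallel.
    """
    if containers < 1:
        raise ValueError("containers must be at least 1")
    sequential = per_container_overhead + total_work
    parallel = per_container_overhead + total_work / containers
    if parallel <= 0:
        raise ValueError("overhead and work must add up to a positive duration")
    return sequential / parallel


# Hypothetical numbers: 1 unit of overhead, 7 units of provider work, 8 containers.
print(round(parallel_speedup(1.0, 7.0, 8), 2))  # 4.27
```

With zero overhead the same model gives exactly 8x for 8 containers, so any fixed per-container cost pulls the result below the CPU count.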

|

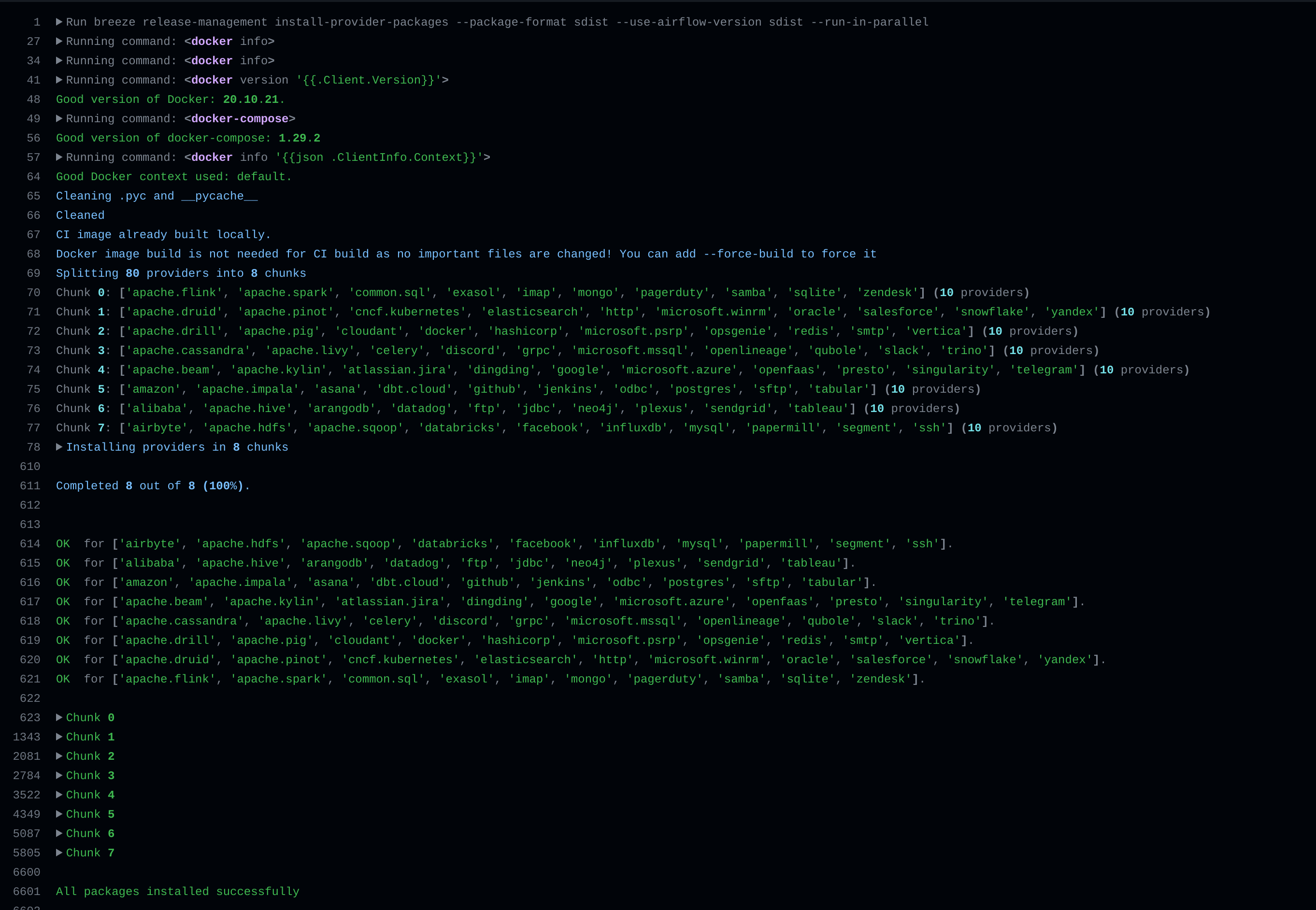

This is how it will look like (https://github.com/apache/airflow/actions/runs/4714228972/jobs/8360483236): |

f8f243d to d9b5c8c

We also need this one to get feedback improvements. And cc: @hussein-awala, regarding your doubts from #30672: this one has only a positive impact. It just uses the multiple CPUs of our self-hosted runners much more effectively: we get close to 100% utilisation thanks to splitting the sdist installation into chunks and parallelising them in multiple containers. Pretty much the same "build time", but with a 5x decrease in "elapsed time".

I would appreciate getting this one merged. It will speed up some of the main builds and constraint refreshing. I think sdist and #30672 are the ones that delay (and prevent completion of) quite a number of those complete "canary" builds (because the jobs are cancelled by subsequent merges), and merging those will make our cache and constraints refresh more often.

f46c942 to 869f9aa

Last speedup for CI in the current series.

30b4df4 to 87a1471

1a7c363 to 7b3e734

Conflict after merging #30705 resolved.

Really nice speedup: from 20 to 6 mins without any other impact (just better use of multiple CPUs). I hope to get a quick review on that one :)

d7c9db8 to a119984

Sdist provider installation takes a lot of time because pip cannot parallelise sdist package building. But we still want to test the installation of all our providers as sdist packages. This can be achieved by running N parallel installations, with only a subset of providers installed in each chunk. This is what we do in this PR.
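The chunking idea described above can be sketched roughly as follows. This is a minimal illustration and not the code from this PR: the helper names `split_into_chunks` and `install_in_parallel` are hypothetical, and `install_chunk` is a placeholder callback standing in for whatever actually installs one subset of providers (for example in its own container).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def split_into_chunks(items: Sequence[str], chunk_count: int) -> list[list[str]]:
    """Distribute items round-robin into at most chunk_count non-empty chunks."""
    if chunk_count < 1:
        raise ValueError("chunk_count must be at least 1")
    chunks = [list(items[i::chunk_count]) for i in range(chunk_count)]
    # Drop empty chunks when there are fewer items than requested chunks.
    return [chunk for chunk in chunks if chunk]


def install_in_parallel(
    packages: Sequence[str],
    chunk_count: int,
    install_chunk: Callable[[list[str]], bool],
) -> bool:
    """Call install_chunk once per chunk concurrently; True only if all succeed."""
    chunks = split_into_chunks(packages, chunk_count)
    if not chunks:
        return True
    # Threads are enough here on the assumption that install_chunk mostly
    # waits on an external process doing the actual installation.
    with ThreadPoolExecutor(max_workers=len(chunks)) as pool:
        results = list(pool.map(install_chunk, chunks))
    return all(results)
```

Every package still gets installed exactly once, so the test coverage stays the same; only the elapsed time changes, and a failure in any chunk fails the whole run.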

a119984 to 51d701b

hussein-awala left a comment

Looks good to me, LGTM

