-

Notifications

You must be signed in to change notification settings - Fork 16.4k

DynamoDBToS3Operator - Add feature to export table to a point in time #30501

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

@eladkal @o-nikolas Please review |

|

FYI - Test cases are failing because of the following issue - #30613 |

8fcdc46 to

1e215aa

Compare

|

Needs conflict resolving too. |

ferruzzi

left a comment

ferruzzi

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Left a couple of comments and non-blocking suggestion, but I like how this turned out. The PascalCase suggestion needs to be fixed, the other two are just thoughts you can feel free to revise or ignore.

I really appreciate your patience with the waiter shenanigans, we both learned something on that!

Co-authored-by: D. Ferruzzi <ferruzzi@amazon.com>

ferruzzi

left a comment

ferruzzi

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nice 👍

| credentials = self.hook.get_credentials() | ||

| waiter = self.hook.get_waiter( | ||

| "export_table", | ||

| client=boto3.client( | ||

| "dynamodb", | ||

| region_name=client.meta.region_name, | ||

| aws_access_key_id=credentials.access_key, | ||

| aws_secret_access_key=credentials.secret_key, | ||

| ), | ||

| ) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

❤️

|

If the CI is happy, I'm happy 👍 Thanks for sticking with this one. |

|

CI is not happy. @utkarsharma2 -> you need to fix those . |

|

Hey @potiuk, CI is happy now :) |

|

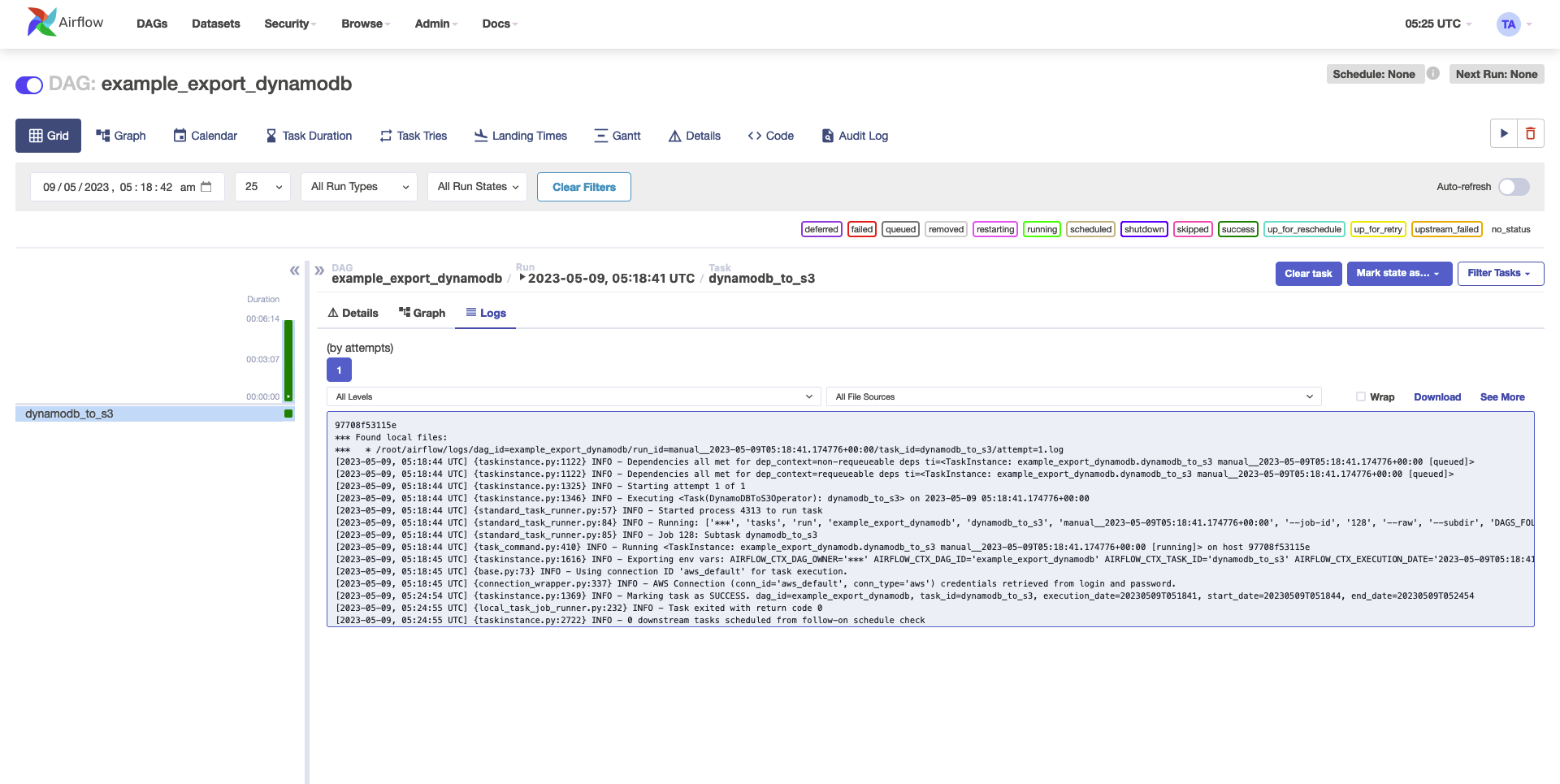

@utkarsharma2 Did you actually try this live? After the merge, the system test fails because this addition is missing the file_size paramter, and after adding that in it fails with the message Here you are passing |

… in time (apache#30501)" This reverts commit fc41661.

|

@ferruzzi Yes I did test it locally, I was passing table ARN in place of the table name, and file_size but forgot to add it in the example :/ |

|

@ferruzzi @o-nikolas Raised a new PR with the Fix Please review - #31142 |

|

Cool, thanks! I'll take a look later today when I get a moment 👍 |

DynamoDBToS3Operator - Add a feature to export the table to a point in time.

closes: #28830