-

Notifications

You must be signed in to change notification settings - Fork 16.4k

DynamoDBToS3Operator - Add a feature to export the table to a point in time. #31142

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

Co-authored-by: D. Ferruzzi <ferruzzi@amazon.com>

| state at this point in time. | ||

| """ | ||

| client = self.hook.conn.meta.client | ||

| table_description = client.describe_table(TableName=self.dynamodb_table_name) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Fixed TableARN issue

| backup_db_to_point_in_time = DynamoDBToS3Operator( | ||

| task_id="backup_db_to_point_in_time", | ||

| dynamodb_table_name=table_name, | ||

| file_size=1000, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Added required param.

|

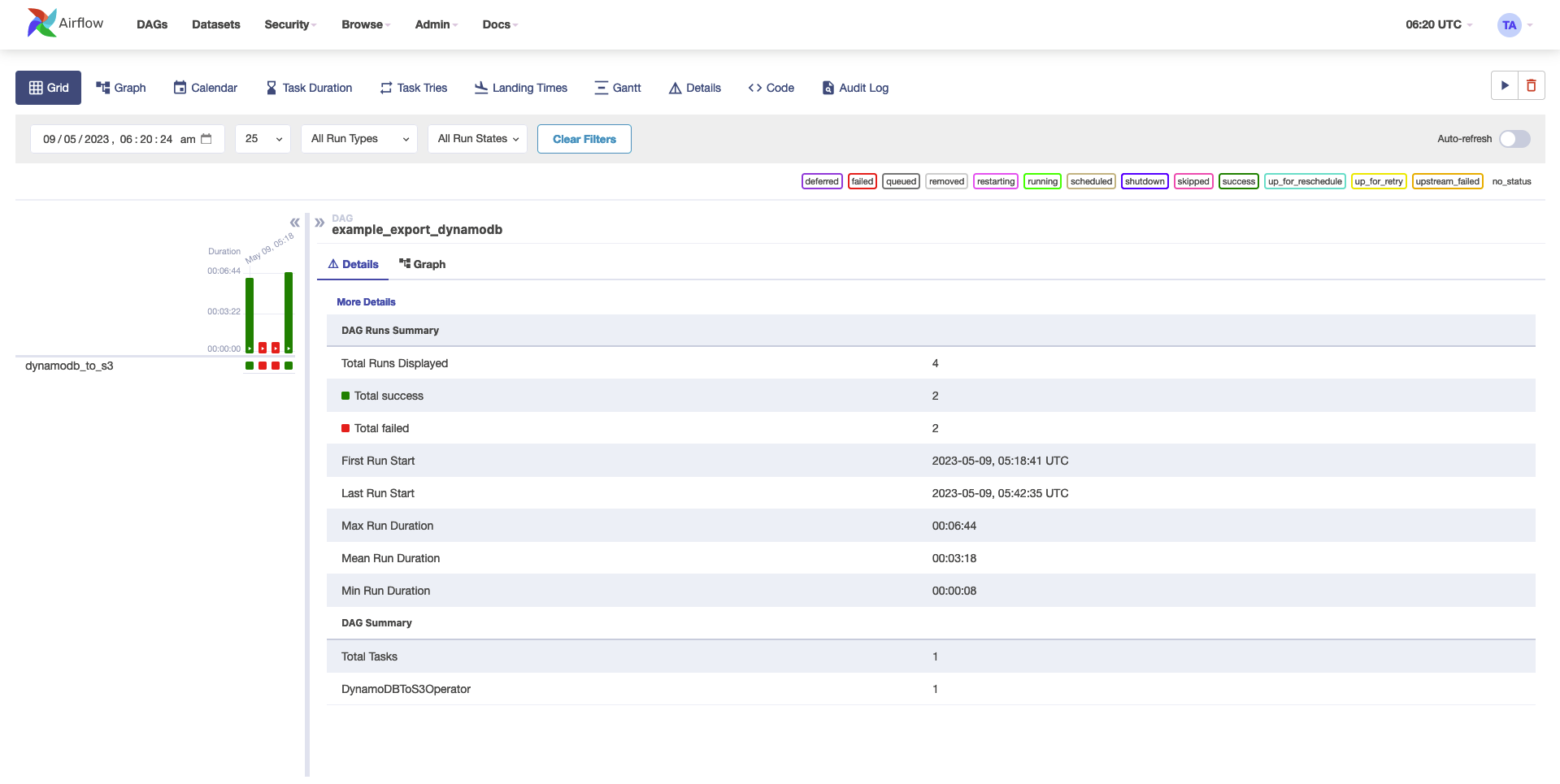

Have tested this locally with the below dag and it's working for me |

ferruzzi

left a comment

ferruzzi

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for not only taking on the fixes, but getting the done so quick. LGTM (again 🤦)

| client = self.hook.conn.meta.client | ||

| table_description = client.describe_table(TableName=self.dynamodb_table_name) | ||

| response = client.export_table_to_point_in_time( | ||

| TableArn=table_description.get("Table", {}).get("TableArn"), |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I saw your question in the other PR before I saw you already submitted this one. Glad you came tot his solution anyway :) As I mentioned over there, another possible solution would have been to use cached_property to get and store the ARN, but I don't think there is much difference in this case in the end. 👍

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1 on this one :) Thanks for your reactivity!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for a quick review. I'll be more mindful going forward with respect to system tests. 👍

o-nikolas

left a comment

o-nikolas

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the quick turnaround!

|

@utkarsharma2 System tests still failing with the mesage |

|

I'm at Open source Summit so I can't look too closely right now, but I wonder if the prefix has to start with |

|

Or if a blank prefix is viable, maybe try using airflow.helpers.prune_dict() to drop the None value |

|

@ferruzzi I was able to reproduce the issue on local and there was a missing key |

DynamoDBToS3Operator - Add a feature to export the table to a point in time.

Fixed - Now fetching the TableARN from

client.describe_table(TableName=self.dynamodb_table_name)and addedfile_sizein the example.closes: #28830