Description

Describe the bug

It is difficult to reproduce.

Suppose there are three BE nodes, A, B and C, and a single replica of tablet_1, currently on node A. Due to data balancing, tablet_1 is moved between the nodes as follows:

step1: A --> B

step2: B --> C

step3: C --> B

In step2, tablet_1 is removed from node B in the FE meta, but its data is not actually deleted on node B: the tablet is still retained in B's BE meta. When node B's tablet_report task reports to the FE master, FE compares the report with its meta, finds that tablet_1 should not exist on node B, and issues a drop task. B receives the RPC, deletes the data, and removes the tablet from its BE meta.
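The FE-side comparison in step2 can be sketched as a simple reconciliation loop. This is a hypothetical simplification, not Doris code: `reconcile_report`, `fe_meta` and the tuple-shaped drop task are invented for illustration. The fe.log line "failed add to meta ... replica is enough[1-1]" suggests FE first tries to adopt the reported replica and only issues a drop when the replica count is already satisfied, so the sketch models that as a count check.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical model: fe_meta maps tablet_id -> set of backend ids that FE
# believes hold a replica. Names are illustrative, not Doris APIs.
FeMeta = Dict[int, Set[int]]
DropTask = Tuple[str, int, int]


def reconcile_report(backend_id: int, reported: Set[int],
                     fe_meta: FeMeta, replication_num: int) -> List[DropTask]:
    """Return the drop tasks FE would send after one tablet report."""
    drop_tasks: List[DropTask] = []
    for tablet_id in sorted(reported):
        replicas = fe_meta.get(tablet_id)
        if replicas is None:
            # Tablet entirely unknown to FE: out of scope for this sketch.
            continue
        if backend_id in replicas:
            continue  # FE already expects this replica on this backend
        if len(replicas) < replication_num:
            # FE is short of replicas, so it adopts the reported one.
            replicas.add(backend_id)
        else:
            # "replica is enough": the reported copy is redundant, drop it.
            drop_tasks.append(("DROP", tablet_id, backend_id))
    return drop_tasks


# After step2, FE only expects tablet 11415 on node C (backend 3 here),
# but node B (backend 2) still reports it.
fe_meta: FeMeta = {11415: {3}}
print(reconcile_report(2, {11415}, fe_meta, replication_num=1))
# [('DROP', 11415, 2)]
```

Note that the drop task in this model carries only the tablet and backend ids, which is exactly the information gap the cause analysis points to.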

In step3, the FE master sends a clone task to node B; B receives the RPC, performs the clone, and adds tablet_1 to its BE meta once the clone completes.

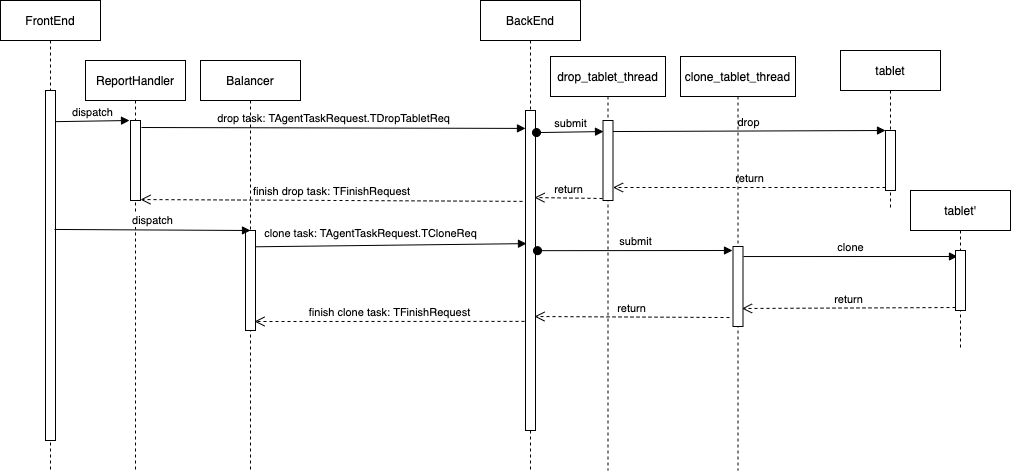

The normal flow is as follows.

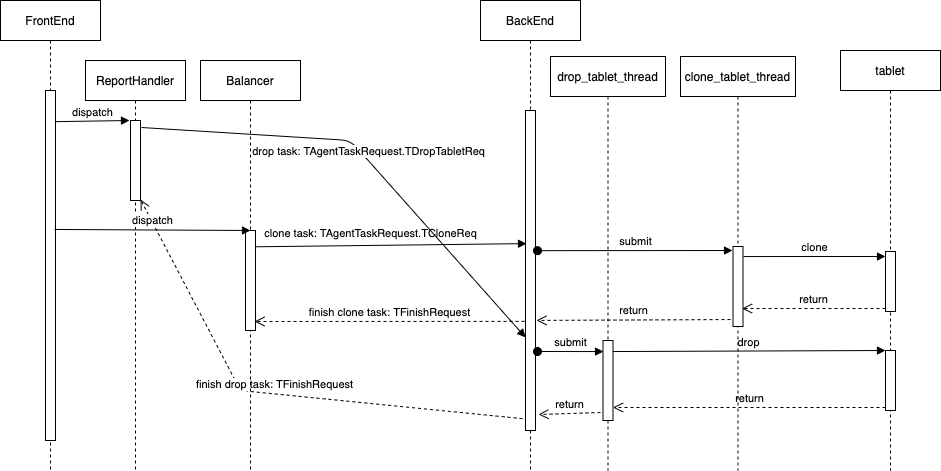

If, because of network delays or other reasons, the drop task RPC arrives at the BE after the clone task RPC, the tablet that has just finished cloning is deleted, resulting in data loss.
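A minimal simulation of node B shows why the arrival order decides whether data survives. The BE meta is modeled as a dict from tablet id to a label describing which copy is registered; `apply_task` and `run_on_node_b` are hypothetical names, and the model assumes a drop identifies the tablet only by id. The clone branch mirrors the be.INFO line "clone tablet exist yet, begin to incremental clone", seen when the stale tablet is still present.

```python
from typing import Dict, List, Tuple

Task = Tuple[str, int]  # (task type, tablet_id)


def apply_task(be_meta: Dict[int, str], task: Task) -> None:
    kind, tablet_id = task
    if kind == "CLONE":
        if tablet_id in be_meta:
            # be.INFO: "clone tablet exist yet, begin to incremental clone"
            be_meta[tablet_id] = "incremental clone over old tablet"
        else:
            be_meta[tablet_id] = "full clone"
    elif kind == "DROP":
        # In this model the drop carries only the tablet id, so it removes
        # whatever copy is currently registered.
        be_meta.pop(tablet_id, None)
    else:
        raise ValueError(f"unknown task type: {kind}")


def run_on_node_b(arrival_order: List[Task]) -> Dict[int, str]:
    # Node B still holds the stale tablet_1 left over from step2.
    be_meta = {11415: "stale copy from step1"}
    for task in arrival_order:
        apply_task(be_meta, task)
    return be_meta


print(run_on_node_b([("DROP", 11415), ("CLONE", 11415)]))  # {11415: 'full clone'}
print(run_on_node_b([("CLONE", 11415), ("DROP", 11415)]))  # {}
```

In the normal order the stale copy is removed and then replaced by a fresh clone; in the abnormal order the clone reuses the stale tablet and the late drop removes it, leaving no replica at all.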

The abnormal flow is as follows.

Log

Note the timestamps in the logs.

fe.log

-- step2: clone finished, tablet deleted from the FE meta

2021-05-26 10:14:37,352 INFO (tablet scheduler|33) [TabletScheduler.deleteReplicaInternal():983] delete replica. tablet id: 11415, backend id: 10006. reason: DECOMMISSION state, force: false

2021-05-26 10:14:37,352 INFO (tablet scheduler|33) [TabletScheduler.removeTabletCtx():1180] remove the tablet 11415. because: redundant replica is deleted

-- BE report: FE finds that the tablet is not in its meta and sends a drop task to the BE

2021-05-26 10:15:17,809 WARN (Thread-48|104) [ReportHandler.deleteFromBackend():676] failed add to meta. tablet[11415], backend[10006]. errCode = 2, detailMessage = replica is enough[1-1]

2021-05-26 10:15:17,809 WARN (Thread-48|104) [ReportHandler.deleteFromBackend():689] delete tablet[11415 - 328326456] from backend[10006] because not found in meta

-- balancing: FE sends a clone task to the BE

2021-05-26 10:15:17,812 INFO (tablet scheduler|33) [TabletScheduler.schedulePendingTablets():417] add clone task to agent task queue: tablet id: 11415, schema hash: 328326456, storageMedium: HDD, visible version(hash): 1-0, src backend: xxxxx, src path hash: 8876445914979651155, dest backend: 10006, dest path hash: -1651394959110222208

-- FE receives the BE's finish-task report

2021-05-26 10:15:17,819 INFO (thrift-server-pool-38|2545) [TabletSchedCtx.finishCloneTask():878] clone finished: tablet id: 11415, status: HEALTHY, state: FINISHED, type: BALANCE. from backend: 10004, src path hash: 8876445914979651155. to backend: 10006, dest path hash: -1651394959110222208

2021-05-26 10:15:17,819 INFO (thrift-server-pool-38|2545) [TabletScheduler.removeTabletCtx():1180] remove the tablet 11415. because: finished

-- BE tablet_report: BE 10006 should have tablet 11415 but does not, so FE sets it as bad

2021-05-26 10:16:17,810 ERROR (Thread-48|104) [ReportHandler.deleteFromMeta():573] backend [10006] invalid situation. tablet[11415] has few replica[1], replica num setting is [1]

2021-05-26 10:16:17,810 WARN (Thread-48|104) [ReportHandler.deleteFromMeta():602] tablet 11415 has only one replica 320661 on backend 10006 and it is lost, set it as bad

be.INFO

-- BE receives the clone task

I0526 10:15:17.814332 27810 task_worker_pool.cpp:219] submitting task. type=CLONE, signature=11415

I0526 10:15:17.814347 27810 task_worker_pool.cpp:231] success to submit task. type=CLONE, signature=11415, task_count_in_queue=2

I0526 10:15:17.814397 6411 task_worker_pool.cpp:879] get clone task. signature:11415

I0526 10:15:17.814409 6411 engine_clone_task.cpp:83] clone tablet exist yet, begin to incremental clone. signature:11415, tablet_id:11415, schema_hash:328326456, committed_version:1

I0526 10:15:17.814419 6411 engine_clone_task.cpp:99] finish to calculate missed versions when clone. tablet=11415.328326456.da44ef84b34f17cd-26194d724a4cca95, committed_version=1, missed_versions_size=0

-- clone task finished.

I0526 10:15:17.814425 6411 tablet_manager.cpp:921] begin to process report tablet info.tablet_id=11415, schema_hash=328326456

I0526 10:15:17.814515 6411 engine_clone_task.cpp:274] clone get tablet info success. tablet_id:11415, schema_hash:328326456, signature:11415, version:1

I0526 10:15:17.814522 6411 task_worker_pool.cpp:900] clone success, set tablet infos.signature:11415

-- BE receives the drop task

I0526 10:15:17.814952 30611 task_worker_pool.cpp:219] submitting task. type=DROP, signature=11415

I0526 10:15:17.814957 30611 task_worker_pool.cpp:231] success to submit task. type=DROP, signature=11415, task_count_in_queue=10

-- BE executes the drop task

I0526 10:15:17.816692 6387 tablet_manager.cpp:462] begin drop tablet. tablet_id=11415, schema_hash=328326456

I0526 10:15:17.816697 6387 tablet_manager.cpp:1401] set tablet to shutdown state and remove it from memory. tablet_id=11415, schema_hash=328326456, tablet_path=/ssd2/515813927922307072/palobe/data/data/899/11415/328326456

I0526 10:15:17.816767 6387 tablet_meta_manager.cpp:113] save tablet meta , key:tabletmeta_11415_328326456 meta_size=1172

-- remove task info

I0526 10:15:17.816998 6387 task_worker_pool.cpp:260] remove task info. type=DROP, signature=11415, queue_size=68

I0526 10:15:17.820333 6411 task_worker_pool.cpp:260] remove task info. type=CLONE, signature=11415, queue_size=1
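The ordering in the logs above can be checked mechanically. The sketch below parses the fe.log timestamp prefix and the glog-style prefix of be.INFO (severity, MMDD, time, thread id, file:line); glog omits the year, so 2021 is assumed from fe.log, and message text after the source location is shortened. Timestamps are only compared within a single log, since FE and BE run on different hosts whose clocks may differ.

```python
import re
from datetime import datetime

# glog prefix: severity, MMDD, time, thread id, file:line.
GLOG_RE = re.compile(
    r"^([IWEF])(\d{4}) (\d{2}:\d{2}:\d{2}\.\d{6}) +(\d+) ([^\]]+)\] (.*)$")


def glog_time(line: str, year: int = 2021) -> datetime:
    m = GLOG_RE.match(line)
    if m is None:
        raise ValueError(f"not a glog line: {line!r}")
    return datetime.strptime(f"{year}{m.group(2)} {m.group(3)}",
                             "%Y%m%d %H:%M:%S.%f")


def fe_time(line: str) -> datetime:
    # fe.log lines start with "YYYY-MM-DD HH:MM:SS,mmm" (23 characters).
    return datetime.strptime(line[:23], "%Y-%m-%d %H:%M:%S,%f")


fe_drop = fe_time("2021-05-26 10:15:17,809 WARN (Thread-48|104) "
                  "[ReportHandler.deleteFromBackend():689] delete tablet")
fe_clone = fe_time("2021-05-26 10:15:17,812 INFO (tablet scheduler|33) "
                   "[TabletScheduler.schedulePendingTablets():417] add clone task")

be_clone_submit = glog_time("I0526 10:15:17.814332 27810 task_worker_pool.cpp:219] "
                            "submitting task. type=CLONE, signature=11415")
be_clone_done = glog_time("I0526 10:15:17.814522 6411 task_worker_pool.cpp:900] "
                          "clone success, set tablet infos.signature:11415")
be_drop_submit = glog_time("I0526 10:15:17.814952 30611 task_worker_pool.cpp:219] "
                           "submitting task. type=DROP, signature=11415")
be_drop_begin = glog_time("I0526 10:15:17.816692 6387 tablet_manager.cpp:462] "
                          "begin drop tablet. tablet_id=11415")

# FE order is correct, BE receives the tasks in the reverse order.
print(f"FE issued DROP {(fe_clone - fe_drop).microseconds} us before CLONE")
print(f"BE received CLONE {(be_drop_submit - be_clone_submit).microseconds} us before DROP")
print(f"BE dropped the tablet {(be_drop_begin - be_clone_done).microseconds} us after the clone succeeded")
```

With the timestamps from this issue, the script reports 3000 us, 620 us and 2170 us respectively.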

Cause Analysis

The FE timeline is correct: the BE should receive the drop task first and the clone task second. However, because of network delays or other reasons, the drop is executed after the clone. Since the BE meta does not record any version information for the tablet, the BE cannot tell that the drop task was issued for the old copy, so the task meant for the old data deletes the newly cloned data.
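To illustrate what the missing version information would allow, here is a hypothetical sketch, not the project's actual fix. It assumes the BE meta kept a per-tablet stamp that every clone (full or incremental) bumps, that the tablet report carried this stamp to FE, and that FE copied the stamp it saw into the drop task. `BeMeta`, the stamp, and the `drop` signature are all invented for this example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class BeMeta:
    # tablet_id -> stamp; the stamp is a hypothetical "version" of the local
    # copy, bumped every time a clone (full or incremental) installs the tablet.
    tablets: Dict[int, int] = field(default_factory=dict)
    next_stamp: int = 1

    def report(self) -> Dict[int, int]:
        """Tablet report: FE would remember the stamp it saw for each tablet."""
        return dict(self.tablets)

    def clone(self, tablet_id: int) -> None:
        self.tablets[tablet_id] = self.next_stamp
        self.next_stamp += 1

    def drop(self, tablet_id: int, expected_stamp: int) -> bool:
        """Drop only the copy FE decided to drop; ignore stale drop tasks."""
        if self.tablets.get(tablet_id) != expected_stamp:
            return False
        del self.tablets[tablet_id]
        return True


# Abnormal flow on node B: the drop was decided from a report taken before
# step3, but it arrives after the step3 clone.
node_b = BeMeta()
node_b.clone(11415)                 # step1: A --> B, stamp 1
seen_by_fe = node_b.report()        # report that makes FE issue the drop
node_b.clone(11415)                 # step3 clone runs first, stamp 2
print(node_b.drop(11415, seen_by_fe[11415]), node_b.report())
# False {11415: 2}
```

With this guard the normal order still works: a drop that arrives first matches stamp 1 and removes the tablet, and the later clone installs stamp 2. A real implementation would also need to persist the counter across BE restarts so that stamps are never reused.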