-

Notifications

You must be signed in to change notification settings - Fork 505

METRON-2217 Migrate current HBase client from HTableInterface to Table #1483

Conversation

…uences and extra unnecessary changes for the migration.

...-hbase/metron-hbase-common/src/test/java/org/apache/metron/hbase/client/HBaseClientTest.java

Outdated

Show resolved

Hide resolved

| return getConnection(config).getTable(TableName.valueOf(tableName)); | ||

| } | ||

|

|

||

| private Connection getConnection(Configuration config) throws IOException { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Will we use different Configurations here?

| * the interface everywhere we touch HBase, we can use a lazy initialization scheme to encapsulate | ||

| * this within the HTableProvider. This is a sort of poor man's connection pool. | ||

| */ | ||

| private static Map<Configuration, ThreadLocal<RetryingConnection>> connMap = new ConcurrentHashMap<>(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

And actually, Connection is thread safe, so you only need to cache Tables. And since the Table is getting from Connection, which means it will not make new TCP connections to HBaseCluster, maybe we even do not need to cache the Tables, as it is only some in memory operations.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Plus you are going to leak HBaseConnections here, as they never get cleaned up.

|

Linking this thread from the Apache HBase dev list - https://lists.apache.org/thread.html/6b83cd7548efb8c37899063affc97e4c5ce823a13359a49b477e3c07@%3Cdev.hbase.apache.org%3E Thanks @Apache9 for the feedback and assistance here - greatly appreciated! |

tigerquoll

left a comment

tigerquoll

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hbaseconnections are thread-safe objects. Setting up a HBaseConnection is pretty resource intensive. If you are authenticating with a static identity that is not going to change (i.e. a service account), and you are constantly interacting with HBase, then I believe it is recommended that you keep the HBaseConnection object around in a cache or context, and reuse it to pull out Table and Mutation objects.

| * the interface everywhere we touch HBase, we can use a lazy initialization scheme to encapsulate | ||

| * this within the HTableProvider. This is a sort of poor man's connection pool. | ||

| */ | ||

| private static Map<Configuration, ThreadLocal<RetryingConnection>> connMap = new ConcurrentHashMap<>(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Plus you are going to leak HBaseConnections here, as they never get cleaned up.

Yes, that is what we're doing here. The connection model differs subtly from a standard request/response lifecycle web app in that we don't need to open/close the connections. They are bound to the process on startup for the duration of the program's execution. There should be no instance where we are left with a leaked connection because we're only opening a new one when the given thread does not already have an open connection. This generally only happens during application initialization/startup for us. |

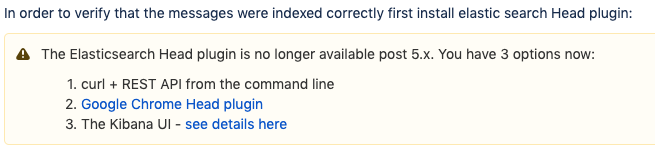

Test PlanEnrichmentsThis will cover enrichments, threat intel, and the bulk loading utilities that write data to HBase Test basic enrichmentSpin up full dev Optional - free up resources. We're going to be spinning up some additional topologies. The resources in full dev are limited, so you'll probably want to stop non-critical topologies in order to have enough Storm slots. Follow the following [updated] blog series steps here to get some data into Metron using Squid along with an enrichment

Test threat intelTest multi-threadingFor the final step, we'll deviate from the blog a bit so we can test that the thread pool doesn't cause any deadlocking/threading issues on the new HBase connection approach. Taken from https://cwiki.apache.org/confluence/display/METRON/2016/06/16/Metron+Tutorial+-+Fundamentals+Part+6%3A+Streaming+Enrichment. Follow the steps in the blog tutorial for setting up the user streaming enrichment, but instead of modifying/using bro as suggested at the end, follow the below instructions. Let's load the original whois list from step 1 as a threatintel for added fun. This way we can run multiple enrichments and also have it trigger threat intel from the same messages. Create a file Load the threat intel into HBase Clear the squid logs Re-run new squid client commands similar to step 1. Rather than a fraction of the records matching on domain for the whois enrichment, we'll have them all match for this test. Update your squid.json enrichment to include Stellar enrichments. We're going to duplicate the Load the changed enrichment Wipe your squid indexes in ES Stop the enrichment topology In Ambari, navigate to Metron > Configs > Enrichment. Make the following config adjustments:

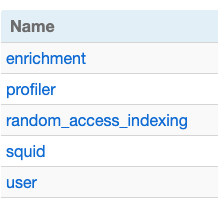

Restart the enrichment topology. You should see a log message in the storm worker logs similar to the following: Import the squid access data to Kafka. Run it multiple times by running the following: After a bit of time, you should see new records in the squid index that have the new enrichment and threat intel fields (note the fields dws #1-4). You should get 210 records in your squid index assuming you setup your squid access log with 7 records during the earlier squidclient setup. Test recoverability with HBase downNow, again clear your squid index. Stop HBase and wait a few moments. Import the squid data again: Wait about a minute and check your squid index. You should not see any new data in the index. Now, restart HBase again in Ambari. After HBase has restarted, check the squid index. After some amount of time, the data should be able to flow through enrichments and make it to the squid index. After completing the above steps you should not see any HBase exceptions or errors in the enrichment logs. ProfilerStop the profiler. In Ambari, set the profiler period duration to 1 minute via the Profiler config section. Create Modify Push your changes to Zookeeper Restart the profiler again. Clear your squid data And publish some squid data to the squid topic for roughly 500 seconds. This is a somewhat arbitrary choice, but we want to give the profiles enough time to flush in order for the enrichments to start picking up the profile data from HBase. Once this process completes, you should note the following:

|

|

Metron tutorial needs updating from the new Kafka client tools. Step 5 needs to be updated to read Kafka via: |

|

Metron tutorial step 5 global.json additional to support squid needs a comma after last square bracket. |

|

Metron tutorial Step 5, no spare workers available to run squid topology. Need to add instructions on adding another supervisor slot port. |

|

Metron tutorial part 2. To "Check if Zookeeper enrichment tag was properly populated" |

|

Metron tutorial part 2, should have instructions to retrigger squid data feed, and directions on how to drive the Rest interface to prove data is being enriched. |

|

Threatintel tutorial: Dropping of elasticsearch index requires a sudo as their owned by root |

|

What commands are you using to query the ES index? |

|

@tigerquoll thanks for the test script feedback! Ultimately, I think it would be nice to get the blog entries added in our use-cases directory where we can track and maintain them more easily. This is a good first step.

Updated

Not sure what you mean here - this is a snippet to use as guidance to modify the global.json, not replace it.

I added instructions to cleanup resources. I'm going to add the same to my test script here.

Fixed

That tutorial references back to part 1 for instructions, but I added an extra line with the command detail. The Head plugin can be used for searching the ES indexes in this context.

This series of tutorials assumes full dev - you should be running as root by default in that environment

|

|

@tigerquoll - Needed to update my enrichment config - I used stellar expression groups and forgot to update my script for the enrichment config accordingly. |

|

I just merged this PR with master, which includes the latest Maven changes from #1436. This PR (1483) doesn't include any changes to Maven dependencies. I reviewed the test plan on 1436 and it looks like we may have missed a test case for that PR which introduces a regression for streaming enrichments. Logging a ticket against master. |

|

I was getting classpath issues with my PR that now seem to be fixed with #1498. That PR should get merged tomorrow sometime, barring any discussion to the contrary. In the meantime, I've merged those changes into this PR for sake of testing. I ran through the major parts of the test plan one more time and everything works as expected. I did not repeat the failure recovery scenario. For reference, this another exception I ran into while running the basic parser test. Again, this issue appears to have been resolved now with the changes in pr 1498: |

|

For the test script: full dev still needed another supervisor slot in Storm to allow the squid enrichment topology to run. |

|

@tigerquoll what all are you running? This leaves me with 1 slot available still |

|

@tigerquoll I've updated the test script on this PR to reflect the note in the first tutorial. |

|

@mmiklavc I have completed tests on a 5 node cluster, for the profiler tests I see below exceptions in the worker log of enrichment |

|

@MohanDV thanks for the review! The enrichment I constructed wasn't robust in the sense that if an ip_dst_addr is missing, it would be expected to blow up like this. The data samples I provided for squid should all have had an ip_dst_addr. This looks like one of the records may be null for some reason or another. Can you share the sample squid access log? I suspect there's a record in there that doesn't look right. |

|

@mmiklavc Yes I have an entry |

|

@MohanDV that makes sense then - this would be an expected exception in that case. |

|

Tests completed on a fresh pull / full dev deployment. All tests passed successfully. One nit: Command for stopping unneeded parsers should be: |

|

One thing to note, there was a non-fatal class cast exception in the nimbus logs: |

|

Thanks @mmiklavc ! it's +1 with verification from my side . |

Contributor Comments

https://issues.apache.org/jira/browse/METRON-2217

UPDATE - Test plan has been added below. I ran through this plan and variations of it many times over as I went through testing.

I want to get this in front of people to start reviewing asap. It's going to take me a couple days to work through a reasonable test plan for this, but this should not hold up reviewing the approach. Of note is that the change from HTableInterface to Table by HBase has now shifted the burden of connection management from the HTable implementation to the end user/client. We previously had very little, if any, hooks to close our HBase tables or attempt to clean up resources. In response to this change, there were a couple options for dealing with this:

This PR takes the approach in option 2. The biggest question surrounding this approach is whether the included connection management changes introduced in the TableProvider are sufficient, or if we need to immediately take a more robust connection pooling approach to dealing with HBase connections. I spent some time looking at the current HTable implementation that we depend on. Every time an HTable is created, the underlying code makes a call to an internal connection manager. It's unclear to me what the connection management contract is for the user in this case, e.g. stale connection cleanup, connection retries, connection pooling, etc. This is probably the riskiest part of this change. The way that I'm handling this is to

The combination of these 2 options provides a semi-robust way to handle connections without boiling the ocean as well as offering per-thread connection re-use that should limit the overall number of connections we keep open to HBase in a reasonable and reliable way.

I'd like to connect with some HBase committers or just hop on their dev list and ask what the implications of leaving connections alone would be. Again, we don't really do anything to manage creating/closing connections currently, and afaict we have never had any reports of leaking connections. This doesn't mean we won't now, but it's worth noting what the status quo has been for a while now.

Note: This is a backwards compatible change

Pull Request Checklist

Thank you for submitting a contribution to Apache Metron.

Please refer to our Development Guidelines for the complete guide to follow for contributions.

Please refer also to our Build Verification Guidelines for complete smoke testing guides.

In order to streamline the review of the contribution we ask you follow these guidelines and ask you to double check the following:

For all changes:

For code changes:

Have you included steps to reproduce the behavior or problem that is being changed or addressed?

Have you included steps or a guide to how the change may be verified and tested manually?

Have you ensured that the full suite of tests and checks have been executed in the root metron folder via:

Have you written or updated unit tests and or integration tests to verify your changes?

n/a If adding new dependencies to the code, are these dependencies licensed in a way that is compatible for inclusion under ASF 2.0?

Have you verified the basic functionality of the build by building and running locally with Vagrant full-dev environment or the equivalent?

For documentation related changes:

n/a Have you ensured that format looks appropriate for the output in which it is rendered by building and verifying the site-book? If not then run the following commands and the verify changes via

site-book/target/site/index.html:n/a Have you ensured that any documentation diagrams have been updated, along with their source files, using draw.io? See Metron Development Guidelines for instructions.

Note:

Please ensure that once the PR is submitted, you check travis-ci for build issues and submit an update to your PR as soon as possible.

It is also recommended that travis-ci is set up for your personal repository such that your branches are built there before submitting a pull request.