-

Notifications

You must be signed in to change notification settings - Fork 594

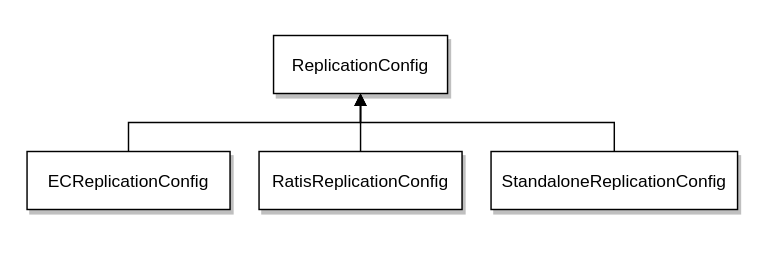

HDDS-4882. Introduce the ReplicationConfig and modify the proto files #1973

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

This change LGTM, but we should let Uma have a look too. I think you have linked this PR to the wrong Jira - it should be HDDS-4882 I think? Also, is it your intention to commit this to master, rather than the EC branch? |

| required uint64 size = 1; | ||

| required uint32 numBlocks = 2; | ||

|

|

||

| //deprected, use RatisReplicationConfig |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

deprected -- > deprecated

I think let's discuss this separately in master as it's making some deprecations?

I suggest first let's discuss how we pass the ReplicationConfig to server from createKeyAPI:

It should be a same object type isn't it?

If we have two separated config classes (RatisReplicationConfig and ECReplicationConfig), then it's not easy to pass through the same API right?

Example: here is the place we build OmKeyArgs and pass, here we are passing ReplicationFactor. Now having two classes, how we can use them here?

Also currently replicationFactor is carrying two ReplicationType factor ( One is for NON Ratis and other is for Ratis). So, we need another config class for non Ratis ( you named only RatisReplicationConfig, why do we need to bother Ratis or non Ratis? it's just replication right )?

I believe we need more discussion here.

I think we can bring ReplicationConfig changes in master itself and in EC branch we just need to add new EC config.

ECReplicationConfig: based on this name, we accepted the terminology Replication for EC as well. So, why can't we add replication type with more specific like EC_3_2 and replication factor is 5.

Then based on replication type EC_3_2, we can define EC specific interpreter class to understand what's the parity and data block numbers?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If we have two separated config classes (RatisReplicationConfig and ECReplicationConfig), then it's not easy to pass through the same API right?

In Java I assume we will have a common superclass / interface, so it will be possible. Unfortunately we can do it only with protobuf 3 and I wouldn't like to be blocked by that.

Until using proto3 I followed the same approach what we used earlier:

We have multiple subclasses (like CloseContainerRequestProto, ListContainerRequestProto ...) and based on a type ContainerCommandRequestProto.type we choose the right one.

Here I did exactly the same. The type is encoded in the replication type and based on the type we have two protos. But we can deserialize them to good old java classes which have common marker interface.

So, we need another config class for non Ratis ( you named only RatisReplicationConfig, why do we need to bother Ratis or non Ratis? it's just replication right )?

If I understood well, you talk about STANDALONE.

- STANDALONE replication is deprecated, therefore I didn't create configuration for that.

- The supported way to use ONE factor is using one node Ratis

- we do need to bother Ratis or non Ratis because they are different replications. In this model we may have different replication configuration for each of the replcation method

I think we can bring ReplicationConfig changes in master itself and in EC branch we just need to add new EC config.

I think the current state of the EC development is more like a proto-type phase. I would prefer to do this first on EC branch, check if it works well end2end (at least the read / write path). If it works well we can add it to the master.

But I wouldn't change master until we see a working poc from ec.

So, why can't we add replication type with more specific like EC_3_2 and replication factor is 5.

Because it's a less generic approach and instead of using meaningful, typed fields to hold information we would use string based naming conventions which is more error-prone, what I would like to avoid.

And what about if we need to introduce 2-3 new parameters for EC? Would it be EC_3_2_PARAM1_PARAM2_PARAM3?

| //the index of this replica in the replication group. | ||

| //this will be different for each instance in case of EC | ||

| //but the same (0) for all instances for standard Ratis | ||

| optional int32 replicationIndex = 14; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I see this index added only ContainerReplica: but if we don't add it to ContainerID itself, this might leads to more changes.

example: Once we received container replica report, the below API we use to updateContainerReplica:

So, your thought is to add another field in this kind of API to carry index? otherwise we need to add that in ContainerID also.

private void updateContainerReplica(final DatanodeDetails datanodeDetails,

final ContainerID containerId,

final ContainerReplicaProto replicaProto)

throws ContainerNotFoundException, ContainerReplicaNotFoundException {

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry, I didn't get the challenge here. Can you please explain it in more details?

I am not sure if it's an answer: but I would prefer to keep it in a separated field to make sure we add it only when it's needed. It provides more visibility and code path which more easy to understand...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@elek Why does this patch not include all changes to API as well when this field is introduced?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I prefer to post smaller patches (when possible) to make it easier to review.

But in this case it's a fair question: it can be harder to imagine how would it be used from SCM protocol, so I quickly created the next patch on top of it: please see pr #2010

(Note: even #2010 not a full refactor we need more patches to refactor the pipeline management... Or, it can be part of HDDS-4892)

|

Why is replication config clubbed with the container replication index? -1 on mixing them in the same patch. |

arp7

left a comment

arp7

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

-1 with replication factor changes. Also the change looks quite incomplete. Won't we need to propagate the replication index in almost every message and API where the container ID is being passed? This is going to be a huge change.

This is one of the reasons I prefer the bitmasking approach.

No, we won't need it. That's one reason why I am against bit masking. For example for OM the instance id should be fully opaque and even for internal SCM container grouping it's not required. Other reasons are explained in the attached design document at https://issues.apache.org/jira/browse/HDDS-3816 Until now, I have strong concerns about the bitmasking approach but others (including Uma) said that but approach fine with them, so this seems to be Less Common Denominator. Yesterday we discussed this with @swagle: as the majority of this patch is not about container instance id but about |

|

Container indexes are removed by #c0e9a2f, can you please @arp7 have an other look. Thx a lot. |

|

This is not the correct way to bring replication factor changes into Ozone source. This requires a holistic discussion of the requirements and design first. My -1 on this PR still stands. |

That is just one example. However you will need the index in a bunch of places in the SCM, DataNode and client and it will result in many method signature changes all over the codebase. I don't disagree with your reasoning and also I understand the merits of a separate index. However I think the scope of the changes with adding an index everywhere are going to be huge so it may be better to choose the more practical option. Apart from the scope of changes I am unsure about adding yet one more index into the mix (we already have container ID, block ID, chunk ID). Is there anyone else in favor of going with the index-based approach? If you can get consensus from others who are working on EC then I won't object to going with indexes. |

But none of the reasons really technical concerns to me. Example: you said backward compatibility issue. It's not valid, unless you did not get the proposal fully. In the JIRA, in one of the doc you have listed and we discussed Since you are strongly willing to do the separated index, please go ahead and provide the patch with clear analysis. It does not mean that I am agreeing with your concerns what you have on bit masking. I am still saying they are not really valid concerns for me. Except the point, you said it's complex for you. It's a subjective, I cannot judge others understanding :-) . We just need to move forward and not really worth spending much time on this small part, which will block everything. Here are some inputs for your analysis: we need to create the blockOutputStreams to different nodes by passing, BlockID(containerID, localID). Here we must include the index as well, so that datanode knows and report the index to SCM. With bit asking this happens implicitly. Since you are trying index based approach here, we need to pass it explicitly. Let me know if your thought is different. |

|

It's confusing to me. JIRA still saying container metadata, but we have only changes related to replication config here. I see you have removed that changes here: #1973 (comment) Thanks |

|

Thanks for update the title of the Jira @umamaheswararao. I uploaded my proposal about the maintaining the instanceIndex to #2055 I suggest checking it and continue the discussion about container instances there. Let's talk here only about the |

Can you please help with more specific, technical questions? After 4-5 month of discussion and sharing dozen of pages design docs, -- I think -- it's good to be more exact and talk about the implementation details as we agreed on the high level designs. I think sharing some initial POC code can help this discussion. |

Upgrades. Not just client->server compat, also downgrade concerns. E.g. if the new ReplicationConfig message is in the OM ratis log, will the old software fail after downgrades? Secondly if the new configuration introduces new functionality, we must ensure it is not used until upgrade is finalized. We either bring it through the upgrade framework or think of some other way.

Was there a design note about these details of this index based approach? Perhaps I missed it. I am okay to have this be part of the jira description also. Will the clients send the old ReplicationFactor message in perpetuity?

It is very hard to tell from just the proto changes what direction we are going in. This is one instance in which a more complete patch with a short design note will help give reviewers a more complete picture. |

Thanks for the question, it's a very good and important one. I think we should differentiate between backward-compatibility on RPC and backward-compatibility related to persistence. On RPC we don't have any issue, as t's the question of the serialization/de-serialization code. That code can read information from both of the fields (old replicationFactor and new RatisReplicationConfig) as I am pretty sure that we can't remove any field we can just start the deprecate process. We should support the existing replicationFactor until Ozone 2.0. The persistence side is slightly more tricky as we should be sure that we persist the old field, at least until the metadata version is upgraded. That can be handled by the upgrade framework when it's ready to use (or we can just always fill the legacy field until a while)

Please note that this PR doesn't include anything related to the index based approach. Please continue the discussion about indexes in #2055. This PR is purely about (Note: you can find the design docs about both topics on the parent EC Jira)

Just the old clients. Server-side can check both the fields to support both old and actual field. This should be done until Ozone 2.0.

Totally agree, and providing more code is already suggested by @swagle. And as I wrote in the earlier comments, you can find some example code in #2010 where you can find the details of serialization/de-serialization (full SCM is not refactor as it's blocked by this discussion, but it has code for all the important parts...) Design thoughts can be found in EC v3 design doc. |

I don't think that will work. It will prevent new clients from talking to an old server. It will be useful to write down the 4 permutations in a table format. E.g. the columns could be:

|

|

Thanks the question (and the clear problem description) @arp7 In general, I should fill both the new and old fields all the time to make it possible to deprecate the old fields eventually. But let me explain my view in more details (as I also did offline). There are two questions here (I think).

The first part can be done easily with introducing new Java classes (and only one This change is separated and uploaded in the PR #2068 This The second question: do we need to follow existing conventions (long-term) or not. There are two options here:

Summary: 1. follows the convention and more flexible (even Using the first approach (this patch) we need to take care about the backward compatibility:

|

|

Thanks @elek for filling out the table. A few questions on row 3 from the table:

Are you proposing we stop storing the old factor/type after finalize? Also until finalize will you be storing both or just the old factor/type? If you do store ReplicationConfig prior to finalize what will be the OMs behavior on downgrade. Will it choke on seeing the unknown type in RAFT log or DB, or just ignore it. My guess is the latter, since we use protobuf serialization but it will be good to have a definite answer. In either case you will need to hook with the upgrade process somehow to determine that there is an upgrade in progress. Secondly, what if someone sends a scheme that cannot be converted to old factor/type before the upgrade is finalized? Should we fail the request? It will be good to collect these points and table in either a short doc or in the jira description so folks more familiar with the upgrade path like @avijayanhwx can take a look too. |

|

Sorry one more comment on row 1:

This may not be possible when the scheme cannot be expressed using factor/type. I guess the client can suppress sending factor/type in this case. It will be good to describe this also clearly. |

|

Thanks for the discussions.

This is very good point. so, OM state needs to be checked for every request to make sure they are convertible. @avijayanhwx could you please check above? if needed we can schedule online meeting as well. ( this is an important piece to complete soon as we have dependencies on this ) |

|

Yeah we should crisply define the semantics that we want first, and then we can discuss with @avijayanhwx how to implement it. |

|

Adding a few more columns that I think we need to define:

G3 and G4 also need updates. |

When we say "scheme" here, are we talking about an EC config that is not "convertible" into factor/type representations? Or, are we talking about some other replication config representation that is not convertible? |

Yeah that should be it. I cannot think of any other scenario since all we will have is Ratis replicated and EC. |

Got it. Until finalization the server cannot entertain any EC request since that is backward incompatible. This can be added as a check in the OM request handling logic. |

Agree. But it's a generic problem. Independent on this patch it should be implemented, IMHO. |

|

We discussed this question with @umamaheswararao and @sodonnel on the EC sync, and based on the feedback I would like to highlight my previous comment.

We can do it in a more lightweight and more compatible way (use ReplicationConfig just in the Java code and for new replication types in protos). This is uploaded in a separated PR (please check it). This PR talks about changing the old replication types which is more like a strategic question... |

@elek Is 2068 for the master and this PR for the branch (changes to be brought in via upgrades)? Do we have it the other way around? |

There are two ways to do this change and both can be done on master or EC:

|

|

Closing this PR for now. I think it's an important question if we need to this full migration or not. (Especially to support advanced configuration for Ratis/Standalone, like different closed container replication scheme), but it's enough to decide later. But for EC it's enough to have #2089 #2068, and I suggest to focus on those. |

What changes were proposed in this pull request?

Modify the SCM/datanode proto file with:

What is the link to the Apache JIRA

https://issues.apache.org/jira/browse/HDDS-4882

How was this patch tested?

CI