-

Notifications

You must be signed in to change notification settings - Fork 5.3k

use YMM registers on x64 for BlkUnroll. #33665

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

PTAL @CarolEidt @dotnet/jit-contrib |

|

Are the loads/stores aligned? If not, |

| if (size >= XMM_REGSIZE_BYTES) | ||

| { | ||

| #ifdef FEATURE_SIMD | ||

| bool useYmm = (compiler->getSIMDVectorRegisterByteLength() == YMM_REGSIZE_BYTES) && (size >= YMM_REGSIZE_BYTES); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do we want to tie this to the size of Vector<T> rather than to the underlying ISAs that are available (based on the instructions used)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you mean to replace compiler->getSIMDVectorRegisterByteLength() == YMM_REGSIZE_BYTES with compiler->>getSIMDSupportLevel() == SIMD_AVX2_Supported? I do not have a preference here, what is your opinion?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No, to replace it with a compSupports(InstructionSet_AVX2) check so we aren't tied to Vector<T> when we aren't actually using Vector<T>.

The appropriate check might change slightly with #33274, but I imagine this PR would get merged first. CC. @davidwrighton

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Got it, will do that before merge, thanks.

| emit->emitIns_R_R(INS_mov_i2xmm, EA_PTRSIZE, srcXmmReg, srcIntReg); | ||

| emit->emitIns_R_R(INS_punpckldq, EA_16BYTE, srcXmmReg, srcXmmReg); | ||

| #ifdef TARGET_X86 | ||

| // For x86, we need one more to convert it from 8 bytes to 16 bytes. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This else block could be more efficient as just a shuffle (SSE2) or broadcast (AVX2). You can see the pattern we use for setting all elements of a vector to value here:

- 32-bit: https://source.dot.net/#System.Private.CoreLib/Vector128.cs,399

- 64-bit: https://source.dot.net/#System.Private.CoreLib/Vector128.cs,434

Likewise for V256 it can be a shuffle + insert (AVX) or broadcast (AVX2):

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We start with 2 bytes of data after emit->emitIns_R_R(INS_mov_i2xmm, EA_PTRSIZE, srcXmmReg, srcIntReg);, if we want to use a broadcast we will need to copy it up to 4 bytes. It could still be profitable, I think it is worth to open an issue from your comment, mark it as easy and up-for-grabs probably.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How do we start with 2 bytes of data? this is mov_i2xmm which is movd which only supports reading 32-bits or 64-bits (also hence the EA_PTRSIZE).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

oh yes, we are transferring from 1 byte that Initblk accepts to int/long/float size in fgMorphPromoteLocalInitBlock

The alignment rules are the same as they were for xmm usages before, and the instruction are the same. faster than for a random value rcx value we are good. I see that we have |

I don't know about that

I think that depends on a lot of factors. The optimization manual has a lot of notes on the penalties for crossing a cache line vs crossing a page boundary (~150 cycles) and calls out that it may be more optimal to do two 16-bit operations than a single 32-bit operation. |

@adiaaida could you please help us with that? |

|

@DrewScoggins could probably help |

For cases when a 32bit operation hits the cache/page boundary, one of those two 16 bit operations most likely will hit it too, won't it? (unless the boundary is between them) |

|

We currently do not have any PR level performance testing that is done on actual hardware. The runs that you are seeing are basically just CI for our performance tests to make sure that changes don't break our perf tests. To investigate this issue further I would go here, https://github.com/dotnet/performance/blob/master/docs/benchmarking-workflow-dotnet-runtime.md, and folllow the instructions how on to run the performance tests locally against a built version of the runtime repo. If you need any help running these just let me know and I can help with anywhere you get stuck. Also after you have checked in we will run the full battery of tests against the change and I can point you to the report that we generate and you can look through that and see if we are seeing any major changes to the benchmarks that we have. |

The manual isn't exactly clear on this, it just indicates that it doubles the split rate.

etc |

|

Thanks @DrewScoggins. I will do a local perfomance run when I have time and a free machine and post results here. |

The same statement is true for two SSE loads if vectors are not 16 bytes aligned basically. So: |

I don't believe so, hence the explicit recommendations:

I believe the underlying issue is that the cache is 64-bytes and so with YMM you have a cache line split every other access. |

|

@sandreenko - I'm looking forward to seeing performance numbers; it seems that the guidance would indicate that it could be problematic. |

|

Quoting Agner Fog (2011):

I have an old (2012) blog post on the topic: Data alignment for speed: myth or reality? I'm skeptical that switching from ymm to xmm can improve matters. |

| emit->emitIns_R_S(simdMov, EA_ATTR(regSize), tempReg, srcLclNum, srcOffset); | ||

| } | ||

| else | ||

| auto unrollUsingXMM = [&](unsigned regSize, regNumber tempReg) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'd prefer to make these actual methods rather than lambdas. To me the lambdas make it more difficult to see what's being modified, and it seems that sometimes the debugger(s) don't support them all that well. That said, doing that here would require some out (pointer) arguments). @BruceForstall do we have any guidance on this for the JIT?

I'm interested in others' thoughts on this @dotnet/jit-contrib. Perhaps it's only me that finds them obfuscating.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I did not want to do a method because it would need 7+ arguments and ~5 of them were pointers or references.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Right - I realize that there are tradeoffs, but I would probably make them in the other direction. I'd still like to hear from other JIT devs on this question, because I think it will continue to come up.

ping @dotnet/jit-contrib.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Given that this is mainly just used to cover the regular + trailing case, and the trailing case should only ever be one iteration, might it be simpler to just copy the logic for the one trailing case needed?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For just this case I don't think it's worth debating over - but I think this is a good opportunity to discuss these tradeoffs relative to the JIT coding conventions. It may be that most others feel as I do, but it may also be that most JIT devs would prefer the slight obfuscation and possible debug challenges over the admittedly messy approach of passing in a bunch of pointer arguments. As I say, it's a tradeoff and it would be nice to get some consensus on where the balance should lie.

This, to my knowledge (and based on past profiling numbers) is only applicable to unaligned loads/stores that don't cross a cache-line or page-line boundary. |

|

@tannergooding in this comment you quoted the optimization manual but it seems it doesn't include this green block: |

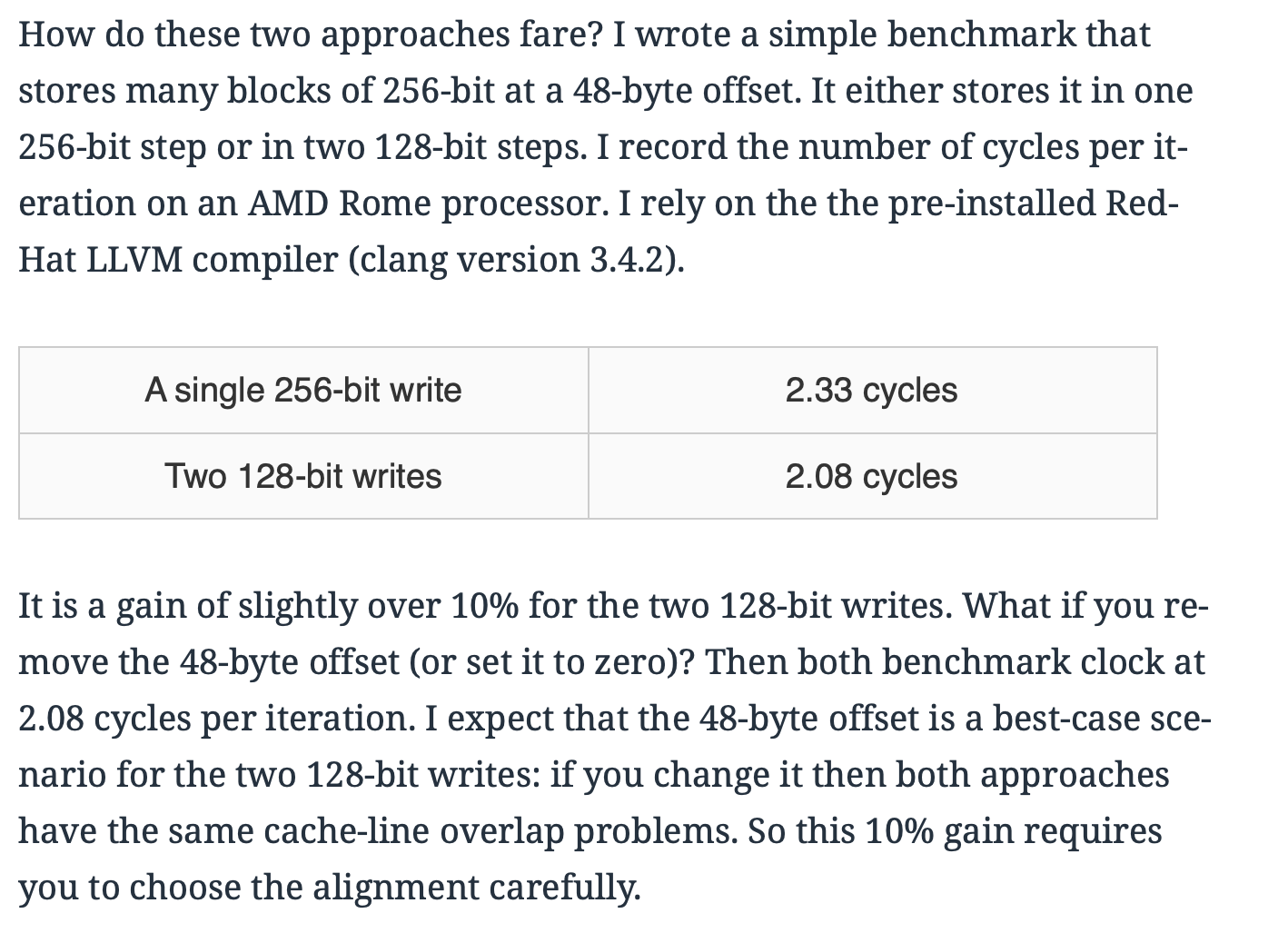

After checking, if you configure things just right, so that the ymm is stored always on two cache lines, and where the two xmm avoid crossing a cache line, there is a small benefit to the xmm approach. But as with my Data alignment for speed: myth or reality? post quoted above, these are small differences of the order of 10%. You are not going to save 50% or anything of the sort. |

|

Blog post with hard numbers and source code (C++) : Avoiding cache line overlap by replacing one 256-bit store with two 128-bit stores Screenshot: |

@EgorBo, looks like I just didn't have the latest copy of the manual. I have the copy from 2018, not the copy from Sep 2019. In any case, Skylake is a relatively newer baseline and many of the azure machines sizes are still on Haswell/Broadwell: https://docs.microsoft.com/en-us/azure/virtual-machines/sizes-general.

Right, I wasn't expecting anything crazy, but 10% could still be significant for certain workloads. This also looks to assume that the 16-byte read/writes will be aligned. It might be beneficial to also see what the numbers are like when none of it is aligned (so you get a cache line split every other load/store for YMM and every 4 loads/stores for XMM). It might also be beneficial to check on a Haswell or Broadwell era processor as that is a common baseline for Azure and AWS machines. |

|

Thanks everybody for the feedback. I was running performance\benchmarks\micro for a few hours and the results are on my local machine (Intel Core i7-6700 CPU 3.40GHz (Skylake)) by MannWhitney(3ms): I would be glad to discuss it further and to do more measurements for this change if I had more time. |

I'd be fine with this. |

Of course, one needs to test on one's actual hardware in one's actual code paths... but I did run these tests informally last night and allude to it in my post. On AMD Rome, the only time the two XMM bested the one YMM is when you the exact 48-byte offset scenario. That is, the 48-byte offset is designed to make the two XMM approach look as good as possible. It should be reasonably easy to rerun my benchmark anywhere... you just need Linux with perf counter access... and a compiler that does not screw up your code. |

Can you help me understand this? I don't see how codegen details can impact inlining decisions.

You might want to create a focused benchmark similar in spirt to the ones we had for the prolog zeroing work (see for example). |

good question, don't we use code size as an inlining metric if the method that we are considering to inline was already compiled? I saw that I ran the diffs again but this time it did not show me the regressions, the improved methods stayed the same DetailsHm, I have run it a few more times, it shows a bit different results each time, sometimes it shows that |

No, we don't. It creates coupling between the back end of the jit and the front end that makes it hard to work on back end changes. The inliner can extrapolate about the general behavior of the backend (say estimating that this kind of IR typically creates this much native code) but doesn't look at exact sizes.

Just in SPC, right? Did you see this in other assemblies? @erozenfeld has been working hard to try and fix the flakiness, but new nondeterminisc modes keep creeping in. |

|

I'll try to repro and fix the non-deterministic pmi behaviour. |

|

I have recollected the numbers with |

|

The non-deterministic pmi behavior is addressed in dotnet/jitutils#255 . |

|

I am going to close it for now until I find time for more benchmarking if somebody wants to finish it - feel free. |

PMI SPC diffs:

Total bytes of diff: -7255 (-0.13% of base),the actual diff is better, but we start inlining more and that is shown as asm size regression.

Details

frameworks libraries (I used crossgen without check for

compiler->getSIMDVectorRegisterByteLength() == YMM_REGSIZE_BYTESto estimate PMI diffs. PMI takes too long)Total bytes of diff: -56175 (-0.17% of base)Details

Diffs look like:

before:

after:

and we are emitting

VZEROUPPEReverywhere now, so adding YMM usage doesn't add any penalty, that could change with #11496Fixes #33617.