
improve rocksDB #3006


1. Make all existing RocksDB parameters configurable.
2. Add a RateLimiter feature, disabled by default.

@eolivelli @pkumar-singh @zymap

Have you considered using RocksDB's native configuration file support? https://github.com/facebook/rocksdb/blob/main/java/src/main/java/org/rocksdb/OptionsUtil.java

I think it's a good idea. After finishing this PR, I will research that direction.

I would suggest using the RocksDB config file directly. That way we don't need to introduce extra configuration changes in the bookkeeper repo.

…apache#3011)

* Auditor should get the LedgerManagerFactory from the client instance
* Removed unused import

* Initial commit for dropping maven
* fix gh action
* fix typo

* Added OWASP dependency-check
* Suppress ETCD-related misdetections

### Motivation

While experimenting with the OWASP dependency checker, I noticed that we have 3 versions of netty mixed in: 4.1.72 (the current one, expected) plus 4.1.63 and 4.1.50 (brought in with ZK and some other dependencies).

### Changes

Made gradle enforce the same version of netty in subprojects.

Reviewers: Nicolò Boschi <boschi1997@gmail.com>, Enrico Olivelli <eolivelli@gmail.com>

This closes apache#3008 from dlg99/gradle-netty

…ns etc.

### Motivation

Older versions of guava (with CVEs) are used in some subprojects.

### Changes

Forced the same version of guava; fixed deprecation problems (murmur3_32) and compilation problems (checkArgument). checkArgument cannot be statically imported because there are now overrides of it; checkstyle was not very cooperative, so I had to remove the import altogether. Then updated the guava version to match the one in Pulsar.

Reviewers: Enrico Olivelli <eolivelli@gmail.com>, Nicolò Boschi <boschi1997@gmail.com>

This closes apache#3010 from dlg99/gradle-guava

### Motivation

Changelog: https://netty.io/news/2022/01/12/4-1-73-Final.html

The main reason to upgrade is an [intensive I/O disk scheduled task](netty/netty#11943) introduced in 4.1.72.Final, which is synchronous and can cause the EventLoop to be blocked very often.

### Changes

* Upgrade Netty from 4.1.72.Final to 4.1.73.Final
* [Netty 4.1.73.Final depends on netty-tcnative 2.0.46](https://github.com/netty/netty/blob/b5219aeb4ee62f15d5dfb2b9c29d0c694aca05be/pom.xml#L545), the same as Netty 4.1.72.Final, so no upgrade is needed

Reviewers: Andrey Yegorov <None>

This closes apache#3020 from nicoloboschi/upgrade-netty-4.1.73

### Motivation

BK 4.14.4 has been released and we should test it in the upgrade tests.

Note: for the sake of test performance, we test the upgrades only for the latest release of each minor version.

### Changes

* Replaced BK 4.14.3 with 4.14.4

Reviewers: Enrico Olivelli <eolivelli@gmail.com>, Andrey Yegorov <None>

This closes apache#2997 from nicoloboschi/tests/add-bk-4144-backward-compat

* Remove annoying println
* Update gradle to 6.9.2

This includes `Mitigations for log4j vulnerability in Gradle builds` gradle/gradle#19328

Full release notes: https://docs.gradle.org/6.9.2/release-notes.html

…che Pulsar 1.21) (apache#3028)

### Motivation

I was trying to start multiple bookies locally and found it inconvenient to specify different http ports for different bookies.

### Changes

Add a command-line argument `httpport` to the bookie command to support specifying the bookie http port from the command line.

Descriptions of the changes in this PR: Dependency change

### Motivation

I encountered apache#3024 and noticed that a newer version of RocksDB includes multiple fixes for concurrency issues with various side effects, and fixes for a few crashes. I upgraded, ran the `org.apache.bookkeeper.bookie.BookieJournalTest` test in a loop, and have not reproduced the crash so far. It is hard to say with 100% certainty that it is fixed, given that it was not happening all the time.

### Changes

Upgraded RocksDB

Master Issue: apache#3024

Reviewers: Enrico Olivelli <eolivelli@gmail.com>, Nicolò Boschi <boschi1997@gmail.com>

This closes apache#3026 from dlg99/rocksdb-upgrade

### Motivation

Now that the entryMetaMap can be backed by RocksDB, we should avoid updating it when unnecessary. The `doGcEntryLogs` method iterates through the entryLogMetaMap and updates each meta if a ledger no longer exists. We should check whether the meta was actually modified in `removeIfLedgerNotExists`; if it was not, we can skip the update of the entryLogMetaMap.

### Modification

1. Add a flag that records whether the meta was modified in the `removeIfLedgerNotExists` method. If it was not, skip updating the entryLogMetaMap.
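The flag-based skip described above can be sketched roughly as follows. This is an illustration only, not the BookKeeper code: `EntryLogMetaSketch`, `GcPassSketch`, and the `LongPredicate` used to test ledger existence are hypothetical stand-ins, and the `writes` counter stands in for a write to the RocksDB-backed map.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.function.LongPredicate;

/** Hypothetical stand-in for per-entry-log metadata: ledgerId -> bytes used. */
final class EntryLogMetaSketch {
    private final Map<Long, Long> ledgerSizes = new HashMap<>();

    void addLedgerSize(long ledgerId, long size) {
        ledgerSizes.merge(ledgerId, size, Long::sum);
    }

    int ledgerCount() {
        return ledgerSizes.size();
    }

    /** Drops ledgers that no longer exist; returns true only if something was removed. */
    boolean removeIfLedgerNotExists(LongPredicate ledgerExists) {
        boolean modified = false;
        Iterator<Long> it = ledgerSizes.keySet().iterator();
        while (it.hasNext()) {
            if (!ledgerExists.test(it.next())) {
                it.remove();
                modified = true;
            }
        }
        return modified;
    }
}

/** Hypothetical GC pass that counts how many writes would reach the backing map. */
final class GcPassSketch {
    int writes = 0;

    void gc(Map<Long, EntryLogMetaSketch> metaMap, LongPredicate ledgerExists) {
        for (Map.Entry<Long, EntryLogMetaSketch> e : metaMap.entrySet()) {
            // Only write back when the metadata actually changed.
            if (e.getValue().removeIfLedgerNotExists(ledgerExists)) {
                writes++; // stands in for a put on the persistent map
            }
        }
    }
}
```

With a persistent map, every skipped write avoids a serialization and a RocksDB put, which is why the flag matters more there than with an in-memory map.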

### Motivation

When a region-aware or rack-aware placement policy is configured but the region or rack name is set to `/` or an empty string, the following exception is thrown while handling bookies that join.

```

java.lang.StringIndexOutOfBoundsException: String index out of range: -1

at java.lang.String.substring(String.java:1841) ~[?:?]

at org.apache.bookkeeper.net.NetworkTopologyImpl$InnerNode.getNextAncestorName(NetworkTopologyImpl.java:144) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.net.NetworkTopologyImpl$InnerNode.add(NetworkTopologyImpl.java:180) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.net.NetworkTopologyImpl.add(NetworkTopologyImpl.java:425) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.TopologyAwareEnsemblePlacementPolicy.handleBookiesThatJoined(TopologyAwareEnsemblePlacementPolicy.java:717) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.RackawareEnsemblePlacementPolicyImpl.handleBookiesThatJoined(RackawareEnsemblePlacementPolicyImpl.java:80) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.RackawareEnsemblePlacementPolicy.handleBookiesThatJoined(RackawareEnsemblePlacementPolicy.java:249) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.TopologyAwareEnsemblePlacementPolicy.onClusterChanged(TopologyAwareEnsemblePlacementPolicy.java:663) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.RackawareEnsemblePlacementPolicyImpl.onClusterChanged(RackawareEnsemblePlacementPolicyImpl.java:80) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.RackawareEnsemblePlacementPolicy.onClusterChanged(RackawareEnsemblePlacementPolicy.java:92) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.BookieWatcherImpl.processWritableBookiesChanged(BookieWatcherImpl.java:197) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.client.BookieWatcherImpl.lambda$initialBlockingBookieRead$1(BookieWatcherImpl.java:233) ~[io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.discover.ZKRegistrationClient$WatchTask.accept(ZKRegistrationClient.java:147) [io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at org.apache.bookkeeper.discover.ZKRegistrationClient$WatchTask.accept(ZKRegistrationClient.java:70) [io.streamnative-bookkeeper-server-4.14.3.1.jar:4.14.3.1]

at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:859) [?:?]

at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:837) [?:?]

at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:478) [?:?]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515) [?:?]

at java.util.concurrent.FutureTask.run(FutureTask.java:264) [?:?]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:304) [?:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) [?:?]

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) [io.netty-netty-common-4.1.72.Final.jar:4.1.72.Final]

at java.lang.Thread.run(Thread.java:829) [?:?]

```

The root cause is that the node's networkLocation is an empty string, and calling `substring(1)` on it throws `StringIndexOutOfBoundsException`.

### Modification

1. Add a check in the `isAncestor` method that `n.getNetworkLocation()` is not empty, to make the exception clearer.
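The failure mode and a guard against it can be reproduced in isolation. The sketch below is not the `NetworkTopologyImpl` code; the class name, method names, exception type, and message are assumptions chosen for illustration.

```java
final class NetworkLocationCheckSketch {
    /** Mirrors the failing pattern: dropping the leading '/' with substring(1). */
    static String stripLeadingSeparator(String networkLocation) {
        // For "" this throws StringIndexOutOfBoundsException (begin index 1 > length 0).
        return networkLocation.substring(1);
    }

    /** Guarded variant: rejects empty locations with a descriptive message first. */
    static String stripLeadingSeparatorChecked(String networkLocation) {
        if (networkLocation == null || networkLocation.isEmpty()) {
            throw new IllegalArgumentException(
                "Network location must not be empty; check the rack/region mapping");
        }
        return networkLocation.substring(1);
    }
}
```

The guarded variant fails at the same point, but the message names the misconfiguration instead of an index.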

…apache#2965)

### Motivation

When the RocksDB-backed entryMetadataMap is used with multiple ledger directories configured, bookie startup fails with the following exception.

```
12:24:28.530 [main] ERROR org.apache.pulsar.PulsarStandaloneStarter - Failed to start pulsar service.
java.io.IOException: Error open RocksDB database
	at org.apache.bookkeeper.bookie.storage.ldb.KeyValueStorageRocksDB.<init>(KeyValueStorageRocksDB.java:202) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.KeyValueStorageRocksDB.<init>(KeyValueStorageRocksDB.java:89) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.KeyValueStorageRocksDB.lambda$static$0(KeyValueStorageRocksDB.java:62) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.PersistentEntryLogMetadataMap.<init>(PersistentEntryLogMetadataMap.java:87) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.GarbageCollectorThread.createEntryLogMetadataMap(GarbageCollectorThread.java:265) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.GarbageCollectorThread.<init>(GarbageCollectorThread.java:154) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.GarbageCollectorThread.<init>(GarbageCollectorThread.java:133) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.SingleDirectoryDbLedgerStorage.<init>(SingleDirectoryDbLedgerStorage.java:182) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.DbLedgerStorage.newSingleDirectoryDbLedgerStorage(DbLedgerStorage.java:190) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.storage.ldb.DbLedgerStorage.initialize(DbLedgerStorage.java:150) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.bookkeeper.bookie.BookieResources.createLedgerStorage(BookieResources.java:110) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	at org.apache.pulsar.zookeeper.LocalBookkeeperEnsemble.buildBookie(LocalBookkeeperEnsemble.java:328) ~[org.apache.pulsar-pulsar-zookeeper-utils-2.8.1.jar:2.8.1]
	at org.apache.pulsar.zookeeper.LocalBookkeeperEnsemble.runBookies(LocalBookkeeperEnsemble.java:391) ~[org.apache.pulsar-pulsar-zookeeper-utils-2.8.1.jar:2.8.1]
	at org.apache.pulsar.zookeeper.LocalBookkeeperEnsemble.startStandalone(LocalBookkeeperEnsemble.java:521) ~[org.apache.pulsar-pulsar-zookeeper-utils-2.8.1.jar:2.8.1]
	at org.apache.pulsar.PulsarStandalone.start(PulsarStandalone.java:264) ~[org.apache.pulsar-pulsar-broker-2.8.1.jar:2.8.1]
	at org.apache.pulsar.PulsarStandaloneStarter.main(PulsarStandaloneStarter.java:121) [org.apache.pulsar-pulsar-broker-2.8.1.jar:2.8.1]
Caused by: org.rocksdb.RocksDBException: lock hold by current process, acquire time 1640492668 acquiring thread 123145515651072: data/standalone/bookkeeper00/entrylogIndexCache/metadata-cache/LOCK: No locks available
	at org.rocksdb.RocksDB.open(Native Method) ~[org.rocksdb-rocksdbjni-6.10.2.jar:?]
	at org.rocksdb.RocksDB.open(RocksDB.java:239) ~[org.rocksdb-rocksdbjni-6.10.2.jar:?]
	at org.apache.bookkeeper.bookie.storage.ldb.KeyValueStorageRocksDB.<init>(KeyValueStorageRocksDB.java:199) ~[org.apache.bookkeeper-bookkeeper-server-4.15.0-SNAPSHOT.jar:4.15.0-SNAPSHOT]
	... 15 more
```

The cause is that multiple garbage collection threads try to open the same RocksDB database. The first one holds the LOCK file, so the others fail with the exception above.

### Modification

1. Change the default GcEntryLogMetadataCachePath from `getLedgerDirNames()[0] + "/" + ENTRYLOG_INDEX_CACHE` to `null`. If it is `null`, each ledger directory is used for its own cache.
2. Remove the internal directory `entrylogIndexCache`. The directory layout looks like:

```
└── current
    ├── lastMark
    ├── ledgers
    │   ├── 000003.log
    │   ├── CURRENT
    │   ├── IDENTITY
    │   ├── LOCK
    │   ├── LOG
    │   ├── MANIFEST-000001
    │   └── OPTIONS-000005
    ├── locations
    │   ├── 000003.log
    │   ├── CURRENT
    │   ├── IDENTITY
    │   ├── LOCK
    │   ├── LOG
    │   ├── MANIFEST-000001
    │   └── OPTIONS-000005
    └── metadata-cache
        ├── 000003.log
        ├── CURRENT
        ├── IDENTITY
        ├── LOCK
        ├── LOG
        ├── MANIFEST-000001
        └── OPTIONS-000005
```

3. If the user configures `GcEntryLogMetadataCachePath` in `bk_server.conf`, only one ledger directory may be configured for `ledgerDirectories`. Otherwise, the best practice is to keep the default.
4. This PR is best released together with apache#1949.
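The path-resolution rule in the list above can be illustrated with a small sketch. It is an assumption-laden simplification, not the BookKeeper implementation: the class and method names are hypothetical, and rejecting a configured path with several ledger directories by throwing is this sketch's choice (the source only says that combination is unsupported).

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

final class MetadataCachePathSketch {
    /**
     * Returns one cache directory per garbage collection thread.
     * A null configuredPath means "use each ledger directory".
     */
    static List<Path> resolve(String configuredPath, List<String> ledgerDirs) {
        List<Path> result = new ArrayList<>();
        if (configuredPath != null) {
            if (ledgerDirs.size() > 1) {
                // Several threads would open the same RocksDB directory and fight over LOCK.
                throw new IllegalArgumentException(
                    "An explicit cache path supports only one ledger directory");
            }
            result.add(Paths.get(configuredPath));
            return result;
        }
        for (String dir : ledgerDirs) {
            // Matches the layout shown above: <ledgerDir>/current/metadata-cache
            result.add(Paths.get(dir, "current", "metadata-cache"));
        }
        return result;
    }
}
```

Because every ledger directory gets its own `metadata-cache` directory, each RocksDB instance owns a distinct LOCK file.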


…ed (apache#2856)

Descriptions of the changes in this PR:

### Motivation

When the bookie starts, it logs the following error message if the DNS resolver fails to initialize.

```
[main] ERROR org.apache.bookkeeper.client.RackawareEnsemblePlacementPolicyImpl - Failed to initialize DNS Resolver org.apache.bookkeeper.net.ScriptBasedMapping, used default subnet resolver : java.lang.RuntimeException: No network topology script is found when using script based DNS resolver.
```

This is confusing for users.

### Modification

1. Change the log level from error to warn.

### Motivation

The current official docker images do not handle the SIGTERM sent by the docker runtime and so get killed after the timeout. No graceful shutdown occurs. The reason is that the entrypoint does not use `exec` when executing the `bin/bookkeeper` shell script, so the BookKeeper process cannot receive signals from the docker runtime.

### Changes

Use `exec` when calling the `bin/bookkeeper` shell script.

Reviewers: Nicolò Boschi <boschi1997@gmail.com>, Enrico Olivelli <eolivelli@gmail.com>, Lari Hotari <None>, Matteo Merli <mmerli@apache.org>

This closes apache#2857 from Vanlightly/docker-image-handle-sigterm

Fix apache#2823

RocksDB supports several format versions, which use different data structures to implement key-value indexes and differ greatly in performance.

https://rocksdb.org/blog/2019/03/08/format-version-4.html

https://github.com/facebook/rocksdb/blob/d52b520d5168de6be5f1494b2035b61ff0958c11/include/rocksdb/table.h#L368-L394

```C++
// We currently have five versions:
// 0 -- This version is currently written out by all RocksDB's versions by
// default. Can be read by really old RocksDB's. Doesn't support changing
// checksum (default is CRC32).
// 1 -- Can be read by RocksDB's versions since 3.0. Supports non-default
// checksum, like xxHash. It is written by RocksDB when
// BlockBasedTableOptions::checksum is something other than kCRC32c. (version
// 0 is silently upconverted)
// 2 -- Can be read by RocksDB's versions since 3.10. Changes the way we
// encode compressed blocks with LZ4, BZip2 and Zlib compression. If you
// don't plan to run RocksDB before version 3.10, you should probably use
// this.
// 3 -- Can be read by RocksDB's versions since 5.15. Changes the way we
// encode the keys in index blocks. If you don't plan to run RocksDB before
// version 5.15, you should probably use this.
// This option only affects newly written tables. When reading existing
// tables, the information about version is read from the footer.
// 4 -- Can be read by RocksDB's versions since 5.16. Changes the way we
// encode the values in index blocks. If you don't plan to run RocksDB before
// version 5.16 and you are using index_block_restart_interval > 1, you should
// probably use this as it would reduce the index size.
// This option only affects newly written tables. When reading existing
// tables, the information about version is read from the footer.
// 5 -- Can be read by RocksDB's versions since 6.6.0. Full and partitioned
// filters use a generally faster and more accurate Bloom filter
// implementation, with a different schema.
uint32_t format_version = 5;
```

Each format version requires a minimum RocksDB version, and a database cannot be rolled back once it has been upgraded to a new format version.

In our current RocksDB storage code, format_version is hard-coded to 2, which makes it hard to upgrade the format_version and benefit from the performance of newer RocksDB versions.

1. Make the format_version configurable.

Reviewers: Matteo Merli <mmerli@apache.org>, Enrico Olivelli <eolivelli@gmail.com>

This closes apache#2824 from hangc0276/chenhang/make_rocksdb_format_version_configurable
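Reading such a setting with a safe default might look like the sketch below. It uses plain `java.util.Properties` rather than BookKeeper's `ServerConfiguration`; the key name `dbStorage_rocksDB_format_version` and the class name are assumptions made for illustration. The default of 2 and the 0 to 5 range come from the description and the quoted header comment.

```java
import java.util.Properties;

final class FormatVersionConfigSketch {
    // Assumed key name, used here only for illustration.
    static final String KEY = "dbStorage_rocksDB_format_version";
    // The previously hard-coded value, kept as default so existing deployments are unchanged.
    static final int DEFAULT_FORMAT_VERSION = 2;

    static int formatVersion(Properties conf) {
        String raw = conf.getProperty(KEY);
        if (raw == null || raw.trim().isEmpty()) {
            return DEFAULT_FORMAT_VERSION;
        }
        int version = Integer.parseInt(raw.trim());
        // The quoted table.h comment lists versions 0 through 5.
        if (version < 0 || version > 5) {
            throw new IllegalArgumentException("Unsupported format_version: " + version);
        }
        return version;
    }
}
```

Keeping 2 as the default matters because tables written with a newer format version cannot be read after a rollback to an older RocksDB.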

As title, delete duplicated semicolon Reviewers: Andrey Yegorov <None> This closes apache#2810 from gaozhangmin/remove-duplicated-semicolon

Includes the BP-46 design proposal markdown document. Master Issue: apache#2705 Reviewers: Andrey Yegorov <None>, Enrico Olivelli <eolivelli@gmail.com> This closes apache#2706 from Vanlightly/bp-44

…cess of replication

Motivation

Currently the ReplicationStats `numUnderReplicatedLedger` metric is registered in `publishSuspectedLedgersAsync`, but its value does not decrease when a ledger is replicated successfully, so the progress of replication cannot be read from the stat.

Changes

Register a `notifyUnderReplicationLedgerChanged` callback when the auditor starts. The `numUnderReplicatedLedger` value decreases when the under-replicated ledger path is deleted.

Reviewers: Nicolò Boschi <boschi1997@gmail.com>, Enrico Olivelli <eolivelli@gmail.com>, Andrey Yegorov <None>

This closes apache#2805 from gaozhangmin/replication-stats-num-under-replicated-ledgers
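A gauge that goes down as well as up can be modelled by tracking the set of under-replicated ledger IDs. The sketch below is a simplified illustration, not the auditor code: the class and method names are hypothetical, and the two callbacks stand in for the publish step and the path-deletion notification.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

final class UnderReplicatedGaugeSketch {
    // Thread-safe set of ledger IDs currently marked under-replicated.
    private final Set<Long> underReplicated = ConcurrentHashMap.newKeySet();

    /** Stands in for the auditor publishing a suspected ledger. */
    void onLedgerMarkedUnderReplicated(long ledgerId) {
        underReplicated.add(ledgerId);
    }

    /** Stands in for the notification fired when the under-replication path is deleted. */
    void onUnderReplicationPathDeleted(long ledgerId) {
        underReplicated.remove(ledgerId);
    }

    /** Value a metrics gauge would report. */
    int numUnderReplicatedLedgers() {
        return underReplicated.size();
    }
}
```

Using a set rather than a bare counter keeps the value correct if the same ledger is reported twice or a deletion is notified more than once.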

…sOnMetadataServerException occurs in over-replicated ledger GC

### Motivation

- If an exception other than BKNoSuchLedgerExistsOnMetadataServerException occurs in readLedgerMetadata during over-replicated ledger GC, nothing is written to the log. (apache#2844 (comment))

### Changes

- If an exception other than BKNoSuchLedgerExistsOnMetadataServerException occurs in readLedgerMetadata, write the information to the log.

Reviewers: Andrey Yegorov <None>, Nicolò Boschi <boschi1997@gmail.com>

This closes apache#2873 from shustsud/improved_error_handling

Signed-off-by: Eric Shen <ericshenyuhaooutlook.com>

Descriptions of the changes in this PR:

### Motivation

The description of `bin/bookkeeper autorecovery` is wrong: it does not start as a daemon.

### Changes

* Changed the description in the bookkeeper shell
* Updated the doc

Reviewers: Yong Zhang <zhangyong1025.zy@gmail.com>

This closes apache#2910 from ericsyh/fix-bk-cli

### Motivation

I found many flaky tests, such as apache#3031, apache#3034, and apache#3033. Because many flaky tests are actually caused by production code issues, I think adding a flaky-test template is a good way to track them.

### Changes

- Add flaky-test template.

Reviewers: Andrey Yegorov <None>

This closes apache#3035 from mattisonchao/template_flaky_test

```
> Task :bookkeeper-tools-framework:compileTestJava
Execution optimizations have been disabled for task ':bookkeeper-tools-framework:compileTestJava' to ensure correctness due to the following reasons:
  - Gradle detected a problem with the following location: '/Users/mbarnum/src/bookkeeper/tools/framework/build/classes/java/main'. Reason: Task ':bookkeeper-tools-framework:compileTestJava' uses this output of task ':tools:framework:compileJava' without declaring an explicit or implicit dependency. This can lead to incorrect results being produced, depending on what order the tasks are executed. Please refer to https://docs.gradle.org/7.3.3/userguide/validation_problems.html#implicit_dependency for more details about this problem.
```

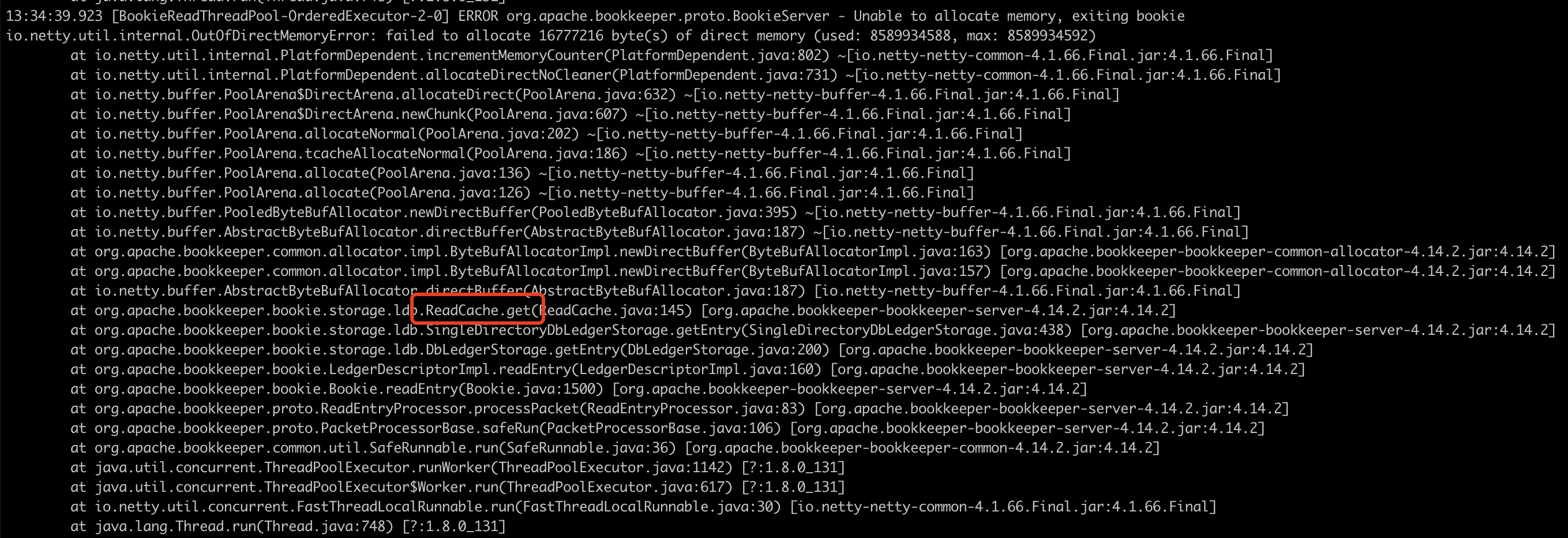

…ding the entry in ReadCache

### Motivation

Original PR: apache#1755. That PR apparently did not update how memory is allocated here: when direct memory is insufficient, the allocation does not fall back to JVM heap memory, and the bookie hangs.

### Changes

Use `OutOfMemoryPolicy` when direct memory is insufficient while reading the entry in `ReadCache`.

Reviewers: Enrico Olivelli <eolivelli@gmail.com>, Andrey Yegorov <None>

This closes apache#2836 from wenbingshen/useOutOfMemoryPolicyInReadCache
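The fallback behaviour can be sketched with plain NIO buffers. This is not BookKeeper's `OutOfMemoryPolicy` or its allocator API; `FallbackAllocatorSketch` is a hypothetical class, and the injectable `IntFunction` exists only so the failure path can be exercised without exhausting real memory.

```java
import java.nio.ByteBuffer;
import java.util.function.IntFunction;

final class FallbackAllocatorSketch {
    /** Tries the direct allocator first and falls back to the JVM heap on OutOfMemoryError. */
    static ByteBuffer allocate(int size, IntFunction<ByteBuffer> directAllocator) {
        try {
            return directAllocator.apply(size);
        } catch (OutOfMemoryError e) {
            // Direct memory is exhausted: serve the request from the heap instead of failing.
            return ByteBuffer.allocate(size);
        }
    }

    /** Convenience overload using the real direct allocator. */
    static ByteBuffer allocate(int size) {
        return allocate(size, ByteBuffer::allocateDirect);
    }
}
```

A heap buffer is slower for I/O than a direct one, but a slower read is preferable to a bookie that stops serving requests.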

### Motivation

Sometimes CI jobs fail due to a timeout. It would be useful to understand what the latest test was doing before being interrupted.

### Changes

* Added a new script for dumping stack traces.
* Added the step to all jobs, run when `cancelled()` is true.

Reviewers: Andrey Yegorov <None>

This closes apache#3042 from nicoloboschi/ci-thread-dump

Descriptions of the changes in this PR:

### Motivation

Some metric values are incorrect, so this PR fixes them. The current fix is problem-driven; a comprehensive review will be done later.

### Changes

Update 2 metrics:

1. Bookie: ReadBytes uses entrySize.
2. Journal: report the journal write error metric.

### Motivation

Changelog: https://netty.io/news/2022/02/08/4-1-74-Final.html

Netty 4.1.74 fixes several DNS resolver bugs.

### Modifications

* Upgrade Netty from 4.1.73.Final to 4.1.74.Final
* Netty 4.1.74.Final depends on netty-tcnative 2.0.48, which is updated as well

Descriptions of the changes in this PR:

### Motivation

RocksDB segfaulted during CompactionTest.

### Changes

RocksDB can segfault if one tries to use it after close. The [shutdown/compaction sequence](apache#3040 (comment)) can lead to such a situation. The fix prevents the segfault.

CompactionTests were updated at some point to use the metadata cache, so the non-cached case was not tested. I added test suites for this case.

Master Issue: apache#3040

Reviewers: Yong Zhang <zhangyong1025.zy@gmail.com>, Nicolò Boschi <boschi1997@gmail.com>

This closes apache#3043 from dlg99/fix/issue3040, closes apache#3040
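One common way to prevent use-after-close on a native resource is to guard every operation with a closed flag. The sketch below shows that pattern on an in-memory map; it is not the actual fix from this PR, whose details are not given here, and `ClosableStoreSketch` is a hypothetical class.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicBoolean;

final class ClosableStoreSketch implements AutoCloseable {
    private final AtomicBoolean closed = new AtomicBoolean(false);
    // Stands in for the native handle that must not be touched after close.
    private final Map<String, String> data = new ConcurrentHashMap<>();

    void put(String key, String value) {
        ensureOpen();
        data.put(key, value);
    }

    String get(String key) {
        ensureOpen();
        return data.get(key);
    }

    boolean isClosed() {
        return closed.get();
    }

    private void ensureOpen() {
        if (closed.get()) {
            // A Java exception is recoverable; a native use-after-free is not.
            throw new IllegalStateException("store is closed");
        }
    }

    @Override
    public void close() {
        // compareAndSet makes close idempotent: the release runs exactly once.
        if (closed.compareAndSet(false, true)) {
            data.clear();
        }
    }
}
```

The flag alone does not stop a close from racing with an operation already in flight; a real native wrapper would typically also need a lock or reference count around each call.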

…oubleshooting on CI

### Motivation

Flaky test on CI

### Changes

Added an extra check and logging to simplify troubleshooting of the flaky test on CI. Cannot reproduce the failure locally after running 100+ times in a loop.

Master Issue: apache#3034

Reviewers: Yong Zhang <zhangyong1025.zy@gmail.com>, Enrico Olivelli <eolivelli@gmail.com>

This closes apache#3049 from dlg99/fix/issue3034, closes apache#3034

…er_improveRocksDB # Conflicts: # bookkeeper-server/src/main/java/org/apache/bookkeeper/bookie/storage/ldb/KeyValueStorageRocksDB.java # bookkeeper-server/src/main/java/org/apache/bookkeeper/bookie/storage/ldb/PersistentEntryLogMetadataMap.java

Descriptions of the changes in this PR:

### Motivation

1. Some old RocksDB parameters are not configurable.
2. Future RocksDB tuning should not require code changes or new bookie configuration options.

### Changes

1. Make all old RocksDB parameters configurable.
2. Use OptionsUtil to initialize all RocksDB parameters.

The old PR #3006 had some rebase errors, so a new PR was opened.

Reviewers: Andrey Yegorov <None>, LinChen <None>

This closes #3056 from StevenLuMT/master_improveRocksDB

Descriptions of the changes in this PR:

1. Some old RocksDB parameters are not configurable.
2. Future RocksDB tuning should not require code changes or new bookie configuration options.

1. Make all old RocksDB parameters configurable.
2. Use OptionsUtil to initialize all RocksDB parameters.

The old PR apache#3006 had some rebase errors, so a new PR was opened.

Reviewers: Andrey Yegorov <None>, LinChen <None>

This closes apache#3056 from StevenLuMT/master_improveRocksDB

(cherry picked from commit 3edbc98)

